Week 3 Lecture 15 Linear Classification

Summary

TLDR: The script discusses the transition from linear regression to linear classification, where the boundary between classes is linear. It introduces discriminant functions, which assign each point to the class with the highest output value, and highlights a limitation of using linear regression for classification known as 'masking.' It also touches on basis transformations as a way around this limitation and suggests, as a rule of thumb, that at least K-1 basis transformations are needed for K classes to avoid masking.

Takeaways

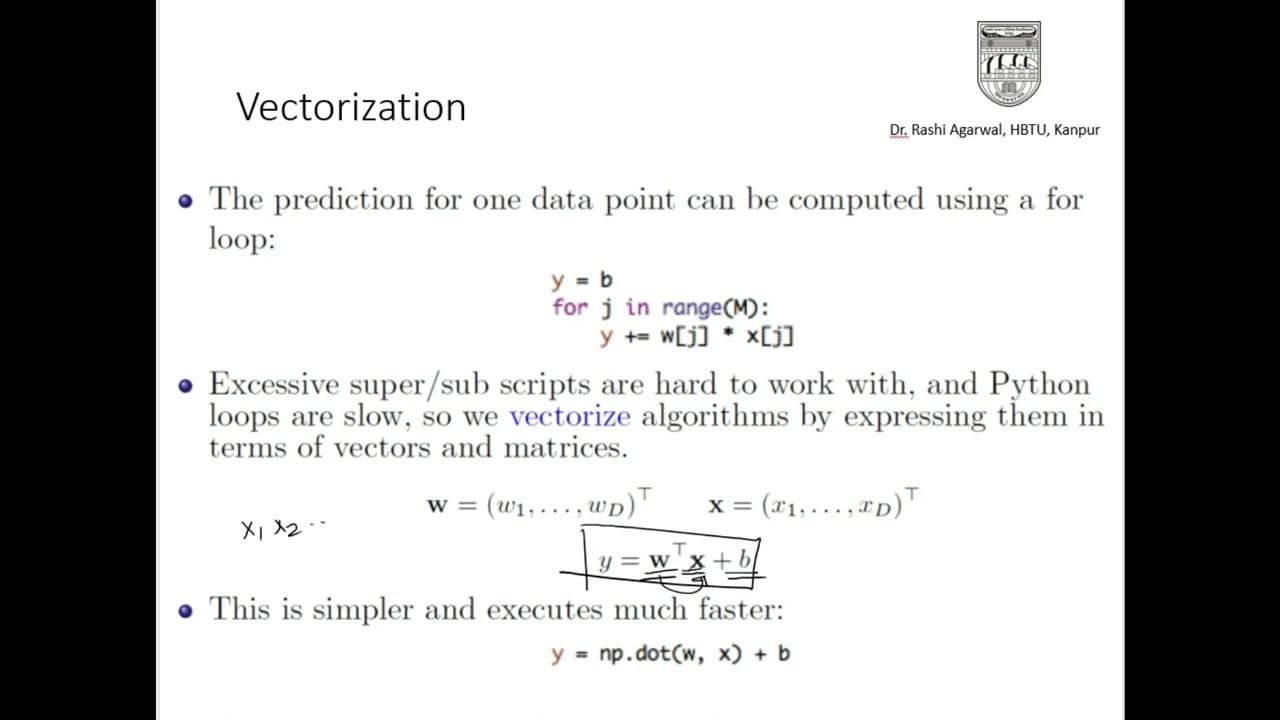

- 🔍 The discussion transitions from linear regression to linear classification methods, emphasizing the concept of a linear boundary separating different classes.

- 📊 Linear classification involves using a linear boundary to separate classes, which can be achieved through linear or nonlinear discriminant functions, as long as they result in a linear decision surface.

- 🤖 Discriminant functions are introduced as a way to model each class, with the class having the highest function value being assigned to a data point.

- 📈 The script explains that for a two-class problem, the separating hyperplane is found where the discriminant functions for each class are equal.

- 🧠 Discriminant functions don't have to be linear themselves: it is enough that some monotone transformation of them is linear, because the decision surface is then still linear.

- 📚 Three discriminant-function approaches to linear classification are mentioned: linear regression on class indicators, logistic regression, and linear discriminant analysis, which (unlike principal component analysis) takes the class labels into account.

- 👥 The second class of methods models the separating hyperplane directly rather than through per-class discriminant functions; the perceptron algorithm is given as an example.

- 🔢 The script outlines a mathematical setup for classification with K classes, using indicator variables and linear regression to predict class probabilities.

- ⚠️ A potential issue with using linear regression for classification is 'masking,' where a class is never predicted because its fitted output is never the largest anywhere in the input space, typically when that class lies between other classes.

- 🔧 The concept of basis transformations is introduced as a method to overcome the limitations of linear models in classification, allowing for more complex decision boundaries.

Q & A

What is the main difference between linear regression and linear classification?

-In linear regression, the response is a continuous value that is a linear function of the inputs. In contrast, linear classification uses a boundary that is linear to separate different classes, with the classification decision based on which side of the boundary the input falls on.

What is meant by a 'linear boundary' in the context of classification?

-A 'linear boundary' refers to a separating surface, typically a hyperplane, that divides different classes in a feature space. This boundary is defined by a linear equation, meaning that it does not curve and can be represented as a straight line in two dimensions or a flat plane in three dimensions.
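
As a worked illustration, assume two classes with linear discriminant functions. The symbols $\delta_k$, $\beta_k$ and $\beta_{k0}$ are notation chosen here for the sketch, not taken from the lecture. Setting the two functions equal gives the equation of a hyperplane:

```latex
\begin{aligned}
\delta_k(x) &= \beta_{k0} + \beta_k^\top x, \qquad k = 1, 2,\\
\delta_1(x) = \delta_2(x)
  &\iff (\beta_{10} - \beta_{20}) + (\beta_1 - \beta_2)^\top x = 0 .
\end{aligned}
```

Points for which the left-hand side of the last equation is positive are assigned to class 1, and the remaining points to class 2.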

What is a discriminant function in the context of classification?

-A discriminant function is a function associated with each class that helps in classifying a data point. If the discriminant function for 'class I' outputs a higher value than for all other classes for a given data point, the data point is classified as belonging to 'class I'.
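
The rule described above can be sketched in a few lines of NumPy. This is a minimal illustration that assumes linear discriminant functions; the weights `B`, intercepts `b0`, the inputs `X`, and the helper name `classify` are all made up for the example.

```python
import numpy as np

def classify(X, B, b0):
    """Assign each row of X to the class with the largest discriminant value.

    X  : (n, p) inputs
    B  : (p, K) one weight column per class (hypothetical values)
    b0 : (K,)   one intercept per class (hypothetical values)
    """
    scores = X @ B + b0               # (n, K): one discriminant value per class
    return np.argmax(scores, axis=1)  # index of the highest-scoring class

# Hypothetical parameters for K = 3 classes in 2 dimensions.
B = np.array([[1.0, -1.0, 0.0],
              [0.0,  0.0, 1.0]])
b0 = np.array([0.0, 0.0, -0.5])

X = np.array([[2.0, 0.0], [-2.0, 0.0], [0.0, 3.0]])
labels = classify(X, B, b0)
print(labels)
```

Each point here lands in a different class because a different discriminant function is largest for it.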

How does the concept of 'masking' in linear regression for classification affect the classification outcome?

-Masking occurs when the fitted outputs of other classes dominate everywhere, so that some class is never chosen for any input point. It typically happens with three or more classes when one class lies between the others: the linear fit to that class's indicator variable is nearly flat, so it is never the largest output, even if the classes are perfectly separable.
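
Masking is easy to reproduce on synthetic data. The sketch below assumes three classes lined up along a single input dimension (the data is invented for illustration) and fits one least-squares regression per class indicator.

```python
import numpy as np

# Three classes lined up along one input dimension (synthetic, illustrative data).
x = np.concatenate([np.linspace(-3, -2, 20),
                    np.linspace(-0.5, 0.5, 20),
                    np.linspace(2, 3, 20)])
y = np.repeat([0, 1, 2], 20)

Y = np.eye(3)[y]                            # (60, 3) indicator matrix
X = np.column_stack([np.ones_like(x), x])   # intercept + raw input
B, *_ = np.linalg.lstsq(X, Y, rcond=None)   # one least-squares fit per class

# Evaluate on a grid with an even number of points, so x = 0 (where all
# three fitted lines meet in this symmetric setup) is not on the grid.
grid = np.linspace(-4, 4, 200)
scores = np.column_stack([np.ones_like(grid), grid]) @ B
pred = scores.argmax(axis=1)
print(np.unique(pred))   # classes that are predicted somewhere on the grid
```

In this symmetric setup the fitted line for the middle class is flat, so one of the outer classes always has the larger output and the middle class should not appear among the predictions.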

Why might linear regression not be the best choice for classification in some cases?

-The main problem raised in the lecture is masking: with three or more classes, a linear regression fit on indicator variables can fail to ever predict some class. Additionally, the outputs of linear regression are not constrained to lie between 0 and 1, so they cannot be directly interpreted as probabilities, which is often desired in classification tasks.

What is the relationship between the number of classes and the required basis transformations for classification?

-The rule of thumb is that with K classes you need at least K - 1 basis transformations of the input (for example, polynomial terms up to degree K - 1 when the classes are lined up) to avoid masking and to give each class a chance to dominate in some region of the input space.
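
A minimal sketch of the rule of thumb, assuming K = 3 classes lined up in one dimension (synthetic data) and polynomial basis functions up to degree K - 1 = 2. The choice of a polynomial basis is an assumption made for the example.

```python
import numpy as np

# Three classes lined up along one input dimension (synthetic, illustrative data).
x = np.concatenate([np.linspace(-3, -2, 20),
                    np.linspace(-0.5, 0.5, 20),
                    np.linspace(2, 3, 20)])
y = np.repeat([0, 1, 2], 20)
Y = np.eye(3)[y]                                  # indicator matrix

def basis(x):
    """Intercept plus two basis transformations of the input: x and x^2."""
    return np.column_stack([np.ones_like(x), x, x ** 2])

B, *_ = np.linalg.lstsq(basis(x), Y, rcond=None)  # regression in the expanded space
pred_train = (basis(x) @ B).argmax(axis=1)
print(np.unique(pred_train))                      # classes predicted on the training set
```

With the quadratic term included, the fit for the middle class can peak near the center, so it gets a region where its output is the largest. The boundaries are still linear in the expanded space of (x, x²).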

How can logistic regression be considered as an alternative to linear regression for classification?

-Logistic regression models the probability of the classes as a function of the inputs and is constrained to output values between 0 and 1, making it more suitable for classification tasks where the outputs are probabilities.
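
For the two-class case, the idea can be sketched as follows. The parameters `w` and `b` are hypothetical rather than fitted, and the point is only to show that the output lies strictly between 0 and 1 while the decision boundary stays linear.

```python
import numpy as np

def sigmoid(z):
    """Logistic function: maps any real number into (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))

w, b = np.array([2.0, -1.0]), 0.5        # hypothetical parameters, not fitted
X = np.array([[0.0, 0.5], [3.0, 1.0], [-2.0, 4.0]])

z = X @ w + b                            # linear function of the inputs
p = sigmoid(z)                           # modelled probability of class 1
labels = (p >= 0.5).astype(int)          # equivalent to thresholding z at 0
print(p, labels)
```

Because the logistic function is monotone, thresholding the probability at 0.5 is the same as thresholding the linear function `z` at 0, which is why the decision surface remains a hyperplane.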

What is the purpose of using indicator variables in linear regression for classification?

-Indicator variables are used to represent the class labels in a binary format (0 or 1) for each class. This allows the linear regression model to be fit to the data for each class separately, with the goal of predicting the expected value or probability of each class given the input features.
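
A small sketch of the encoding, assuming integer class labels 0..K-1. The labels and inputs below are invented for the example.

```python
import numpy as np

labels = np.array([2, 0, 1, 1, 0])       # hypothetical labels, K = 3 classes
X = np.array([[1.0, 2.0], [0.0, 0.5], [3.0, 1.0],
              [2.5, 1.5], [0.2, 0.1]])   # hypothetical inputs, 2 features

K = labels.max() + 1
Y = np.zeros((labels.size, K))
Y[np.arange(labels.size), labels] = 1.0  # Y[i, k] = 1 if point i is in class k

# Regress every indicator column on the inputs at once (with an intercept).
X1 = np.column_stack([np.ones(len(X)), X])
B, *_ = np.linalg.lstsq(X1, Y, rcond=None)
print(Y)
print(B.shape)                           # (features + 1, K)
```

Each column of `B` holds the coefficients of the regression for one class indicator.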

How does the perceptron algorithm differ from linear regression in the context of classification?

-The perceptron algorithm is a linear classifier that models the separating hyperplane directly instead of fitting one discriminant function per class. It updates its weights whenever a training point is misclassified and, if the classes are linearly separable, converges to a hyperplane that separates them, though not necessarily the best one.
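
The update rule can be sketched as below. This is a basic textbook form of the perceptron, assuming labels in {-1, +1}; the function name, learning rate, and toy data are choices made for the example and may differ from the variant presented in the lecture.

```python
import numpy as np

def perceptron(X, y, epochs=100, lr=1.0):
    """Basic perceptron. y must contain -1 / +1 labels. Returns (w, b)."""
    w = np.zeros(X.shape[1])
    b = 0.0
    for _ in range(epochs):
        mistakes = 0
        for xi, yi in zip(X, y):
            if yi * (xi @ w + b) <= 0:   # point is on the wrong side (or on the plane)
                w += lr * yi * xi        # move the hyperplane toward the point
                b += lr * yi
                mistakes += 1
        if mistakes == 0:                # a full pass without errors: stop
            break
    return w, b

# Tiny linearly separable toy set (hypothetical data).
X = np.array([[2.0, 1.0], [3.0, 2.0], [-1.0, -2.0], [-2.0, -1.0]])
y = np.array([1, 1, -1, -1])
w, b = perceptron(X, y)
print(w, b)
```

On separable data the loop stops once every point is on the correct side. On non-separable data it simply runs for the full number of epochs.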

What is the significance of the separating hyperplane in linear discriminant analysis?

-Linear discriminant analysis looks for the direction along which the classes are best separated; the separating hyperplane is perpendicular to that direction. The method resembles principal component analysis in that it finds directions in the input space, but it takes the class labels into account when deriving them.
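
For two classes, one common way to compute such a direction is Fisher's formulation, in which the direction is proportional to the inverse within-class scatter matrix times the difference of the class means. The sketch below uses that formulation on synthetic Gaussian data; the midpoint threshold assumes equal class priors and a shared covariance, which are assumptions of this example.

```python
import numpy as np

rng = np.random.default_rng(0)
X0 = rng.normal(loc=[0.0, 0.0], scale=1.0, size=(50, 2))   # class 0 (synthetic)
X1 = rng.normal(loc=[4.0, 4.0], scale=1.0, size=(50, 2))   # class 1 (synthetic)

mu0, mu1 = X0.mean(axis=0), X1.mean(axis=0)
# Within-class scatter: sum of the two per-class scatter matrices.
Sw = (X0 - mu0).T @ (X0 - mu0) + (X1 - mu1).T @ (X1 - mu1)
w = np.linalg.solve(Sw, mu1 - mu0)        # discriminant direction

# Hyperplane w.x = threshold, placed midway between the projected means.
threshold = w @ (mu0 + mu1) / 2
X = np.vstack([X0, X1])
y = np.repeat([0, 1], 50)
pred = (X @ w > threshold).astype(int)
accuracy = (pred == y).mean()
print(w, accuracy)
```

Unlike a principal component, the direction `w` depends on which class each point belongs to, through the class means and the within-class scatter.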

How can one interpret the output of a linear regression model in the context of classification?

-The output of a linear regression model for each class can be interpreted as the expected value of the class label given the input features. However, these outputs should not be directly interpreted as probabilities due to the lack of constraints in linear regression models.
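
The point about probabilities can be checked numerically. The sketch below assumes synthetic one-dimensional data with three classes and a model that includes an intercept.

```python
import numpy as np

# Synthetic one-dimensional data with three classes (illustrative only).
x = np.concatenate([np.linspace(-3, -2, 20),
                    np.linspace(-0.5, 0.5, 20),
                    np.linspace(2, 3, 20)])
Y = np.eye(3)[np.repeat([0, 1, 2], 20)]             # indicator matrix

X = np.column_stack([np.ones_like(x), x])
B, *_ = np.linalg.lstsq(X, Y, rcond=None)

grid = np.linspace(-4, 4, 9)
scores = np.column_stack([np.ones_like(grid), grid]) @ B
print(scores.sum(axis=1))                           # fitted outputs per point, summed
print(scores.min(), scores.max())                   # range of the fitted outputs
```

With an intercept in the model the fitted outputs sum to 1 at every point, but individual outputs can drop below 0 or rise above 1, so they are not valid probabilities.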
