Building a Serverless Data Lake (SDLF) with AWS from scratch

Summary

TLDRThis video from the 'knowledge amplifier' channel dives into the AWS Serverless Data Lake Framework (SDLF), an open-source project that streamlines the setup of data lake systems. It outlines the core AWS services integral to SDLF, such as S3, Lambda, Glue, and Step Functions, and discusses their roles in creating a reusable, serverless architecture. The script covers the framework's architecture, detailing the data flow from raw ingestion to processed analytics, and highlights the differences between near real-time processing in Stage A and batch processing in Stage B. It also touches on CI/CD practices for data pipeline development and provides references to related tutorial videos for practical implementation guidance.

Takeaways

- 📚 The video introduces the AWS Serverless Data Lake Framework (SDLF), a framework designed to handle large volumes of structured, semi-structured, and unstructured data.

- 🛠️ The framework is built using core AWS serverless services including AWS S3 for storage, DynamoDB for cataloging data, AWS Lambda for light data transformations, AWS Glue for heavy data transformations, and AWS Step Functions for orchestration.

- 🏢 Companies like Formula 1 Motorsports, Amazon retail Ireland, and Naranja Finance utilize the SDLF to implement data lakes within their organizations, highlighting its industry adoption.

- 🌐 The framework supports both near real-time data processing in Stage A and batch processing in Stage B, catering to different data processing needs.

- 🔄 Stage A focuses on light transformations and is triggered by events landing in S3, making it suitable for immediate data processing tasks.

- 📈 Stage B is designed for heavy transformations using AWS Glue and is optimized for processing large volumes of data in batches, making it efficient for periodic data processing tasks.

- 🔧 The video script explains the architecture of SDLF, detailing the flow from raw data ingestion to processed data ready for analytics.

- 🔒 Data quality checks are emphasized as crucial for ensuring the reliability of data used in business decisions, with a dedicated Lambda function suggested for this purpose.

- 🔄 The script outlines the use of AWS services for data transformation, including the use of AWS Step Functions to manage workflows and AWS Lambda for executing tasks.

- 🔧 The importance of reusability in a framework is highlighted, with the SDLF being an open-source project that can be adapted and reused by different organizations.

- 🔄 CI/CD pipelines are discussed for managing project-specific code changes, emphasizing the need to implement continuous integration and delivery for variable components of the framework.

Q & A

What is the AWS Serverless Data Lake Framework (SDLF)?

-The AWS Serverless Data Lake Framework (SDLF) is an open-source project that provides a data platform to accelerate the delivery of enterprise data lakes. It utilizes various AWS serverless services to create a reusable framework for data storage, processing, and security.

What are the core AWS services used in the SDLF?

-The core AWS services used in the SDLF include AWS S3 for storage, DynamoDB for cataloging data, AWS Lambda and AWS Glue for compute, and AWS Step Functions for orchestration.

How does the SDLF handle data ingestion from various sources?

-Data from various sources is ingested into the raw layer of the SDLF, which is an S3 location. The data can come in various formats, including structured, semi-structured, and unstructured data.

What is the purpose of the Lambda function in the data ingestion process?

-The Lambda function acts as a router, receiving event notifications from S3 and forwarding the event to a team-specific SQS queue based on the file's landing location or filename.

Can you explain the difference between the raw, staging, and processed layers in the SDLF architecture?

-The raw layer contains the ingested data in its original format. The staging layer stores data after light transformations, such as data type checks or duplicate removal. The processed layer holds the data after heavy transformations, such as joins, filters, and aggregations, making it ready for analytics.

How does the SDLF ensure data quality in the ETL pipeline?

-The SDLF uses a Lambda function to perform data quality validation. This function can implement data quality frameworks to ensure the data generated by the ETL pipeline is of good quality before it is used for analytics.

What is the role of AWS Step Functions in the SDLF?

-AWS Step Functions are used for orchestration in the SDLF. They manage the workflow of data processing, starting from light transformations in the staging layer to heavy transformations in the processed layer.

How does the SDLF differentiate between Stage A and Stage B in terms of data processing?

-Stage A is near real-time, processing data as soon as it lands in S3 and triggers a Lambda function. Stage B, on the other hand, is for batch processing, where data is accumulated over a period and then processed together using AWS Glue.

What is the significance of using CI/CD pipelines in the SDLF?

-CI/CD pipelines are used to manage the deployment of project-specific code for light transformations and AWS Glue scripts. They ensure that only the variable parts of the project are updated, streamlining the development and deployment process.

How can one implement the SDLF using their own AWS services?

-To implement the SDLF, one can refer to the provided reference videos that cover creating event-based projects using S3, Lambda, and SQS, triggering AWS Step Functions from Lambda, and interacting between Step Functions and AWS Glue, among other topics.

Outlines

Этот раздел доступен только подписчикам платных тарифов. Пожалуйста, перейдите на платный тариф для доступа.

Перейти на платный тарифMindmap

Этот раздел доступен только подписчикам платных тарифов. Пожалуйста, перейдите на платный тариф для доступа.

Перейти на платный тарифKeywords

Этот раздел доступен только подписчикам платных тарифов. Пожалуйста, перейдите на платный тариф для доступа.

Перейти на платный тарифHighlights

Этот раздел доступен только подписчикам платных тарифов. Пожалуйста, перейдите на платный тариф для доступа.

Перейти на платный тарифTranscripts

Этот раздел доступен только подписчикам платных тарифов. Пожалуйста, перейдите на платный тариф для доступа.

Перейти на платный тарифПосмотреть больше похожих видео

Intro to Databricks Lakehouse Platform Architecture and Security

AWS Glue 5.0 Announced: What's New and Why You Should Upgrade

51. Databricks | Pyspark | Delta Lake: Introduction to Delta Lake

Intel's Lunar Lake AI Chip Event: Everything Revealed in 10 Minutes

Only Data Engineering Roadmap You Need 2025

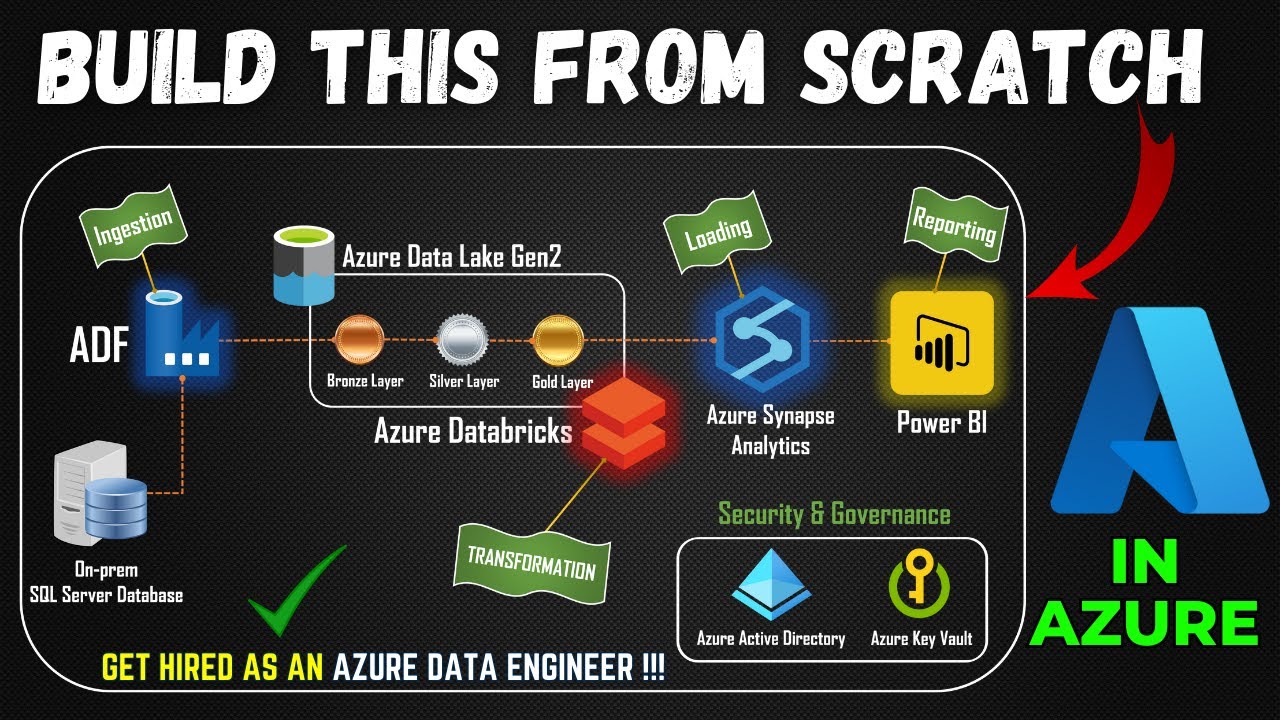

Part 1- End to End Azure Data Engineering Project | Project Overview

5.0 / 5 (0 votes)