Easy 100% Local RAG Tutorial (Ollama) + Full Code

Summary

TL;DR: The video is a tutorial on setting up a local, offline Retrieval-Augmented Generation (RAG) system that uses Ollama to run the model. The process involves converting a PDF to text, creating embeddings, and querying the model to extract information. The presenter demonstrates how to download and install Ollama, set up the environment, and run a Python script to extract data from a PDF. The presenter also shows how to adjust parameters for better results and invites viewers to try the tutorial from the GitHub repo linked in the video description.

Takeaways

- 😀 The video demonstrates how to extract information from a PDF file using a local system.

- 📄 The presenter converted news from the previous day into a PDF and then into a text file for processing.

- 🔍 The process involves using Python scripts to handle the PDF and text data for further analysis.

- 📝 The text from the PDF is appended to a text file with each chunk on a separate line, the layout the presenter found to work best.

- 🤖 The video introduces the use of a local RAG (Retrieval-Augmented Generation) system for querying the data.

- 🧐 The system can answer questions about the content, such as statements made by Joe Biden, by pulling context from the documents.

- 💻 The setup is completely offline and requires about 70 lines of code, making it lightweight and easy to implement.

- 🔧 The video provides a tutorial on setting up the system, including downloading and installing necessary components.

- 🔗 The tutorial and code are available on a GitHub repository, which viewers can clone or fork to try for themselves.

- 🛠️ Adjustments can be made to the system, such as changing the number of top results (top K) displayed in the output.

- 📈 The system is not perfect but is good enough for the presenter's use case, suggesting it's suitable for personal or small-scale projects.

Q & A

What is the purpose of the script?

- The script demonstrates how to extract information from a PDF file and create embeddings for an offline retrieval-augmented generation (RAG) system using a local model.

What is the first step mentioned in the script for setting up the RAG system?

- The first step is to save the previous day's news as a PDF file and then append its text to a text file, with each chunk on a separate line.

Why is the text from the PDF appended with each chunk on a separate line?

- The presenter found that putting each chunk on a separate line worked best for creating embeddings and retrieving relevant information.
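
The one-chunk-per-line layout described above can be sketched as follows. This is a minimal illustration rather than the presenter's code: the sentence-based splitting, the 1000-character limit, and the names `chunk_text` and `append_chunks` are all assumptions made for the example.

```python
import re


def chunk_text(text: str, max_chars: int = 1000) -> list[str]:
    """Split text into sentence-aligned chunks of roughly max_chars each.

    max_chars is an assumed default, not a value taken from the video.
    A single sentence longer than max_chars is kept whole.
    """
    # Collapse all whitespace (including newlines) so a chunk fits on one line.
    text = re.sub(r"\s+", " ", text).strip()
    if not text:
        return []
    # Split after sentence-ending punctuation that is followed by a space.
    sentences = re.split(r"(?<=[.!?]) ", text)
    chunks: list[str] = []
    current = ""
    for sentence in sentences:
        # Start a new chunk when adding this sentence would exceed the limit.
        if current and len(current) + 1 + len(sentence) > max_chars:
            chunks.append(current)
            current = sentence
        else:
            current = f"{current} {sentence}".strip()
    if current:
        chunks.append(current)
    return chunks


def append_chunks(path: str, chunks: list[str]) -> None:
    """Append each chunk to the file at path, one chunk per line."""
    with open(path, "a", encoding="utf-8") as f:
        for chunk in chunks:
            f.write(chunk + "\n")
```

Because whitespace is collapsed before splitting, no chunk contains a newline, so reading the file back line by line recovers exactly one chunk per line.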

What command is used to start the RAG system in the script?

- The command used to start the RAG system is 'python localrag.py'.

How does the script handle the retrieval of information about Joe Biden?

- The script runs the search query 'what did Joe Biden say' and retrieves context from the documents, showing the chunks that mention President Biden.
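
One way the retrieved chunks might be handed to the model is sketched below. The summary does not show how the script builds its prompt, so the function name `build_messages`, the instruction wording, and the role/content message layout are assumptions for illustration. Sending the messages to the local model is deliberately left out.

```python
def build_messages(question: str, context_chunks: list[str]) -> list[dict[str, str]]:
    """Combine retrieved chunks and the user's question into chat messages.

    Hypothetical helper: the prompt wording is an assumption, not the
    presenter's actual prompt.
    """
    # Present each retrieved chunk as its own bullet so the model can tell them apart.
    context = "\n".join(f"- {chunk.strip()}" for chunk in context_chunks)
    system = (
        "Answer using only the context provided. "
        "If the context does not contain the answer, say so."
    )
    if context:
        user = f"Context:\n{context}\n\nQuestion: {question}"
    else:
        # Nothing was retrieved, so pass the question through on its own.
        user = f"Question: {question}"
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": user},
    ]
```

For the query in the video, `build_messages("what did Joe Biden say", retrieved_chunks)` would produce a user message listing the retrieved chunks followed by the question, where `retrieved_chunks` stands for whatever the retrieval step returned.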

What is the significance of setting 'top K' to three in the script?

- Setting 'top K' to three means that the system pulls the three chunks that are most relevant to the search query.
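
The effect of 'top K' can be illustrated with a small ranking sketch. It assumes that relevance is measured by cosine similarity between the query embedding and each chunk embedding, which the summary does not confirm. The names `cosine_similarity` and `top_k_chunks` are hypothetical, and the embeddings are assumed to have been computed already.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k_chunks(
    query_vec: list[float],
    chunk_vecs: list[list[float]],
    chunks: list[str],
    top_k: int = 3,
) -> list[str]:
    """Return the top_k chunks whose embeddings are most similar to the query."""
    scored = [(cosine_similarity(query_vec, vec), i) for i, vec in enumerate(chunk_vecs)]
    # Highest similarity first; ties keep the original chunk order.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    # Never ask for more chunks than exist, and treat negative values as zero.
    k = max(0, min(top_k, len(scored)))
    return [chunks[i] for _, i in scored[:k]]
```

Raising `top_k` from 3 to 5 simply returns more of the ranked list, which gives the model more context at the cost of a longer prompt.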

What is the main advantage of using the described RAG system?

- The main advantage is that the RAG system is lightweight, easy to use, quick, and operates 100% locally without the need for an internet connection.

How can the user adjust the number of results retrieved by the RAG system?

- The user can adjust the 'top K' value in the script to change the number of results retrieved, for example, changing it to five for more results.

What is the recommended way to obtain the code for setting up the RAG system?

- The recommended way is to visit the GitHub repo provided in the description, clone the repository, and follow the instructions there.

What is the minimum requirement for the PDF file before it can be processed by the RAG system?

- The PDF file must be uploaded and processed with 'python pdf.py' so that its text is in the correct format, with each chunk on a separate line.

How can the user ensure that the RAG system is working correctly?

- The user can test the system by asking questions related to the content of the PDF, such as 'what did Joe Biden say', and checking if relevant chunks are retrieved.

Outlines

Этот раздел доступен только подписчикам платных тарифов. Пожалуйста, перейдите на платный тариф для доступа.

Перейти на платный тарифMindmap

Этот раздел доступен только подписчикам платных тарифов. Пожалуйста, перейдите на платный тариф для доступа.

Перейти на платный тарифKeywords

Этот раздел доступен только подписчикам платных тарифов. Пожалуйста, перейдите на платный тариф для доступа.

Перейти на платный тарифHighlights

Этот раздел доступен только подписчикам платных тарифов. Пожалуйста, перейдите на платный тариф для доступа.

Перейти на платный тарифTranscripts

Этот раздел доступен только подписчикам платных тарифов. Пожалуйста, перейдите на платный тариф для доступа.

Перейти на платный тарифПосмотреть больше похожих видео

Chat With Documents Using ChainLit, LangChain, Ollama & Mistral 🧠

Llama-index for beginners tutorial

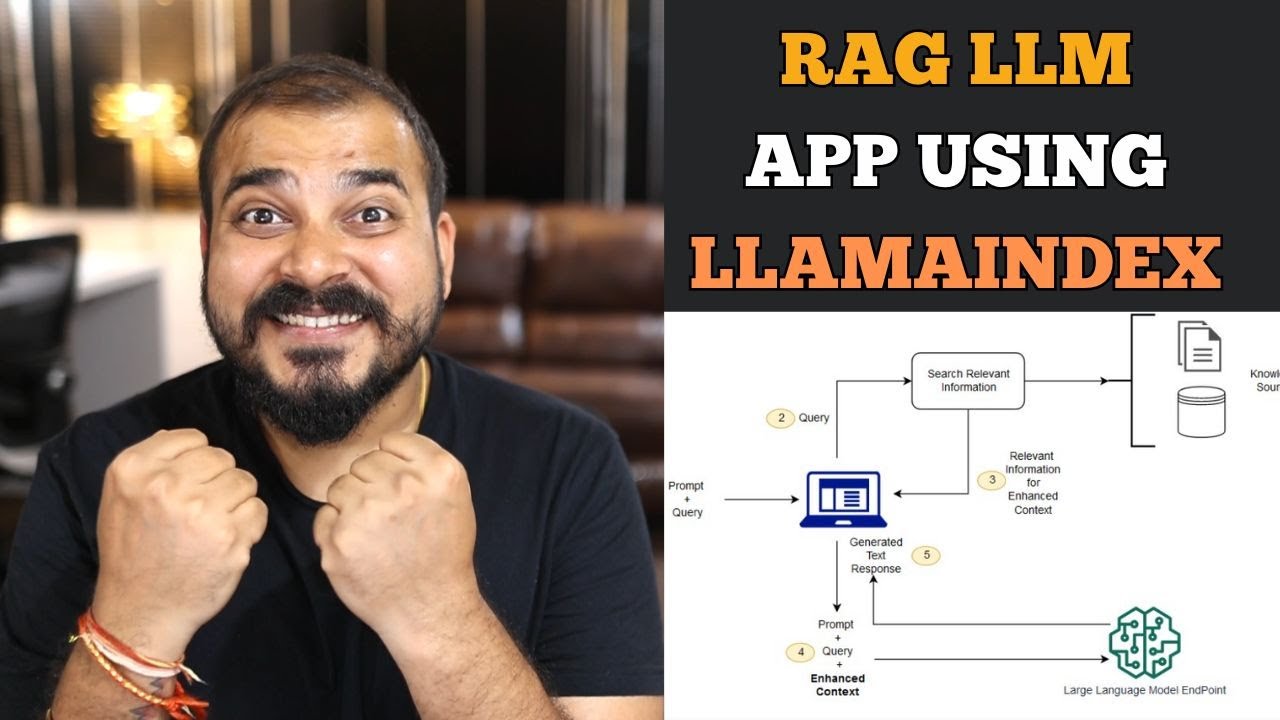

End to end RAG LLM App Using Llamaindex and OpenAI- Indexing and Querying Multiple pdf's

Realtime Powerful RAG Pipeline using Neo4j(Knowledge Graph Db) and Langchain #rag

n8n RAG system done right!

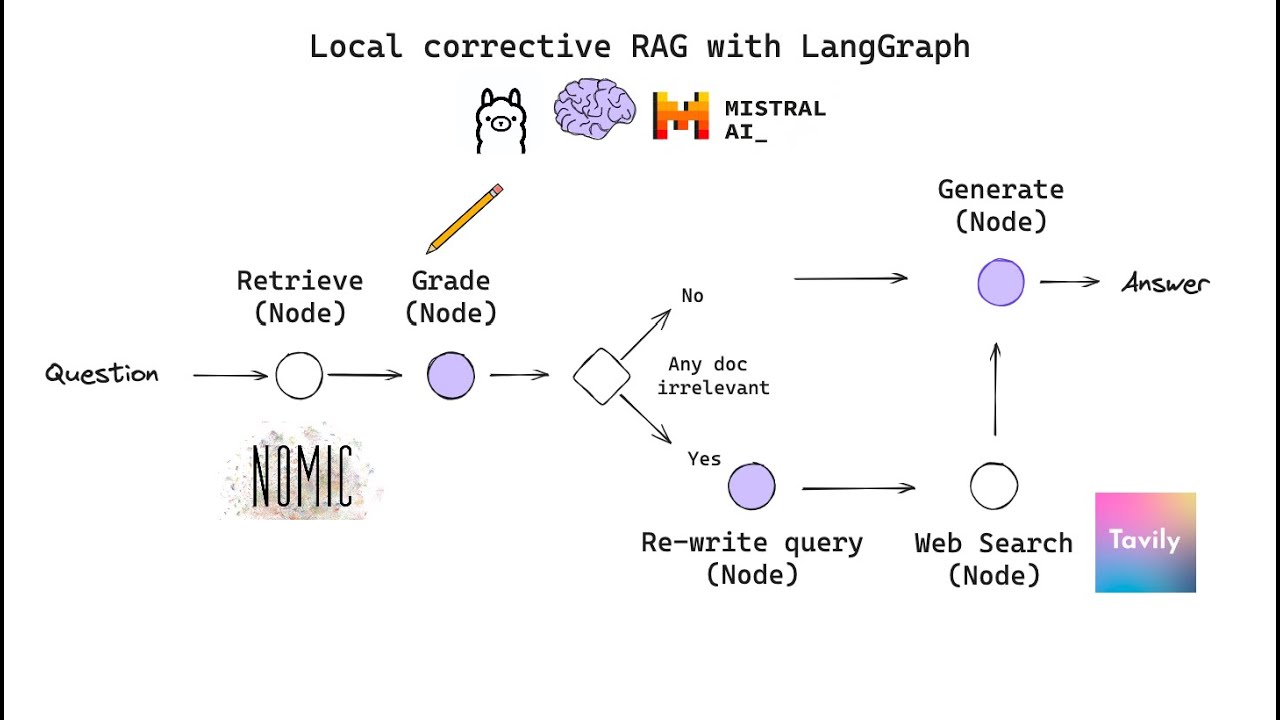

Building Corrective RAG from scratch with open-source, local LLMs

5.0 / 5 (0 votes)