Deep Belief Nets - Ep. 7 (Deep Learning SIMPLIFIED)

Summary

TLDR

This video explores Deep Belief Networks (DBNs), a deep learning model developed by Geoff Hinton. A DBN is a stack of Restricted Boltzmann Machines (RBMs) trained one layer at a time to capture increasingly complex patterns in data. This unsupervised, layer-by-layer pre-training sidesteps the vanishing gradient problem. Because the features are learned without labels, only a small labeled dataset is needed for the final fine-tuning, which makes DBNs practical for real-world applications. GPUs make training fast, and the resulting networks are more accurate than shallow networks. The video also contrasts DBNs with traditional models and explains their role in advancing deep learning.

Takeaways

- 😀 DBNs combine multiple stacked RBMs and were introduced as a way around the vanishing gradient problem, which makes deep networks hard to train with backpropagation alone.

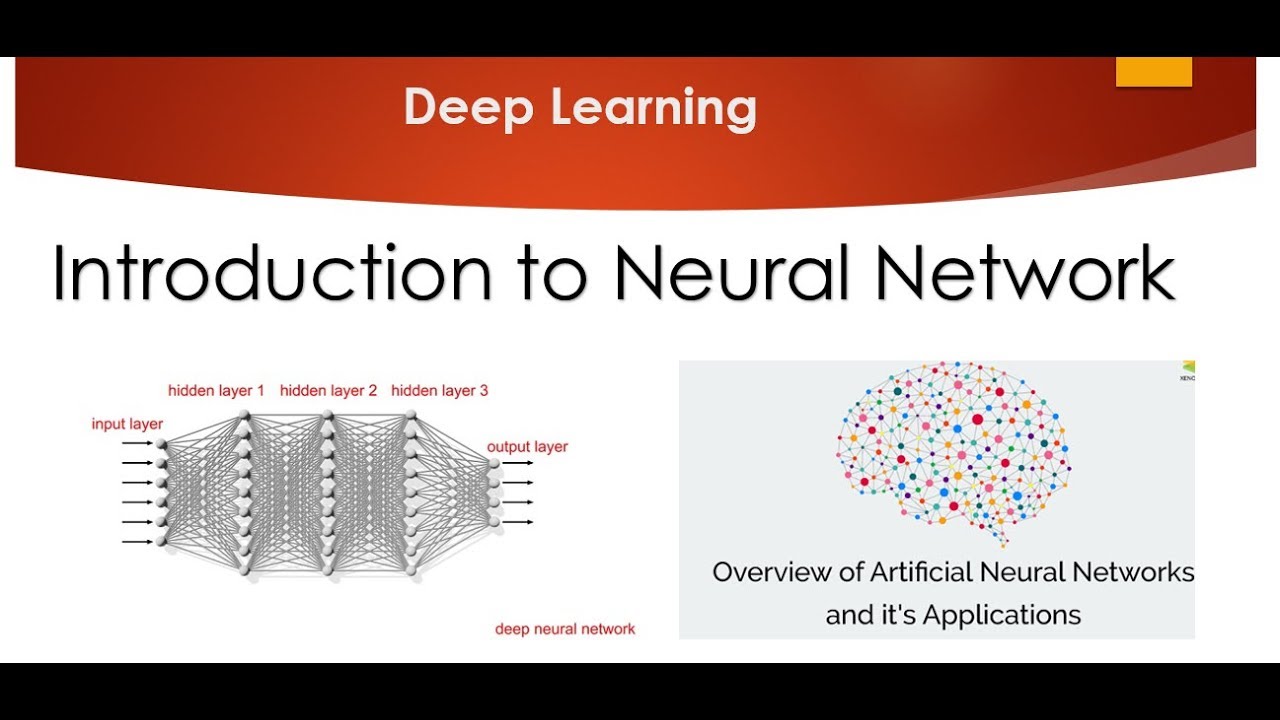

- 😀 A Deep Belief Network (DBN) is structured like a Multi-Layer Perceptron (MLP) but differs in how it is trained, allowing for better performance.

- 😀 DBNs are built by stacking multiple RBMs, where the output of one layer becomes the input for the next, creating a hierarchical structure.

- 😀 The training process of DBNs involves unsupervised learning first, where each RBM layer learns to reconstruct its input, followed by supervised learning to fine-tune the network.

- 😀 Each RBM in a DBN models the entire input, so the network is refined globally, one layer after another, rather than starting from small local features as the early layers of a convolutional network do.

- 😀 A key strength of DBNs is that they can achieve good performance with a small amount of labeled data, making them efficient for real-world applications.

- 😀 After the RBM layers are trained, only a small labeled dataset is needed to attach names (labels) to the patterns the network has already learned.

- 😀 The process of fine-tuning the DBN with supervised learning results in improved accuracy, even with minimal labeled data.

- 😀 One major advantage of DBNs is that they can be trained relatively quickly with GPUs, making them suitable for large-scale tasks.

- 😀 Because layer-wise pre-training sidesteps the vanishing gradient problem, deep networks built as DBNs can be trained effectively and reach higher accuracy than shallow networks.

- 😀 The modular nature of DBNs, using stacked RBMs, allows them to outperform single-layer models and adapt to complex data patterns.

Q & A

What is the primary function of an RBM in the context of DBNs?

-An RBM (Restricted Boltzmann Machine) extracts features and reconstructs its inputs, acting as a building block for the deeper layers of a Deep Belief Network (DBN).
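The reconstruct-your-input behaviour can be sketched as a small binary RBM trained with one step of contrastive divergence (CD-1). This is a minimal pure-Python sketch under assumptions the video does not state: binary (Bernoulli) units, CD-1 as the training rule, and the class name `RBM`, the toy dataset, and the learning rate are all illustrative choices.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


class RBM:
    """Binary (Bernoulli) RBM trained with one step of contrastive divergence (CD-1)."""

    def __init__(self, n_visible, n_hidden, seed=0):
        self.rng = random.Random(seed)
        self.n_visible, self.n_hidden = n_visible, n_hidden
        # Small random weights W[i][j] between visible unit i and hidden unit j; zero biases.
        self.W = [[self.rng.gauss(0.0, 0.1) for _ in range(n_hidden)] for _ in range(n_visible)]
        self.b_v = [0.0] * n_visible
        self.b_h = [0.0] * n_hidden

    def hidden_probs(self, v):
        # p(h_j = 1 | v) = sigmoid(b_h[j] + sum_i v[i] * W[i][j])
        return [sigmoid(self.b_h[j] + sum(v[i] * self.W[i][j] for i in range(self.n_visible)))
                for j in range(self.n_hidden)]

    def visible_probs(self, h):
        # p(v_i = 1 | h) = sigmoid(b_v[i] + sum_j W[i][j] * h[j])
        return [sigmoid(self.b_v[i] + sum(self.W[i][j] * h[j] for j in range(self.n_hidden)))
                for i in range(self.n_visible)]

    def cd1_update(self, v0, lr=0.1):
        # Positive phase: hidden activity driven by the real input.
        ph0 = self.hidden_probs(v0)
        h0 = [1.0 if self.rng.random() < p else 0.0 for p in ph0]
        # Negative phase: one Gibbs step produces a reconstruction of the input.
        v1 = self.visible_probs(h0)
        ph1 = self.hidden_probs(v1)
        # Move toward data statistics and away from reconstruction statistics.
        for i in range(self.n_visible):
            for j in range(self.n_hidden):
                self.W[i][j] += lr * (v0[i] * ph0[j] - v1[i] * ph1[j])
            self.b_v[i] += lr * (v0[i] - v1[i])
        for j in range(self.n_hidden):
            self.b_h[j] += lr * (ph0[j] - ph1[j])

    def reconstruction_error(self, data):
        # Mean squared error between inputs and their up-then-down reconstructions.
        total = 0.0
        for v in data:
            r = self.visible_probs(self.hidden_probs(v))
            total += sum((a - b) ** 2 for a, b in zip(v, r))
        return total / len(data)


# Toy unlabeled data: three binary patterns, repeated.
data = [[1, 1, 0, 0, 0, 0], [0, 0, 1, 1, 0, 0], [0, 0, 0, 0, 1, 1]] * 10
rbm = RBM(n_visible=6, n_hidden=3)
before = rbm.reconstruction_error(data)
for epoch in range(200):
    for v in data:
        rbm.cd1_update(v)
after = rbm.reconstruction_error(data)
print(f"reconstruction error: {before:.3f} -> {after:.3f}")
```

Reconstruction error is only a rough progress signal for an RBM, but it should fall as the weights learn the three input patterns.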

How does a Deep Belief Network (DBN) address the vanishing gradient problem?

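-A DBN sidesteps the vanishing gradient problem by training its stack of RBMs greedily, one layer at a time, without labels. Because each RBM is trained on its own, no gradient has to travel through the whole deep network during pre-training. The pre-trained weights then give the final supervised fine-tuning a good starting point, so the weak gradients that reach the lower layers matter much less.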
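A toy calculation shows why gradients vanish in deep sigmoid networks: the sigmoid's derivative never exceeds 0.25, so backpropagating through many sigmoid layers multiplies the gradient by many small factors. The sketch assumes a hypothetical one-unit-wide chain with weight 1 and pre-activation 0, which is the best case for the derivative; real networks have many units and varying weights, so this only illustrates the effect.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_grad(x):
    s = sigmoid(x)
    return s * (1.0 - s)  # largest value is 0.25, reached at x = 0


def gradient_scale(depth, weight=1.0, pre_activation=0.0):
    """Factor applied to a gradient backpropagated through `depth` layers of a
    hypothetical one-unit-wide sigmoid chain where every layer has the same
    weight and pre-activation."""
    scale = 1.0
    for _ in range(depth):
        # Chain rule: each layer contributes weight * sigmoid'(pre-activation).
        scale *= weight * sigmoid_grad(pre_activation)
    return scale


for depth in (1, 2, 5, 10):
    print(depth, gradient_scale(depth))
# 1 0.25
# 2 0.0625
# 5 0.0009765625
# 10 9.5367431640625e-07
```

After ten layers the gradient is roughly a millionth of its original size, which is why training every layer at once with backpropagation struggles and why training one RBM at a time helps.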

What is the main difference between a DBN and a Multilayer Perceptron (MLP)?

-A DBN and an MLP share the same network structure; the key difference is how they are trained. A DBN is first pre-trained layer by layer as a stack of unsupervised RBMs and then fine-tuned with labels, while an MLP is typically trained end to end with backpropagation starting from random weights.

How does training a DBN differ from training a traditional deep network?

-In a DBN, each RBM layer is trained on its own, one after another, in an unsupervised manner to reconstruct its input. This stepwise training contrasts with traditional deep networks, where all layers are typically trained simultaneously using backpropagation.
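The stepwise procedure can be sketched as greedy layer-wise pre-training: train one RBM, freeze it, convert the data into that RBM's hidden probabilities, and train the next RBM on those. This minimal sketch assumes binary RBMs trained with CD-1; the helper names (`up`, `down`, `train_rbm`, `pretrain_dbn`), the layer sizes, and the toy data are hypothetical.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def up(W, b_h, v):
    # Hidden-unit probabilities p(h_j = 1 | v) for one RBM.
    return [sigmoid(b_h[j] + sum(v[i] * W[i][j] for i in range(len(v)))) for j in range(len(b_h))]


def down(W, b_v, h):
    # Visible-unit probabilities p(v_i = 1 | h): the RBM's reconstruction.
    return [sigmoid(b_v[i] + sum(W[i][j] * h[j] for j in range(len(h)))) for i in range(len(b_v))]


def train_rbm(data, n_hidden, epochs=50, lr=0.1, seed=0):
    """Train a single binary RBM with CD-1 and return (W, b_v, b_h)."""
    rng = random.Random(seed)
    n_visible = len(data[0])
    W = [[rng.gauss(0.0, 0.1) for _ in range(n_hidden)] for _ in range(n_visible)]
    b_v, b_h = [0.0] * n_visible, [0.0] * n_hidden
    for _ in range(epochs):
        for v0 in data:
            ph0 = up(W, b_h, v0)
            h0 = [1.0 if rng.random() < p else 0.0 for p in ph0]
            v1 = down(W, b_v, h0)
            ph1 = up(W, b_h, v1)
            for i in range(n_visible):
                for j in range(n_hidden):
                    W[i][j] += lr * (v0[i] * ph0[j] - v1[i] * ph1[j])
                b_v[i] += lr * (v0[i] - v1[i])
            for j in range(n_hidden):
                b_h[j] += lr * (ph0[j] - ph1[j])
    return W, b_v, b_h


def pretrain_dbn(data, layer_sizes, epochs=50):
    """Greedy layer-wise pre-training: each RBM is trained, then frozen, and its
    hidden probabilities become the visible data for the next RBM."""
    layers, x = [], data
    for k, n_hidden in enumerate(layer_sizes):
        W, b_v, b_h = train_rbm(x, n_hidden, epochs=epochs, seed=k)
        layers.append((W, b_v, b_h))
        x = [up(W, b_h, v) for v in x]  # this layer's hidden layer = next layer's visible layer
    return layers


data = [[1, 1, 0, 0, 0, 0], [0, 0, 1, 1, 0, 0], [0, 0, 0, 0, 1, 1]] * 5
dbn = pretrain_dbn(data, layer_sizes=[4, 3, 2])
print([(len(W), len(W[0])) for W, _, _ in dbn])  # [(6, 4), (4, 3), (3, 2)]
```

Passing hidden probabilities (rather than sampled binary states) up to the next layer is one common choice; sampling binary states is another.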

What role does supervised learning play in training a DBN?

-Supervised learning in a DBN is used to fine-tune the model after the unsupervised pre-training phase. A small labeled dataset is used to adjust the model’s weights, ensuring that it can correctly associate learned patterns with specific labels.
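A minimal sketch of fine-tuning, assuming a network with one pre-trained hidden layer and a new softmax output layer, trained with backpropagation on cross-entropy loss. The hidden-layer weights are hypothetical values standing in for what RBM pre-training might produce, and backpropagation is one common fine-tuning method, not necessarily the exact procedure described in the video. The labeled set has only one example per class.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def softmax(z):
    m = max(z)  # subtract the max for numerical stability
    e = [math.exp(zi - m) for zi in z]
    s = sum(e)
    return [ei / s for ei in e]


# Hypothetical weights standing in for a pre-trained RBM layer (6 visible -> 3 hidden):
# each hidden unit already responds to one pair of active inputs.
W1 = [[4.0, -2.0, -2.0], [4.0, -2.0, -2.0],
      [-2.0, 4.0, -2.0], [-2.0, 4.0, -2.0],
      [-2.0, -2.0, 4.0], [-2.0, -2.0, 4.0]]
b1 = [-2.0, -2.0, -2.0]
# New output layer (3 hidden -> 3 classes), initialised to zero before fine-tuning.
W2 = [[0.0] * 3 for _ in range(3)]
b2 = [0.0] * 3


def forward(v):
    h = [sigmoid(b1[j] + sum(v[i] * W1[i][j] for i in range(6))) for j in range(3)]
    p = softmax([b2[k] + sum(h[j] * W2[j][k] for j in range(3)) for k in range(3)])
    return h, p


def fine_tune_step(v, label, lr=0.5):
    """One backpropagation step on cross-entropy loss that updates BOTH the new
    output layer and the pre-trained hidden layer."""
    h, p = forward(v)
    # Gradient of cross-entropy w.r.t. the output pre-activations: p - one_hot(label).
    d_out = [p[k] - (1.0 if k == label else 0.0) for k in range(3)]
    # Backpropagate into the hidden layer through the sigmoid derivative h * (1 - h).
    d_hid = [h[j] * (1 - h[j]) * sum(W2[j][k] * d_out[k] for k in range(3)) for j in range(3)]
    for j in range(3):
        for k in range(3):
            W2[j][k] -= lr * h[j] * d_out[k]
    for k in range(3):
        b2[k] -= lr * d_out[k]
    for i in range(6):
        for j in range(3):
            W1[i][j] -= lr * v[i] * d_hid[j]
    for j in range(3):
        b1[j] -= lr * d_hid[j]


# A deliberately tiny labeled set: one example per class.
labeled = [([1, 1, 0, 0, 0, 0], 0), ([0, 0, 1, 1, 0, 0], 1), ([0, 0, 0, 0, 1, 1], 2)]
for _ in range(100):
    for v, y in labeled:
        fine_tune_step(v, y)

predictions = [max(range(3), key=lambda k: forward(v)[1][k]) for v, _ in labeled]
print(predictions)  # [0, 1, 2]
```

Because the hidden layer already separates the three patterns, three labeled examples are enough for the output layer to learn which name belongs to which pattern.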

Why does a DBN require only a small set of labeled data for fine-tuning?

-Because the DBN's unsupervised pre-training phase allows it to learn useful features from a large, unlabeled dataset, the model requires fewer labeled examples to fine-tune and associate these features with specific classes.

How do RBMs in a DBN differ from layers in convolutional networks?

-In a convolutional network, early layers detect small local patterns and later layers combine them. Each RBM in a DBN instead models the entire input, and the model's understanding of the whole input is refined as each successive layer is trained.

What is the significance of treating the hidden layer of an RBM as the visible layer for the next RBM in a DBN?

-Treating the hidden layer of one RBM as the visible layer for the next allows each RBM to learn progressively higher-level representations of the input data, forming a hierarchical structure that improves the model's ability to capture complex patterns.

How does the DBN training process mimic the way a camera lens focuses an image?

-The DBN training process is analogous to a camera lens focusing an image because it gradually refines the entire input data over several layers, much like a lens improving the clarity of an image step by step.

What are the advantages of using GPUs in training DBNs?

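-GPUs speed up DBN training by carrying out the many multiply-add operations inside each layer (the matrix products over all units and all examples in a mini-batch) in parallel. This shortens both the layer-wise unsupervised pre-training and the supervised fine-tuning, although the layers themselves are still pre-trained one after another.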
