Introduction to Clustering

Summary

TLDRIn this video, Arham introduces the concept of clustering, an unsupervised learning technique used to group similar data points. The video explains four types of clustering methods: centroid-based (e.g., K-Means), connectivity-based (hierarchical clusters), distribution-based (e.g., expectation maximization), and density-based (focusing on dense regions). The video emphasizes the importance of defining the number of clusters and addresses the sensitivity of clustering methods to outliers. It also briefly touches on the use of clustering in real-world applications like Google searches and product recommendations. Overall, the video offers a comprehensive overview of clustering techniques and their applications.

Takeaways

- 😀 Clustering is an unsupervised learning technique that groups similar data points together based on their characteristics.

- 😀 A cluster is a set of data points that are more similar to each other than to points in other clusters.

- 😀 Clustering does not require a response class, unlike other machine learning techniques.

- 😀 Numeric data is essential for clustering; categorical variables must be converted to numeric form through techniques like one-hot encoding.

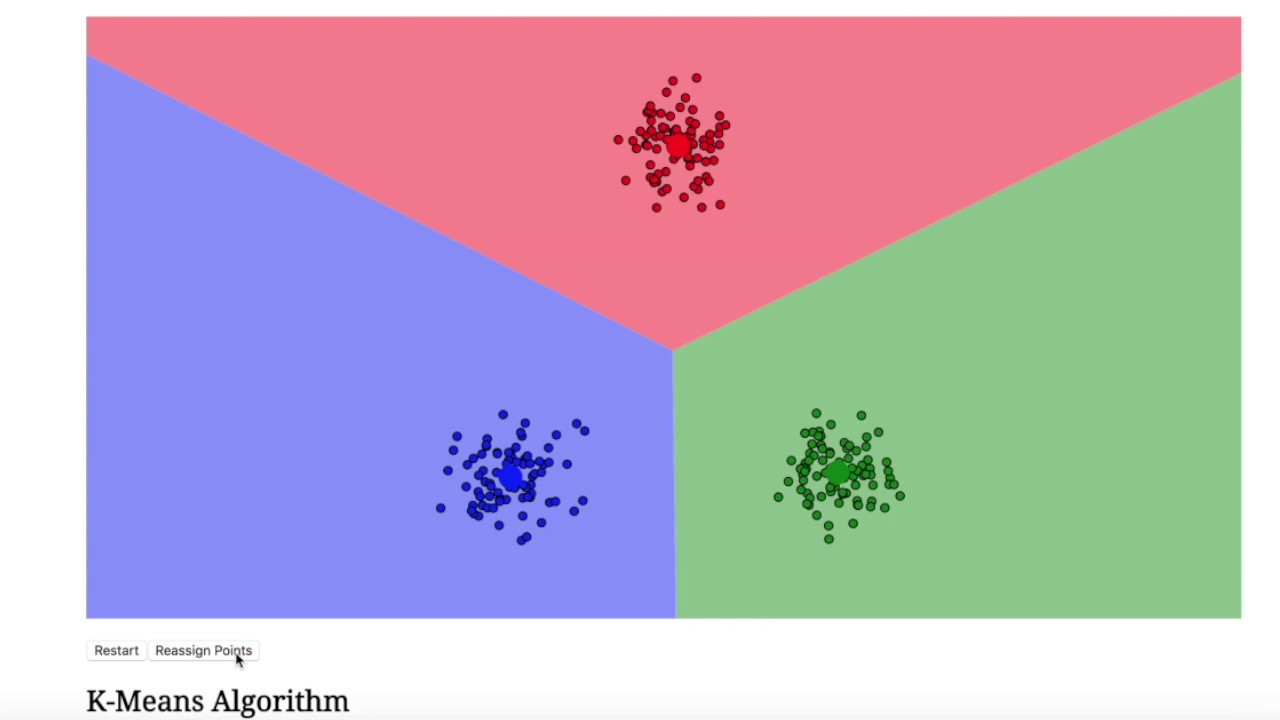

- 😀 Centroid-based clustering uses a centroid to represent each cluster, and a well-known example is the K-Means algorithm.

- 😀 K-Means clustering iteratively minimizes the distance between data points and centroids, but the number of clusters (K) needs to be predefined by the user.

- 😀 Connectivity-based clustering groups data points based on their proximity to one another, and can be either bottom-up or top-down, with clusters shown in a dendrogram.

- 😀 Distribution-based clustering assumes that data points within a cluster follow a normal distribution, and an example of this method is the Expectation Maximization (EM) algorithm.

- 😀 Density-based clustering defines clusters as areas of concentrated data points and is less sensitive to outliers than other methods.

- 😀 Clustering algorithms are sensitive to outliers, which can distort the results, and the elbow method can be used to estimate the optimal number of clusters.

- 😀 Clustering is widely used in real-world applications like search engines, recommendation systems, and market segmentation to group similar data points or items together.

Q & A

What is clustering in the context of data analysis?

-Clustering is the process of grouping data points into partitions based on their similarity, where points within the same group are more similar to each other than to those in other groups.

How does clustering relate to the way we perceive the world?

-Clustering is similar to how we perceive clusters in nature, such as groups of stars in the night sky. These groupings share similar characteristics and are named or recognized based on those similarities.

What distinguishes clustering from other machine learning techniques?

-Clustering is an unsupervised learning technique, meaning it does not rely on predefined labels or a response class. It groups data based on similarity without prior knowledge of the outcome.

Why must categorical variables be converted to numeric form in clustering?

-Clustering algorithms require data in numeric form to calculate similarities between data points. Categorical data must be transformed, often using techniques like one-hot encoding, to allow clustering algorithms to process it.

What is centroid-based clustering and how does it work?

-Centroid-based clustering involves representing each cluster by a centroid, or center. Clusters are formed by calculating the distance from data points to the centroid. K-Means is a popular algorithm that uses this method to assign points to the nearest centroid.

What is the role of the 'K' value in K-Means clustering?

-The 'K' in K-Means refers to the number of clusters the user wants to create. It is a predefined value, and the algorithm iterates to find the optimal centroids for the chosen number of clusters.

How does connectivity-based clustering differ from centroid-based clustering?

-Connectivity-based clustering defines clusters by grouping nearby points based on distance, often forming a hierarchical structure. Unlike centroid-based clustering, it doesn't rely on a central point, and clusters can contain other clusters.

What is a dendrogram in connectivity-based clustering?

-A dendrogram is a tree-like diagram that visually represents the hierarchical structure of clusters in connectivity-based clustering. It shows how clusters are formed or split at each stage of the algorithm.

How does distribution-based clustering work?

-Distribution-based clustering assumes that each cluster follows a normal distribution. The algorithm uses probability to determine which data points belong to the same distribution, optimizing the clusters based on this probability.

What is density-based clustering, and how does it handle sparse data?

-Density-based clustering groups data points based on areas of high point density. It identifies dense regions as clusters and considers sparse areas as noise or boundaries between clusters, which helps handle irregularly shaped clusters.

Outlines

Этот раздел доступен только подписчикам платных тарифов. Пожалуйста, перейдите на платный тариф для доступа.

Перейти на платный тарифMindmap

Этот раздел доступен только подписчикам платных тарифов. Пожалуйста, перейдите на платный тариф для доступа.

Перейти на платный тарифKeywords

Этот раздел доступен только подписчикам платных тарифов. Пожалуйста, перейдите на платный тариф для доступа.

Перейти на платный тарифHighlights

Этот раздел доступен только подписчикам платных тарифов. Пожалуйста, перейдите на платный тариф для доступа.

Перейти на платный тарифTranscripts

Этот раздел доступен только подписчикам платных тарифов. Пожалуйста, перейдите на платный тариф для доступа.

Перейти на платный тарифПосмотреть больше похожих видео

5.0 / 5 (0 votes)