Key Value Cache in Large Language Models Explained

Summary

TLDR: In this video, the speaker introduces key-value caching as a crucial concept in large language models like Llama 2 and Llama 3. They explain the inference strategy, highlighting how caching removes redundant calculations during token-by-token generation. By walking through the attention mechanism, the speaker shows that only the newest token's query, key, and value need to be computed at each step, while the keys and values of earlier tokens can be stored and reused. The video also covers the coding side, showing how to implement key-value caching in a transformer architecture, with references to existing models for further exploration.

Takeaways

- 😀 Key-value caching is an inference-time optimization used in large language models such as Llama 2 and Llama 3.

- 😀 During token-by-token generation, the keys and values of tokens that have already been processed do not change, so recomputing them at every step is redundant.

- 😀 Only the newest token needs fresh query, key, and value computations; everything earlier can be read back from the cache.

- 😀 The cache can be implemented in PyTorch as tensors for keys and values that are initialized up front, updated at each step, and read back during attention.

- 😀 The video relates caching to multi-head attention, where each head has its own keys and values.

- 😀 Rotary embeddings (RoPE) are mentioned as the way positional information is incorporated.

- 😀 Existing models such as Llama 2 are pointed to as references for further exploration.

Q & A

What is key-value caching, and why is it important in large language models?

-Key-value caching is a technique used to optimize the inference process in large language models by storing previously computed key and value pairs. This reduces redundant calculations for tokens that have already been processed, leading to faster and more efficient inference.

How does the attention mechanism function in the context of key-value caching?

-In the attention mechanism, each token's query is compared against the keys of the tokens it can attend to, and the resulting weights are used to average the corresponding values. Key-value caching retains the keys and values of previous tokens, so at each step the model computes projections only for the new token and attends over the cached entries.
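
For reference, one decoding step with a cache can be written in standard scaled dot-product notation. The symbols below ($x_t$ for the input of the newest token, $W_Q, W_K, W_V$ for the projection matrices, $d_k$ for the head dimension) are conventional notation assumed here, not taken from the video:

```latex
\begin{aligned}
q_t &= x_t W_Q, \qquad k_t = x_t W_K, \qquad v_t = x_t W_V \\
K_{1:t} &= \begin{bmatrix} K_{1:t-1} \\ k_t \end{bmatrix}, \qquad
V_{1:t} = \begin{bmatrix} V_{1:t-1} \\ v_t \end{bmatrix} \\
o_t &= \operatorname{softmax}\!\left( \frac{q_t K_{1:t}^{\top}}{\sqrt{d_k}} \right) V_{1:t}
\end{aligned}
```

Only $q_t$, $k_t$, and $v_t$ are computed at step $t$; $K_{1:t-1}$ and $V_{1:t-1}$ come from the cache.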

What problem does key-value caching solve during the inference phase?

-Without a cache, every generation step recomputes the key and value projections for all earlier tokens, even though they have not changed. Key-value caching skips that work: only the newest token is projected and the rest is read from the cache, which significantly speeds up inference.

What are the steps involved in implementing key-value caching in a model like LLaMA 2?

-Implementing key-value caching involves defining cache structures for keys and values, writing each new token's keys and values into the cache at its position, and reading the stored entries back in later steps instead of recomputing them.

What coding techniques are suggested for effectively utilizing key-value caching?

-The video suggests using PyTorch to define cache keys and values as tensors. It includes examples of how to initialize these caches, update them during inference, and efficiently retrieve stored key and value pairs.
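
The initialize, update, and retrieve steps can be sketched as follows. This is a minimal illustration, not the video's code: the `KVCache` class, the `attend` helper, and all shapes are assumptions, and the preallocate-then-slice pattern only loosely resembles what public Llama reference code does. Random tensors stand in for real query, key, and value projections.

```python
import torch


class KVCache:
    """Preallocated key/value cache for one attention layer (illustrative)."""

    def __init__(self, max_batch: int, max_seq_len: int, n_kv_heads: int, head_dim: int):
        shape = (max_batch, max_seq_len, n_kv_heads, head_dim)
        self.cache_k = torch.zeros(shape)
        self.cache_v = torch.zeros(shape)

    def update(self, start_pos: int, k: torch.Tensor, v: torch.Tensor):
        """Write new keys/values at start_pos and return everything cached so far."""
        bsz, seq_len = k.shape[0], k.shape[1]
        end = start_pos + seq_len
        self.cache_k[:bsz, start_pos:end] = k
        self.cache_v[:bsz, start_pos:end] = v
        return self.cache_k[:bsz, :end], self.cache_v[:bsz, :end]


def attend(q: torch.Tensor, keys: torch.Tensor, values: torch.Tensor) -> torch.Tensor:
    """Attention for the newest token only; q has shape (bsz, 1, heads, dim)."""
    q = q.transpose(1, 2)            # (bsz, heads, 1, dim)
    keys = keys.transpose(1, 2)      # (bsz, heads, t, dim)
    values = values.transpose(1, 2)  # (bsz, heads, t, dim)
    scores = q @ keys.transpose(-2, -1) / keys.shape[-1] ** 0.5
    out = torch.softmax(scores, dim=-1) @ values  # (bsz, heads, 1, dim)
    return out.transpose(1, 2)       # (bsz, 1, heads, dim)


# Usage: decode 5 tokens one at a time with random stand-in projections.
torch.manual_seed(0)
bsz, steps, n_heads, head_dim = 2, 5, 4, 8
q = torch.randn(bsz, steps, n_heads, head_dim)
k = torch.randn(bsz, steps, n_heads, head_dim)
v = torch.randn(bsz, steps, n_heads, head_dim)

cache = KVCache(max_batch=bsz, max_seq_len=16, n_kv_heads=n_heads, head_dim=head_dim)
for t in range(steps):
    # Only the token at position t is "projected"; earlier entries come from the cache.
    keys, values = cache.update(t, k[:, t:t + 1], v[:, t:t + 1])
    out = attend(q[:, t:t + 1], keys, values)
```

Preallocating to a maximum sequence length avoids growing a tensor at every step, at the cost of reserving memory up front.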

Why is it unnecessary to calculate attention values for all tokens during inference?

-During inference, the keys and values of previously processed tokens do not change as new tokens are appended, so they do not need to be recalculated. The model computes the query, key, and value only for the newest token and reuses the cached entries for everything earlier.
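
A rough count makes the redundancy concrete. The sketch below is illustrative arithmetic for one layer and one head, assuming generation starts from a single token; the function name `kv_projections` is hypothetical.

```python
def kv_projections(num_steps: int, use_cache: bool) -> int:
    """Count key and value projections computed over num_steps decoding steps.

    Each token that gets projected costs one key and one value projection.
    """
    total = 0
    for step in range(1, num_steps + 1):
        # Without a cache, all `step` tokens seen so far are projected again.
        tokens_projected = 1 if use_cache else step
        total += 2 * tokens_projected
    return total


print(kv_projections(100, use_cache=False))  # 10100
print(kv_projections(100, use_cache=True))   # 200
```

The uncached count grows quadratically with the number of generated tokens, while the cached count grows linearly.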

How does the concept of multi-head attention relate to key-value caching?

-In multi-head attention, each head has its own keys and values, so the cache stores an entry per head. Sharing key and value projections across heads is a separate technique (multi-query or grouped-query attention, which the larger Llama 2 models use); it combines well with caching because fewer key-value heads mean a smaller cache and less duplicated memory.
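
To see how the number of key-value heads affects memory, here is a back-of-the-envelope sketch. The helper `kv_cache_bytes` is hypothetical, and the configuration (80 layers, head dimension 128, 64 query heads, 8 shared key-value heads, 16-bit values, 4096-token context) is an assumption chosen to resemble a large Llama 2 model, not a figure from the video.

```python
def kv_cache_bytes(n_layers: int, n_kv_heads: int, head_dim: int,
                   seq_len: int, batch_size: int = 1, bytes_per_value: int = 2) -> int:
    """Size of the key/value cache in bytes; the factor 2 covers keys plus values."""
    return 2 * n_layers * n_kv_heads * head_dim * seq_len * batch_size * bytes_per_value


# One key-value head per query head (assumed 64 heads).
per_head = kv_cache_bytes(n_layers=80, n_kv_heads=64, head_dim=128, seq_len=4096)
# Key-value heads shared across groups of query heads (assumed 8 key-value heads).
shared = kv_cache_bytes(n_layers=80, n_kv_heads=8, head_dim=128, seq_len=4096)

print(per_head / 2**30)  # 10.0 (GiB)
print(shared / 2**30)    # 1.25 (GiB)
```

Under these assumptions, sharing key-value heads cuts the cache for one 4096-token sequence by a factor of eight.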

What is the significance of the LLaMA 2 paper mentioned in the video?

-The LLaMA 2 paper provides detailed insights into the implementation of key-value caching within its architecture, highlighting its role in improving inference speed and efficiency in large language models.

Can you explain the term 'rotary embeddings' mentioned in the transcript?

-Rotary embeddings, also referred to as RoPE, incorporate positional information by rotating the query and key vectors by an angle that depends on each token's position. This preserves the order of tokens in a sequence, which is crucial for understanding context in language processing.
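
A small standard-library sketch of the rotation idea follows. It assumes the adjacent-pair convention and a base of 10000; the function names `rope` and `dot` are hypothetical, and real implementations operate on batched tensors rather than Python lists.

```python
import cmath


def rope(vec, pos, base=10000.0):
    """Rotate each adjacent pair of vec by an angle proportional to pos."""
    dim = len(vec)
    out = []
    for i in range(0, dim, 2):
        theta = base ** (-i / dim)  # rotation frequency for this pair
        z = complex(vec[i], vec[i + 1]) * cmath.exp(1j * pos * theta)
        out.extend([z.real, z.imag])
    return out


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


q = [0.5, -1.0, 2.0, 0.3]
k = [1.5, 0.2, -0.7, 1.1]

# Same relative offset (2 positions apart) at different absolute positions.
score_near = dot(rope(q, 5), rope(k, 3))
score_far = dot(rope(q, 12), rope(k, 10))
print(abs(score_near - score_far) < 1e-9)  # True
```

Because the query-key score depends only on the relative offset between positions, a key that was rotated when its token was first processed can be stored and reused as later tokens arrive.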

What resources does the speaker recommend for further exploration of the topic?

-The speaker encourages viewers to check the description for pinned resources related to key-value caching and invites suggestions for future video topics, fostering ongoing engagement and learning.

Outlines

Этот раздел доступен только подписчикам платных тарифов. Пожалуйста, перейдите на платный тариф для доступа.

Перейти на платный тарифMindmap

Этот раздел доступен только подписчикам платных тарифов. Пожалуйста, перейдите на платный тариф для доступа.

Перейти на платный тарифKeywords

Этот раздел доступен только подписчикам платных тарифов. Пожалуйста, перейдите на платный тариф для доступа.

Перейти на платный тарифHighlights

Этот раздел доступен только подписчикам платных тарифов. Пожалуйста, перейдите на платный тариф для доступа.

Перейти на платный тарифTranscripts

Этот раздел доступен только подписчикам платных тарифов. Пожалуйста, перейдите на платный тариф для доступа.

Перейти на платный тарифПосмотреть больше похожих видео

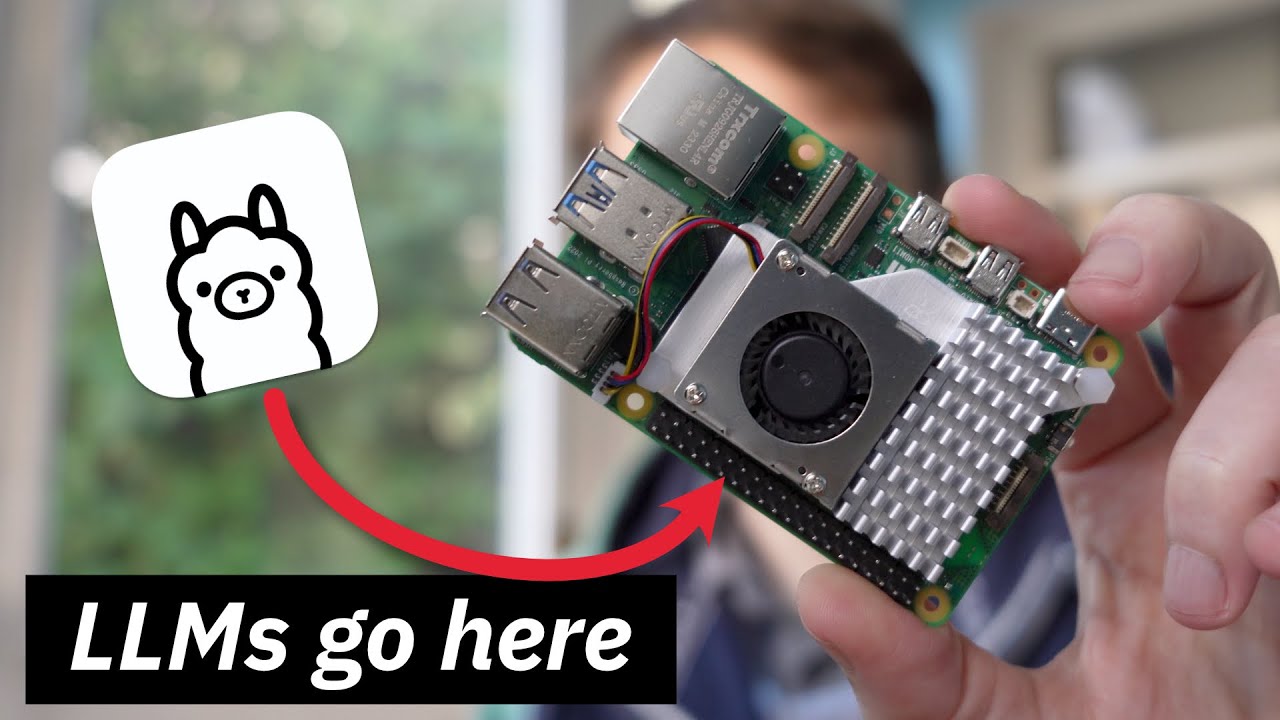

Using Ollama to Run Local LLMs on the Raspberry Pi 5

Phi-3-mini vs Llama 3 Showdown: Testing the real world applications of small LLMs

Should You Use Open Source Large Language Models?

Llama Index ( GPT Index) step by step introduction

LLAMA 3.1 405b VS GROK-2 (Coding, Logic & Reasoning, Math) #llama3 #grok2 #local #opensource #grok-2

Llama 3.2 is INSANE - But Does it Beat GPT as an AI Agent?

5.0 / 5 (0 votes)