Gaussian Naive Bayes, Clearly Explained!!!

Summary

TLDR: In this StatQuest video, Josh Starmer explains Gaussian Naive Bayes with an example that predicts whether someone will love the movie 'Troll 2' from how much popcorn, soda, and candy they consume. He walks through calculating likelihoods from Gaussian distributions and taking logs to avoid underflow. The video also shows how a single feature, here candy consumption, can dominate the prediction. Josh encourages viewers to learn about cross-validation for deciding which features to use, and promotes study guides and other resources for learning more. Engaging and educational, the video makes machine learning concepts accessible.

Takeaways

- 🎥 The video introduces Gaussian Naive Bayes and assumes prior knowledge of multinomial Naive Bayes, the log function, the Gaussian (normal) distribution, and the difference between probability and likelihood.

- 🍿 The example scenario predicts whether someone loves the movie Troll 2 based on the amount of popcorn, soda, and candy they consume.

- 📊 A separate Gaussian distribution is fit to each feature (popcorn, soda, candy) for the people who love Troll 2 and for the people who do not.

- 🔍 The prior probabilities (initial guesses) that someone loves or does not love Troll 2 are both 0.5, estimated from the proportion of each group in the training data.

- 💡 For a new person, the likelihood of each measurement is read off the matching Gaussian curve, and these likelihoods are multiplied together with the prior probability for each class.

- 🔢 To prevent underflow when working with very small likelihoods, the log function is used, turning the product of likelihoods into a sum of their logs.

- 🧮 After taking the log, scores are calculated for both 'loves Troll 2' and 'does not love Troll 2', with the final classification based on the larger score.

- 🍭 The large difference in candy consumption was the deciding factor in classifying the new person as someone who does not love Troll 2.

- ⚖️ The video suggests that cross-validation can help determine which variables (popcorn, soda, candy) are most important for classification.

- 📚 The presenter promotes StatQuest study guides, which offer more detailed explanations on Gaussian Naive Bayes and other topics.

Q & A

What is Gaussian Naive Bayes?

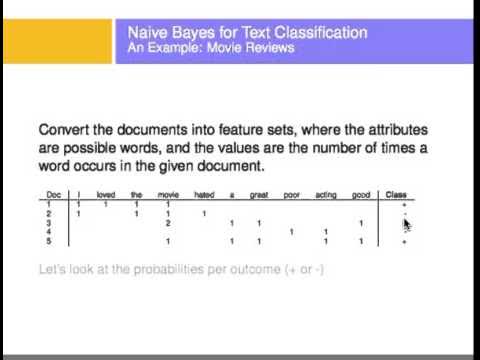

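- Gaussian Naive Bayes is a Naive Bayes classifier for continuous features. It assumes that, within each class, every feature follows a normal (Gaussian) distribution, and (the "naive" part) that the features are independent of one another given the class. It suits classification tasks where each feature can reasonably be modeled with a Gaussian distribution.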
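
A minimal from-scratch sketch of this idea, assuming each feature gets its own Gaussian per class (mean and sample standard deviation estimated from the training rows) and that the per-feature likelihoods are combined independently and scored in log space. The function names `fit` and `predict` and all of the training numbers (including the units) are hypothetical, not taken from the video.

```python
import math
from statistics import mean, stdev

def gaussian_pdf(x, mu, sigma):
    """Height of the normal curve N(mu, sigma^2) at x, i.e. the likelihood of x."""
    return math.exp(-((x - mu) ** 2) / (2 * sigma ** 2)) / (sigma * math.sqrt(2 * math.pi))

def fit(rows, labels):
    """Estimate a prior and a per-feature (mean, stdev) for each class."""
    model = {}
    for cls in set(labels):
        cls_rows = [r for r, y in zip(rows, labels) if y == cls]
        columns = list(zip(*cls_rows))  # one tuple of values per feature
        model[cls] = {
            "prior": len(cls_rows) / len(rows),
            "params": [(mean(col), stdev(col)) for col in columns],
        }
    return model

def predict(model, x):
    """Return the class with the largest log(prior) + sum of log-likelihoods."""
    def score(cls):
        m = model[cls]
        return math.log(m["prior"]) + sum(
            math.log(gaussian_pdf(v, mu, sd)) for v, (mu, sd) in zip(x, m["params"])
        )
    return max(model, key=score)

# Hypothetical training data: (popcorn g, soda ml, candy g) per person.
rows = [
    (24, 750, 0), (28, 600, 2), (30, 800, 1), (22, 700, 3),   # loves Troll 2
    (4, 300, 30), (6, 200, 45), (2, 250, 40), (5, 350, 35),   # does not love Troll 2
]
labels = ["loves"] * 4 + ["does not love"] * 4

model = fit(rows, labels)
print(predict(model, (20, 500, 25)))  # prints "does not love"
```

The new person's candy value sits far out in the tail of the "loves" candy curve, which drags that class's score down much more than popcorn and soda drag down the other class.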

What assumptions does this tutorial make?

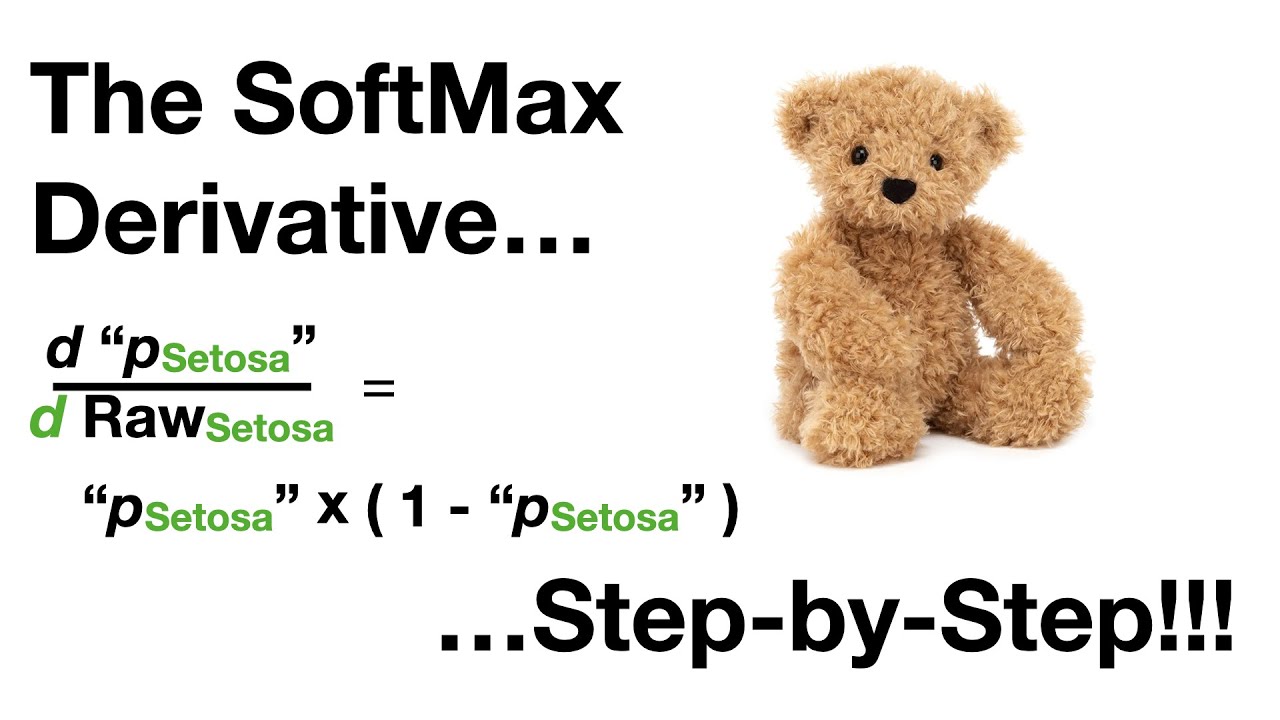

- The tutorial assumes familiarity with multinomial Naive Bayes, the log function, the normal (Gaussian) distribution, and the difference between probability and likelihood.

What example is used to explain Gaussian Naive Bayes in the video?

- The example predicts whether someone will love the 1990 movie 'Troll 2' based on how much popcorn, soda pop, and candy they consume daily.

What are the prior probabilities in Gaussian Naive Bayes?

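- The prior probabilities are the initial guesses of whether someone loves or does not love 'Troll 2,' made before considering the new person's popcorn, soda, and candy measurements. They are estimated from the training data; in the example, both priors are 0.5, so the two outcomes start out equally probable.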
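
A common way to estimate these priors is the fraction of training examples in each class; with equal class counts, both come out to 0.5. A short sketch, where the helper `class_priors` and the 8/8 split of labels are hypothetical:

```python
from collections import Counter

def class_priors(labels):
    """Estimate P(class) as the fraction of training labels in each class."""
    counts = Counter(labels)
    total = len(labels)
    return {cls: n / total for cls, n in counts.items()}

# Hypothetical training labels with equal numbers of each class.
labels = ["loves"] * 8 + ["does not love"] * 8
print(class_priors(labels))  # {'loves': 0.5, 'does not love': 0.5}
```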

What role does likelihood play in Gaussian Naive Bayes?

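- Likelihood measures how well an observed value fits each class's Gaussian distribution for that feature (popcorn, soda, or candy consumption). Concretely, the likelihood of a measurement is the height of the corresponding Gaussian curve at that value, computed separately for 'loves Troll 2' and 'does not love Troll 2.'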
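
For a Gaussian feature, the likelihood is the height of the class's normal curve at the observed value. Because that height is a density rather than a probability, it can exceed 1 for a narrow curve. A sketch with hypothetical candy parameters for each class (the means and standard deviations are made up for illustration):

```python
import math

def gaussian_likelihood(x, mu, sigma):
    """Height of the normal curve with mean mu and standard deviation sigma at x."""
    coeff = 1.0 / (sigma * math.sqrt(2.0 * math.pi))
    return coeff * math.exp(-((x - mu) ** 2) / (2.0 * sigma ** 2))

# Hypothetical class-specific (mean, std dev) for candy, in grams.
candy_loves = (1.5, 1.3)   # people who love Troll 2
candy_not = (37.5, 6.5)    # people who do not love Troll 2

x = 25.0
print(gaussian_likelihood(x, *candy_loves))  # tiny: x is far from this curve's center
print(gaussian_likelihood(x, *candy_not))    # much larger: x is near this curve's center

# A narrow curve is taller than 1 at its center, so a likelihood is not a probability.
print(gaussian_likelihood(0.0, 0.0, 0.1))
```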

Why is the log function used in this classification process?

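- The log function is used to prevent underflow. Multiplying many small likelihoods can produce a number smaller than the computer can represent, which gets rounded to zero and makes the classes impossible to compare. Taking the log turns the product of likelihoods into a sum of log-likelihoods, which stays in a representable range. Because the log is monotonically increasing, the class with the larger product also has the larger log score, so the classification is unchanged.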
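
A small demonstration of the underflow problem, assuming 400 hypothetical likelihoods per class (far more factors than the three in the video, chosen to force underflow in 64-bit floats). Both products round to 0.0, while the sums of logs still rank the classes.

```python
import math

def log_sum(values):
    """Sum of natural logs, i.e. the log of the product without forming the product."""
    return sum(math.log(v) for v in values)

# Hypothetical likelihoods for two classes: 400 small values each.
class_a = [1e-3] * 400
class_b = [2e-3] * 400

# Products fall below the smallest representable double and become 0.0.
print(math.prod(class_a), math.prod(class_b))  # 0.0 0.0

# The log sums stay finite and still show that class_b is more likely.
print(log_sum(class_a) < log_sum(class_b))  # True
```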

How is the final classification made in this example?

- The final classification is made by comparing the scores for 'loves Troll 2' and 'does not love Troll 2,' where each score is the log of the prior plus the logs of the feature likelihoods. In this case, the score for 'does not love Troll 2' is higher, so the new person is classified as not loving the movie.
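
The decision rule can be sketched as follows, assuming made-up priors and per-feature likelihoods rather than the values from the video. It also checks that ranking by log score picks the same class as ranking by the raw product, since the log is monotonically increasing.

```python
import math

# Hypothetical priors and per-feature likelihoods (popcorn, soda, candy).
priors = {"loves": 0.5, "does not love": 0.5}
likelihoods = {
    "loves": [0.06, 0.004, 1e-9],
    "does not love": [0.0002, 0.001, 0.05],
}

def log_score(cls):
    """log(prior) + sum of log-likelihoods for one class."""
    return math.log(priors[cls]) + sum(math.log(p) for p in likelihoods[cls])

scores = {cls: log_score(cls) for cls in priors}
prediction = max(scores, key=scores.get)
print(prediction)  # does not love

# Same winner as comparing raw products, because log preserves order.
raw = {cls: priors[cls] * math.prod(likelihoods[cls]) for cls in priors}
assert max(raw, key=raw.get) == prediction
```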

What factor had the most influence on the classification decision?

- Candy consumption had the most influence: the log-likelihoods for candy differed far more between the two classes than those for popcorn and soda, so candy outweighed the other features.
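
One way to see which feature drives the decision is to compare, feature by feature, the log-likelihood under each class: the feature with the largest gap contributes most to the difference between the two scores. A sketch with hypothetical likelihoods for one new person:

```python
import math

features = ["popcorn", "soda", "candy"]
# Hypothetical likelihoods of one new person's measurements under each class.
loves = [0.06, 0.004, 1e-9]
not_loves = [0.0002, 0.001, 0.05]

# Positive gap favors "does not love"; negative gap favors "loves".
gaps = {
    f: math.log(n) - math.log(l)
    for f, l, n in zip(features, loves, not_loves)
}
for f, g in gaps.items():
    print(f"{f:8s} {g:+.2f}")

dominant = max(gaps, key=lambda f: abs(gaps[f]))
print("largest influence:", dominant)  # largest influence: candy
```

Here popcorn and soda both lean toward "loves," but the candy gap is large enough to outweigh them combined.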

What is cross-validation, and how does it apply to this example?

- Cross-validation assesses how well a model performs on data it was not trained on by repeatedly splitting the data into training and testing portions and combining the results. In this example, it could be used to compare models built from different combinations of features (popcorn, soda, candy) to determine which features matter most for accurate classification.
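
A from-scratch sketch of using k-fold cross-validation to compare feature subsets. It assumes stratified 4-fold splitting, a compact Gaussian Naive Bayes that scores in log space, and hypothetical data in which candy separates the classes while popcorn and soda overlap; all names and numbers are illustrative. In practice, scikit-learn's `GaussianNB` and `cross_val_score` provide ready-made equivalents.

```python
import math
from collections import Counter
from statistics import mean, stdev

FEATURES = ["popcorn", "soda", "candy"]

# Hypothetical data (popcorn g, soda ml, candy g); not the video's numbers.
rows = [
    (24, 750, 0), (28, 600, 2), (30, 800, 1), (22, 700, 3),
    (18, 650, 0), (26, 500, 1), (20, 720, 2), (25, 680, 1),
    (20, 600, 30), (26, 550, 45), (22, 700, 40), (18, 650, 35),
    (24, 720, 38), (27, 580, 42), (21, 690, 33), (23, 610, 36),
]
labels = ["loves"] * 8 + ["does not love"] * 8

def log_gaussian(x, mu, sd):
    """Log of the normal density, computed directly so it never underflows."""
    return -((x - mu) ** 2) / (2 * sd ** 2) - math.log(sd * math.sqrt(2 * math.pi))

def fit(X, y):
    """Per class: log prior and a (mean, stdev) pair for each feature."""
    model = {}
    for cls in set(y):
        cls_rows = [r for r, lab in zip(X, y) if lab == cls]
        model[cls] = (
            math.log(len(cls_rows) / len(X)),
            [(mean(col), stdev(col)) for col in zip(*cls_rows)],
        )
    return model

def predict(model, x):
    """Class with the largest log prior plus summed log-likelihoods."""
    def score(cls):
        log_prior, params = model[cls]
        return log_prior + sum(log_gaussian(v, mu, sd) for v, (mu, sd) in zip(x, params))
    return max(model, key=score)

def stratified_folds(y, k):
    """Assign each index to one of k folds, round-robin within each class."""
    seen = Counter()
    folds = []
    for lab in y:
        folds.append(seen[lab] % k)
        seen[lab] += 1
    return folds

def cv_accuracy(feature_idx, k=4):
    """Fraction of held-out points classified correctly across all k folds."""
    X = [tuple(r[i] for i in feature_idx) for r in rows]
    folds = stratified_folds(labels, k)
    correct = 0
    for f in range(k):
        train = [i for i in range(len(X)) if folds[i] != f]
        test = [i for i in range(len(X)) if folds[i] == f]
        model = fit([X[i] for i in train], [labels[i] for i in train])
        correct += sum(predict(model, X[i]) == labels[i] for i in test)
    return correct / len(X)

for subset in [(0, 1), (2,), (0, 1, 2)]:
    names = ", ".join(FEATURES[i] for i in subset)
    print(f"{names:22s} accuracy = {cv_accuracy(subset):.2f}")
```

On this made-up data the candy-only row reports an accuracy of 1.00, since the two classes' candy values do not overlap; with real data, it is the relative comparison between subsets that guides feature selection.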

What does the video suggest for further study on this topic?

- The video suggests checking out StatQuest's study guides, which provide detailed explanations and are useful for exam preparation or job interviews. It also recommends learning about cross-validation if the viewer isn't familiar with it.
