This is How I Scrape 99% of Sites

Summary

TLDRIn this video, the creator demonstrates a powerful method for scraping e-commerce sites by bypassing traditional HTML scraping and targeting backend APIs. They show how to identify key API endpoints using browser developer tools and how to use proxies to avoid getting blocked. The creator walks through the process of obtaining product data, including pricing and availability, by manipulating API requests. The video also covers coding techniques to automate data extraction using Python, with an emphasis on efficiency and ethical web scraping practices.

Takeaways

- 😀 Identify backend APIs instead of scraping raw HTML to efficiently extract data from e-commerce sites.

- 😀 Use browser developer tools (Network tab) to capture API requests and responses in JSON format for easy parsing.

- 😀 Focus on XHR (XMLHttpRequest) and JSON responses when analyzing network traffic for relevant product data.

- 😀 Use high-quality proxies, such as residential and mobile proxies, to avoid being blocked while scraping large amounts of data.

- 😀 Sticky sessions are useful for maintaining the same IP address for a specific period to avoid triggering anti-bot defenses.

- 😀 Utilize `curl-cffi` and `requests` libraries in Python to handle TLS fingerprinting and mimic real browser requests.

- 😀 Add custom headers, like a user-agent, to your requests to avoid detection as a bot and reduce the risk of being blocked.

- 😀 Model your scraped data with Pydantic models for structured, clean, and reusable code that can handle different data formats.

- 😀 Combine multiple API endpoints, such as search and product details, into a single workflow for more efficient data collection.

- 😀 Ethical scraping is important: avoid overloading servers and always ensure you are collecting publicly available data.

Q & A

Why is scraping HTML from e-commerce websites often ineffective for extracting product data?

-Scraping HTML is ineffective because e-commerce websites typically load product data dynamically through backend APIs, meaning the product data isn't directly available in the HTML. Instead, the data is populated through API calls that provide structured JSON responses.

How can you find the backend API for an e-commerce website?

-To find the backend API, open the browser’s developer tools, go to the 'Network' tab, and filter for XHR (XMLHttpRequest) requests. Look for responses in JSON format, which typically contain the product data you're interested in.

What are some key strategies to avoid being blocked while scraping data at scale?

-To avoid being blocked, use high-quality proxies to rotate IP addresses and prevent detection. Residential and mobile proxies are particularly effective at bypassing anti-bot measures, as they mimic real user traffic. Using sticky sessions is also recommended to hold onto a single IP address for a few minutes.

What is TLS fingerprinting, and how does it affect web scraping?

-TLS fingerprinting is a method used to detect and block automated scraping bots by analyzing patterns in secure connections. If a bot’s TLS fingerprint doesn't match that of a real browser, the request may be blocked, resulting in a 403 Forbidden error.

How can the 403 Forbidden error caused by TLS fingerprinting be overcome in web scraping?

-The 403 error caused by TLS fingerprinting can be overcome by using libraries like `curl-cffi`, which helps to mimic a real browser’s fingerprint and avoid detection. This allows the scraping script to make requests that appear to come from an actual user.

What are the benefits of using proxy services like Proxy Scrape for web scraping?

-Proxy services like Proxy Scrape offer access to a large pool of high-quality, rotating IP addresses. This helps to prevent IP bans during large-scale scraping operations. They provide residential, mobile, and data center proxies with both rotating and sticky session options, ensuring anonymity and reliability.

What Python libraries are used in the script for making requests and handling JSON data?

-The script uses the `requests` library for making HTTP requests, and `curl-cffi` is used to handle TLS fingerprinting. For structuring and modeling data, the script uses the `Pydantic` library, while the `Rich` library is used for better output formatting.

What is the role of data modeling in web scraping projects?

-Data modeling is essential for organizing and structuring the scraped data, making it easier to manipulate and store. In the script, `Pydantic` models are used to create structured representations of product data, availability, and search results, allowing for cleaner and more manageable data processing.

How does the script handle pagination in API responses?

-The script handles pagination by modifying the `start` index in the API request URL. By changing this parameter, the script can query different pages of search results, enabling it to loop through all available items.

What ethical considerations should be kept in mind while scraping data from websites?

-When scraping, it's important to be considerate of the website's terms of service and avoid overloading the server with excessive requests. Use proxies to minimize the risk of being blocked, and ensure that the data you are scraping is publicly available. Always be mindful of the potential impact on the website’s performance.

Outlines

Этот раздел доступен только подписчикам платных тарифов. Пожалуйста, перейдите на платный тариф для доступа.

Перейти на платный тарифMindmap

Этот раздел доступен только подписчикам платных тарифов. Пожалуйста, перейдите на платный тариф для доступа.

Перейти на платный тарифKeywords

Этот раздел доступен только подписчикам платных тарифов. Пожалуйста, перейдите на платный тариф для доступа.

Перейти на платный тарифHighlights

Этот раздел доступен только подписчикам платных тарифов. Пожалуйста, перейдите на платный тариф для доступа.

Перейти на платный тарифTranscripts

Этот раздел доступен только подписчикам платных тарифов. Пожалуйста, перейдите на платный тариф для доступа.

Перейти на платный тарифПосмотреть больше похожих видео

🛑 Stop Making WordPress Affiliate Websites (DO THIS INSTEAD)

Industrial-scale Web Scraping with AI & Proxy Networks

Is Data Scraping Legal?

Scraping with Playwright 101 - Easy Mode

P2 | Web scraping with Python | Real-time price comparison from multiple eCommerce| Python projects

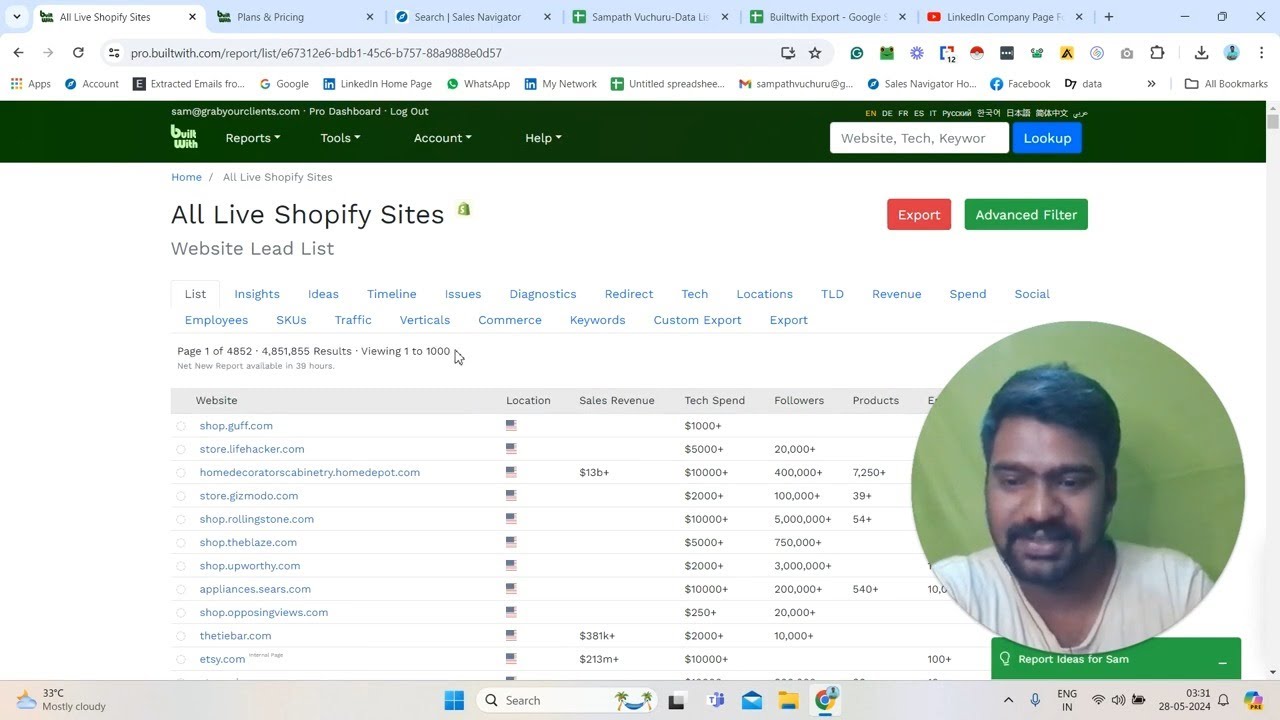

How to Use BuiltWith Pro for E-commerce Data | BuiltWith Pro Tutorial (2024)

5.0 / 5 (0 votes)