HUGE AI NEWS : MAJOR BREAKTHROUGH!, 2x Faster Inference Than GROQ, 3 NEW GEMINI Models!

Summary

TL;DR: This week in AI brought several notable advances. Google Research showed that a diffusion model can serve as a real-time game engine, generating gameplay frame by frame as it is played. Magic Labs unveiled LTM-2-mini, a model with a 100-million-token context window, far beyond what current models offer. Sam Altman announced that OpenAI will give the US AI Safety Institute access to upcoming models for pre-release testing, framing it as a matter of national leadership in AI safety. Bland AI demonstrated AI agents that handle phone calls, hinting at significant changes to the workforce. Finally, Google released three new experimental Gemini models, including an improved Gemini 1.5 Pro that is stronger on complex prompts and coding tasks.

Takeaways

- 😲 Google Research has shown that a diffusion model can act as a real-time game engine, generating gameplay (in this case, the classic shooter DOOM) frame by frame as it is played.

- 🌐 This points toward a future of simulated worlds that people can explore.

- 🚀 Magic Labs unveiled its LTM-2-mini model, which has a 100-million-token context window, roughly the length of 750 novels.

- 📚 The HashHop evaluation tests whether a model can retrieve information from anywhere in a very large context.

- 📈 Context lengths are growing exponentially, with models now handling up to 100 million tokens, far beyond previous limits.

- 🤖 Bland AI envisions a future in which AI agents handle millions of calls in any language, transforming customer service and enterprise operations.

- 💡 Prompting techniques can significantly improve AI performance; even adding emotional appeals to a prompt reportedly improves results.

- 🧠 Stephen Wolfram discusses the possibility that deep learning mechanisms may be too complex for humans to ever fully understand.

- 🔍 Cerebras Inference, a new inference service from the chipmaker Cerebras, reportedly runs about twice as fast as Groq, reaching roughly 1,800 tokens per second on Llama 3.1 8B.

- 🧪 Dario Amodei suggests AI could speed up biological discovery by 100x, potentially compressing decades of research into a few years.

Q & A

What is the significance of Google's research showing that diffusion models can act as real-time game engines?

-The research is significant because it shows that AI can generate interactive gameplay in real time, something previously considered science fiction. This has profound implications for future simulated worlds and interactive AI systems.

What does Magic Labs' LTM-2-mini breakthrough mean for AI technology?

-With a 100-million-token context window, LTM-2-mini represents a massive leap in AI's ability to take in and reason over large amounts of data at once. This could change how AI is used in many applications, particularly coding platforms, where understanding an entire large codebase is crucial.
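To get a feel for what a 100-million-token window means for a codebase, the sketch below estimates a repository's token count from its character count and checks it against that limit. It assumes a rough rule of thumb of about 4 characters per token; real tokenizers vary, and the extension list and function names are illustrative, not part of any Magic Labs tooling.

```python
import os

# Assumption: ~4 characters per token is only a rough rule of thumb;
# real tokenizers produce different counts.
CHARS_PER_TOKEN = 4
# Context window size as reported for LTM-2-mini.
LTM2_MINI_CONTEXT = 100_000_000

# Illustrative set of source-file extensions to count.
SOURCE_EXTENSIONS = {".py", ".js", ".ts", ".go", ".rs", ".java", ".c", ".cpp", ".h"}


def estimate_tokens(text: str, chars_per_token: int = CHARS_PER_TOKEN) -> int:
    """Rough token estimate from character count, rounded up."""
    return -(-len(text) // chars_per_token)


def estimate_repo_tokens(root: str) -> int:
    """Sum estimated tokens over all source files under `root`."""
    total = 0
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            if os.path.splitext(name)[1] in SOURCE_EXTENSIONS:
                path = os.path.join(dirpath, name)
                with open(path, encoding="utf-8", errors="ignore") as f:
                    total += estimate_tokens(f.read())
    return total


def fits_in_context(token_count: int, context: int = LTM2_MINI_CONTEXT) -> bool:
    """True if the estimated token count fits in the context window."""
    return token_count <= context
```

For example, `n = estimate_repo_tokens(".")` followed by `fits_in_context(n)` gives a rough answer for the current directory. By the same arithmetic, 100,000,000 tokens spread over 750 novels works out to about 133,000 tokens per novel.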

How does the HashHop challenge demonstrate the capabilities of Magic Labs' LTM-2-mini model?

-HashHop is designed to test whether a model can store and retrieve the maximum possible amount of information for a given context size. The context is filled with pairs of random hashes, and the model must return the hash paired with a randomly selected one, or follow a chain of several such pairs. Because the hashes are random, there are no semantic hints to exploit, so success depends on genuine long-context storage and retrieval.
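The task can be illustrated with a simplified, HashHop-style generator and checker. This is a hypothetical reimplementation written for illustration, not Magic Labs' evaluation code: the prompt wording, the 16-hex-character hashes, and all function names are assumptions. It builds chains of random hashes, writes each link as an `a = b` line in shuffled order, and computes the expected answer by following the chain.

```python
import random


def random_hash(rng: random.Random) -> str:
    """16 hex characters of randomness; random strings give no semantic hints."""
    return f"{rng.getrandbits(64):016x}"


def make_hashhop_task(n_chains: int, hops: int, seed: int = 0):
    """Build a simplified HashHop-style task.

    Creates `n_chains` chains h0 -> h1 -> ... -> h_hops, writes every link as an
    "a = b" line, shuffles the lines, and picks one chain to query.
    Returns (lines, query_hash, expected_answer).
    """
    rng = random.Random(seed)
    chains = [[random_hash(rng) for _ in range(hops + 1)] for _ in range(n_chains)]
    lines = [f"{chain[i]} = {chain[i + 1]}" for chain in chains for i in range(hops)]
    rng.shuffle(lines)  # line order reveals nothing about which links form a chain
    target = rng.choice(chains)
    return lines, target[0], target[-1]


def build_prompt(lines: list[str], query: str, hops: int) -> str:
    """Join all pairs into one long context and append the question."""
    context = "\n".join(lines)
    return (f"{context}\n\nStarting from {query}, follow {hops} links. "
            "Answer with the final hash only.")


def follow_chain(lines: list[str], start: str, hops: int) -> str:
    """Reference solver: parse the pairs and follow `hops` links from `start`."""
    mapping = dict(line.split(" = ") for line in lines)
    current = start
    for _ in range(hops):
        current = mapping[current]
    return current


def score(model_answer: str, expected: str) -> bool:
    """Exact-match scoring after trimming surrounding whitespace."""
    return model_answer.strip() == expected
```

Scaling `n_chains` up scales the context length, so the same generator can probe retrieval at increasing context sizes; the model call itself is left out because it depends on whichever API is being tested.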

What are the potential implications of AI systems reaching a 100-million-token context length?

-A 100-million-token context could enable more sophisticated and realistic simulated worlds, better natural language processing, and coding tools that can understand and modify entire large codebases.

Why is the collaboration between OpenAI and the US AI Safety Institute significant?

-The collaboration signals a move toward AI safety oversight at the national level. Future OpenAI models will undergo pre-release testing by the institute to check them against safety standards, which is important for public trust and ethical AI development.

What is Bland AI, and how does it represent the future of AI in the workforce?

-Bland AI is an AI agent designed for enterprises to handle millions of phone calls simultaneously in any language, demonstrating the future of AI in the workforce by automating customer service and sales calls. It signifies a shift towards AI-driven efficiency in industries that heavily rely on phone communication.

How does the concept of 'conversational pathways' in Bland AI ensure intelligent and hallucination-proof conversations?

-Bland AI uses "conversational pathways": a tree of prompts that guides the agent through a conversation step by step, so it stays on track and responds appropriately in context. This structured approach reduces the risk of irrelevant or incorrect responses.
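The idea of a tree of prompts can be sketched as a small state machine: each node holds the instruction for one step of the call, and edges map the caller's intent to the next node. This is a generic, hypothetical illustration, not Bland AI's actual API or configuration format; in particular, `classify_intent` is a keyword-matching placeholder for what would realistically be a model-based classifier.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """One step of a call: the instruction the agent follows at this point."""
    name: str
    prompt: str
    # Maps a classified caller intent to the name of the next node.
    edges: dict[str, str] = field(default_factory=dict)


class Pathway:
    """Walks a tree of prompts; the agent can only move along defined edges."""

    def __init__(self, nodes: list[Node], start: str):
        self.nodes = {node.name: node for node in nodes}
        self.current = self.nodes[start]

    def step(self, intent: str) -> Node:
        """Follow the edge for `intent`; stay on the current node if none matches."""
        next_name = self.current.edges.get(intent)
        if next_name is not None:
            self.current = self.nodes[next_name]
        return self.current


def classify_intent(utterance: str) -> str:
    """Hypothetical keyword classifier standing in for a model-based one."""
    text = utterance.lower()
    if "not interested" in text or "no thanks" in text:
        return "not_interested"
    if "appointment" in text or "book" in text:
        return "wants_appointment"
    if "yes" in text or "confirm" in text:
        return "confirmed"
    return "unknown"


def example_pathway() -> Pathway:
    """A tiny appointment-booking pathway with illustrative content."""
    return Pathway(
        [
            Node("greeting", "Greet the caller and ask how you can help.",
                 {"wants_appointment": "schedule", "not_interested": "goodbye"}),
            Node("schedule", "Offer available times and ask the caller to confirm one.",
                 {"confirmed": "goodbye", "not_interested": "goodbye"}),
            Node("goodbye", "Thank the caller and end the call."),
        ],
        start="greeting",
    )
```

Calling `example_pathway().step(classify_intent("I'd like to book an appointment"))` moves the call to the `schedule` node. The design choice worth noting is that an unrecognized intent keeps the agent on its current node instead of letting it improvise, which is what constrains the conversation.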

What is computational irreducibility, and why is it important in the context of AI and machine learning?

-Computational irreducibility, a concept developed by Stephen Wolfram, describes computations that have no shortcut: the only way to find out what the system will do is to run it step by step. In AI and machine learning, this implies that the mechanisms behind deep learning may be too complex for humans to fully understand. Wolfram suggests that machine learning works more like evolutionary adaptation, finding pieces of computation that happen to work, than like a structured, engineered mechanism.
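Wolfram's standard example of computational irreducibility is the Rule 30 cellular automaton. Each cell's next value depends only on itself and its two neighbours, yet no shortcut is known for predicting, say, the center cell after n steps other than simulating all n steps. The sketch below does exactly that brute-force simulation; the function names are illustrative.

```python
def rule30_step(cells: list[int]) -> list[int]:
    """Apply Rule 30 once; cells beyond either end are treated as 0."""
    padded = [0] + cells + [0]
    # The (left, center, right) neighbourhood forms a 3-bit index into the
    # number 30 = 0b00011110, whose bits are the rule's lookup table.
    return [
        (30 >> (padded[i - 1] << 2 | padded[i] << 1 | padded[i + 1])) & 1
        for i in range(1, len(padded) - 1)
    ]


def center_column(steps: int) -> list[int]:
    """Center cell over time, starting from a single 1 in the middle.

    The row has width 2 * steps + 1, so the pattern, which spreads one cell
    per step in each direction, never reaches the edges within `steps` updates.
    """
    cells = [0] * (2 * steps + 1)
    cells[steps] = 1
    column = [cells[steps]]
    for _ in range(steps):
        cells = rule30_step(cells)
        column.append(cells[steps])
    return column


def render(steps: int) -> str:
    """Draw the evolving pattern, one row per step."""
    cells = [0] * (2 * steps + 1)
    cells[steps] = 1
    rows = []
    for _ in range(steps + 1):
        rows.append("".join("#" if c else "." for c in cells))
        cells = rule30_step(cells)
    return "\n".join(rows)
```

`print(render(15))` shows the irregular triangle Rule 30 produces, and `center_column(6)` returns `[1, 1, 0, 1, 1, 1, 0]`. Getting the n-th value requires running every earlier step, which is the point Wolfram makes about irreducible computation.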

How does Cerebras Inference affect the speed of AI model responses?

-Cerebras Inference is a significant advance in response speed: it is reported to run about twice as fast as Groq's inference service, at roughly 1,800 tokens per second on Llama 3.1 8B. That could be a game-changer for real-time applications and points toward AI that delivers complex analysis and responses almost instantly.
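Throughput figures translate directly into waiting time. The sketch below converts a decode rate into the time needed to stream a response and compares two rates. The 1,000 and 500 tokens-per-second values in the example are illustrative placeholders, not measured numbers for Cerebras, Groq, or any other provider, and the model ignores network latency.

```python
def generation_seconds(n_tokens: int, tokens_per_second: float,
                       time_to_first_token: float = 0.0) -> float:
    """Seconds to receive `n_tokens` at a steady decode rate, plus startup delay."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return time_to_first_token + n_tokens / tokens_per_second


def speedup(fast_tps: float, slow_tps: float) -> float:
    """How many times faster the first rate is than the second."""
    return fast_tps / slow_tps


if __name__ == "__main__":
    # Illustrative rates only, chosen to show a 2x gap.
    answer_tokens = 1_000
    for label, tps in [("faster service", 1_000.0), ("slower service", 500.0)]:
        print(f"{label}: {generation_seconds(answer_tokens, tps):.1f} s")
    print(f"speedup: {speedup(1_000.0, 500.0):.1f}x")
```

At these placeholder rates, a 1,000-token answer takes 1.0 s instead of 2.0 s. In practice, time to first token often dominates short replies, which is why it is kept as a separate parameter.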

What are the potential applications of AI increasing the rate of discovery in biology by 100x?

-If AI can increase the rate of discovery in biology by 100x, it could significantly compress the timeline for research and development in fields like genomics, pharmaceuticals, and disease treatment. This could lead to faster cures for diseases, advancements in longevity research, and a general acceleration of scientific progress in biology.

Outlines

Этот раздел доступен только подписчикам платных тарифов. Пожалуйста, перейдите на платный тариф для доступа.

Перейти на платный тарифMindmap

Этот раздел доступен только подписчикам платных тарифов. Пожалуйста, перейдите на платный тариф для доступа.

Перейти на платный тарифKeywords

Этот раздел доступен только подписчикам платных тарифов. Пожалуйста, перейдите на платный тариф для доступа.

Перейти на платный тарифHighlights

Этот раздел доступен только подписчикам платных тарифов. Пожалуйста, перейдите на платный тариф для доступа.

Перейти на платный тарифTranscripts

Этот раздел доступен только подписчикам платных тарифов. Пожалуйста, перейдите на платный тариф для доступа.

Перейти на платный тарифПосмотреть больше похожих видео

AI Never Sleeps: This Week's News That Shook the AI World!

Why Unreal Engine 5.5 is a BIG Deal

Google goes wild, again... 11 things you missed at I/O

Doom Powered Entirely by AI, Cursor AI, Meta SAPIEN, OpenAI Drama, Project Orion

BATALHA de INTELIGÊNCIA ARTIFICIAL! - Gemini | ChatGPT-4o

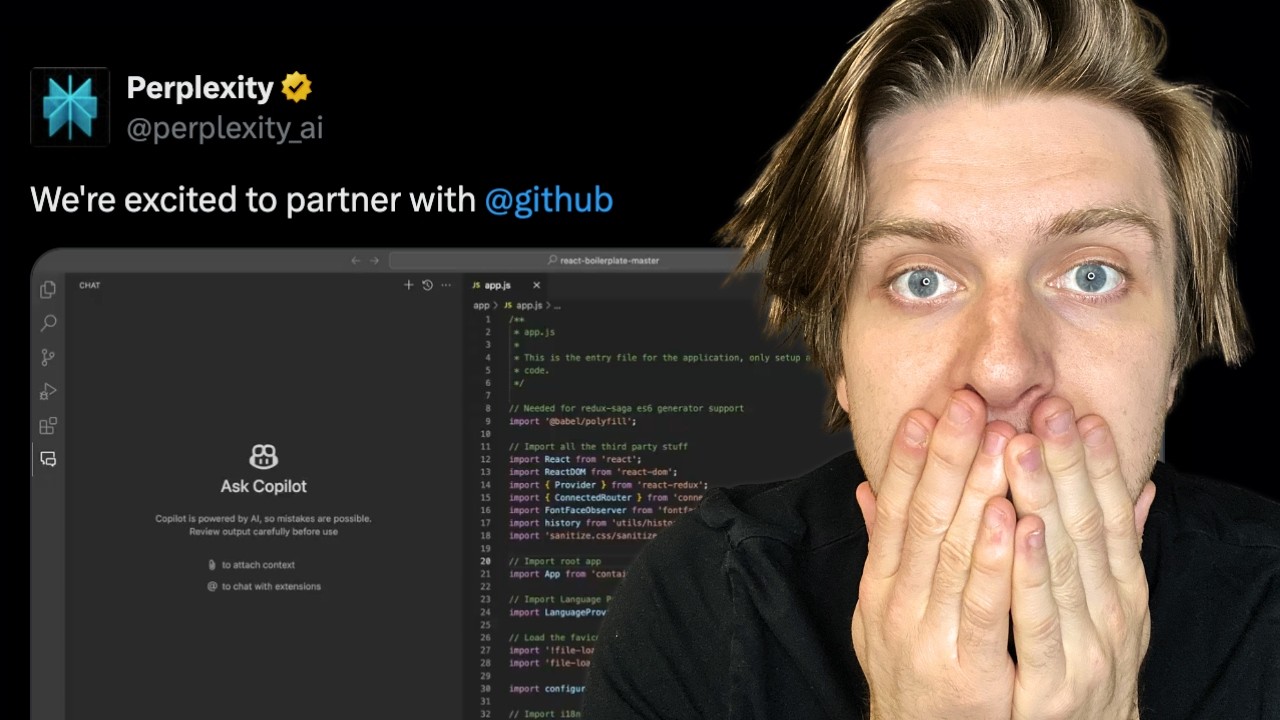

GitHub Copilot Just Destroyed All AI Code Editor Startups

5.0 / 5 (0 votes)