Cross-validation for minimizing MSE

Summary

TLDR: The script discusses the trace of a matrix, particularly its relevance to covariance matrices and eigenvalues. It examines the mean squared error (MSE) of an estimator and introduces a modified estimator that can reduce the MSE by adding \( \lambda I \) (lambda times the identity matrix) to \(X X^T\), which raises every eigenvalue of \(X X^T\) by \( \lambda \). The script also covers how cross-validation is used to choose lambda, explaining the procedure and why it matters for estimator performance.

Takeaways

- 📉 The trace of \((X X^T)^{-1}\) is a key quantity in the analysis: the MSE of the maximum likelihood estimator is proportional to it.

- 📊 The trace of a matrix is the sum of its diagonal entries.

- 🔄 The trace of a matrix is also the sum of its eigenvalues.

- 🔢 The eigenvalues of \((X X^T)^{-1}\) are the reciprocals of the eigenvalues of \(X X^T\).

- 📈 The mean squared error (MSE) can be written in terms of the eigenvalues of \(X X^T\).

- ✨ A new estimator \( \hat{W}_{new} = (X X^T + \lambda I)^{-1} X Y \) is introduced, which adds a regularization term \( \lambda I \) to \(X X^T\).

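- 🧮 Adding \( \lambda I \) increases every eigenvalue of \(X X^T\) by \( \lambda \), which shrinks the trace of the inverse; it also introduces bias, so the MSE goes down only for a suitable \( \lambda \).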

- 🔍 There exists a \( \lambda > 0 \) for which the new estimator has a lower MSE than the maximum likelihood estimator (MLE).

- 🔬 Cross-validation is used in practice to choose \( \lambda \), by estimating the error on held-out data for each candidate value.

- 🔄 K-fold cross-validation divides the dataset into K parts; each part in turn is held out for validation while the estimator is trained on the other K-1 parts.

- 🧩 The process aims to find the \( \lambda \) that gives the least average error across all folds.

- 💡 Leave-one-out cross-validation is the extreme case where K equals the number of data points, so each validation fold contains a single point.

- ⚖️ The choice of K depends on the available time and compute: a larger K means more training runs for each candidate \( \lambda \).

- 🤔 The new estimator can also be derived from a more principled, probabilistic modeling approach.

Q & A

What is the trace of a matrix?

-The trace of a square matrix is the sum of its diagonal entries. If a \(d \times d\) matrix \(A\) has diagonal entries \(a_{11}, a_{22}, \dots, a_{dd}\), then the trace of \(A\), denoted \(\operatorname{Tr}(A)\), is \(\sum_{i=1}^{d} a_{ii}\).

How is the trace of a matrix related to its eigenvalues?

-The trace of a square matrix equals the sum of its eigenvalues, counted with multiplicity. If \(\lambda_1, \lambda_2, \dots, \lambda_d\) are the eigenvalues of \(A\), then \(\operatorname{Tr}(A) = \sum_{i=1}^{d} \lambda_i\).
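A quick numerical check of both facts, using NumPy and a small matrix chosen purely for illustration. The matrix is symmetric so that `np.linalg.eigvalsh` applies and the eigenvalues are real:

```python
import numpy as np

# Hypothetical symmetric example matrix (symmetric => real eigenvalues)
A = np.array([[4.0, 1.0, 0.0],
              [1.0, 3.0, 2.0],
              [0.0, 2.0, 5.0]])

trace_from_diagonal = np.trace(A)                     # 4 + 3 + 5
trace_from_eigenvalues = np.linalg.eigvalsh(A).sum()  # sum of the eigenvalues

print(trace_from_diagonal)                                      # 12.0
print(np.isclose(trace_from_diagonal, trace_from_eigenvalues))  # True
```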

What is the significance of the trace in the context of the mean squared error (MSE)?

-Under Gaussian noise with variance \(\sigma^2\), the MSE of the maximum likelihood estimator equals \(\sigma^2 \operatorname{Tr}\big((XX^T)^{-1}\big)\), and this trace is the sum of the reciprocals of the eigenvalues of \(XX^T\). Small eigenvalues of \(XX^T\) therefore make the MSE large.
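A minimal Monte Carlo sketch of this relationship, assuming the linear model \(y = X^T w^* + \varepsilon\) with \(\varepsilon \sim \mathcal{N}(0, \sigma^2 I)\) and \(X\) stored as a \(d \times n\) matrix (one column per data point, matching the formula \((XX^T)^{-1}XY\)). The sizes, `w_star`, and `sigma` are hypothetical:

```python
import numpy as np

rng = np.random.default_rng(0)

d, n, sigma = 3, 20, 0.5              # hypothetical sizes and noise level
X = rng.normal(size=(d, n))           # d x n: one column per data point
w_star = np.array([1.0, -2.0, 0.5])   # hypothetical true weights
A = X @ X.T

# Predicted MSE of the MLE, via the trace and via the eigenvalues of X X^T
predicted = sigma**2 * np.trace(np.linalg.inv(A))
predicted_eigs = sigma**2 * np.sum(1.0 / np.linalg.eigvalsh(A))

# Monte Carlo: redraw the noise many times and refit the MLE each time
trials = 20000
Y = (X.T @ w_star)[:, None] + sigma * rng.normal(size=(n, trials))  # n x trials
W_ml = np.linalg.solve(A, X @ Y)      # each column is (X X^T)^{-1} X y
empirical = np.mean(np.sum((W_ml - w_star[:, None]) ** 2, axis=0))

print(predicted, predicted_eigs, empirical)
```

The empirical average should land close to the predicted value, and the two predicted values agree up to floating-point error.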

What is the relationship between the eigenvalues of a matrix and its inverse?

-The eigenvalues of the inverse of a matrix are the reciprocals of the original matrix's eigenvalues, provided that none of the original eigenvalues are zero.
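The reciprocal relationship can be checked numerically. The data matrix below is random and purely illustrative, and `eigvalsh` is used because \(XX^T\) is symmetric. Note that taking reciprocals reverses the order, which is why the smallest eigenvalues of \(XX^T\) dominate the trace of its inverse:

```python
import numpy as np

rng = np.random.default_rng(1)
X = rng.normal(size=(3, 10))   # hypothetical data, d x n
A = X @ X.T                    # symmetric positive definite when X has full row rank

eig_A = np.linalg.eigvalsh(A)                       # ascending order
eig_A_inv = np.linalg.eigvalsh(np.linalg.inv(A))    # ascending order

# Reciprocals reverse the ordering, so sort them before comparing
print(np.allclose(eig_A_inv, np.sort(1.0 / eig_A)))  # True
```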

What is the purpose of adding lambda times the identity matrix to XX^T in the new estimator?

-Adding \(\lambda I\) to \(XX^T\) shifts each eigenvalue \(\lambda_i\) of \(XX^T\) to \(\lambda_i + \lambda\), so the trace of the inverse drops from \(\sum_i 1/\lambda_i\) to \(\sum_i 1/(\lambda_i + \lambda)\). Increasing these denominators can reduce the MSE.
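A small sketch confirming the shift and its effect on the trace of the inverse. The value of `lam` and the random data are illustrative choices, not values from the script:

```python
import numpy as np

rng = np.random.default_rng(2)
X = rng.normal(size=(3, 10))   # hypothetical data, d x n
A = X @ X.T
lam = 0.5                      # hypothetical regularization strength

eig_A = np.linalg.eigvalsh(A)
eig_shifted = np.linalg.eigvalsh(A + lam * np.eye(3))

# Adding lam * I moves every eigenvalue up by exactly lam (same eigenvectors)
print(np.allclose(eig_shifted, eig_A + lam))   # True

# Hence each reciprocal shrinks and the trace of the inverse goes down
trace_before = np.sum(1.0 / eig_A)
trace_after = np.sum(1.0 / (eig_A + lam))
print(trace_after < trace_before)              # True
```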

How does the new estimator \(\hat{W}_{new}\) differ from the maximum likelihood estimator (MLE)?

-The new estimator, \(\hat{W}_{new}\), is defined as \((XX^T + \lambda I)^{-1} X Y\), where \(\lambda\) is a positive real number and \(I\) is the identity matrix. The MLE is \((XX^T)^{-1} X Y\), so the new estimator differs only by the added term \(\lambda I\), which adjusts the eigenvalues.
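Both estimators written as NumPy functions, as a sketch assuming \(X\) is \(d \times n\) (one column per data point) and \(Y\) is a length-\(n\) vector. The function names and data are hypothetical; the sketch uses `np.linalg.solve` rather than forming the inverse explicitly, which gives the same result with better numerical behavior:

```python
import numpy as np

def w_mle(X, y):
    """MLE: (X X^T)^{-1} X y, with X stored as d x n."""
    return np.linalg.solve(X @ X.T, X @ y)

def w_new(X, y, lam):
    """New estimator: (X X^T + lam I)^{-1} X y, with lam >= 0."""
    d = X.shape[0]
    return np.linalg.solve(X @ X.T + lam * np.eye(d), X @ y)

rng = np.random.default_rng(3)
X = rng.normal(size=(3, 15))                                   # hypothetical data
y = X.T @ np.array([1.0, 0.0, -1.0]) + 0.1 * rng.normal(size=15)

print(w_mle(X, y))
print(w_new(X, y, lam=1.0))
print(np.allclose(w_new(X, y, lam=0.0), w_mle(X, y)))  # True: lam = 0 recovers the MLE
```

For any `lam > 0` the new estimate has a smaller norm than the MLE: in the eigenbasis of \(XX^T\), each component of the MLE is scaled by \(\lambda_i / (\lambda_i + \lambda) < 1\).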

What is the advantage of using the new estimator with the added lambda term?

-The advantage is that by adding lambda to the diagonal of XX^T, the eigenvalues are increased, which in turn reduces the reciprocals in the trace of the inverse matrix, potentially leading to a lower MSE.

What is the existence theorem mentioned in the script?

-The existence theorem states that there exists some \(\lambda > 0\) for which the new estimator \(\hat{W}_{new}\) has a lower MSE than the MLE. However, it does not provide a method for finding this \(\lambda\).
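The theorem can be seen numerically under an assumed model \(y = X^T w^* + \varepsilon\), \(\varepsilon \sim \mathcal{N}(0, \sigma^2 I)\). In that setting the MSE of \(\hat{W}_{new}\) has the closed form \(\sum_i (\sigma^2 \lambda_i + \lambda^2 c_i^2) / (\lambda_i + \lambda)^2\), where \(\lambda_i\) are the eigenvalues of \(XX^T\) and \(c_i\) are the components of \(w^*\) in its eigenbasis; at \(\lambda = 0\) this reduces to \(\sigma^2 \sum_i 1/\lambda_i\), the MSE of the MLE. The data, `w_star`, `sigma`, and the helper `exact_mse` are hypothetical:

```python
import numpy as np

def exact_mse(X, w_star, sigma, lam):
    """E||w_new - w_star||^2 under y = X^T w_star + N(0, sigma^2 I) noise."""
    mu, U = np.linalg.eigh(X @ X.T)   # eigenvalues mu_i, eigenvectors in columns of U
    c = U.T @ w_star                  # true weights expressed in the eigenbasis
    variance = sigma**2 * np.sum(mu / (mu + lam) ** 2)
    bias_sq = lam**2 * np.sum(c**2 / (mu + lam) ** 2)
    return variance + bias_sq

rng = np.random.default_rng(4)
X = rng.normal(size=(3, 10))
w_star = np.array([1.0, -2.0, 0.5])
sigma = 1.0

lams = np.linspace(0.0, 5.0, 51)      # lam = 0 is the MLE
mses = np.array([exact_mse(X, w_star, sigma, lam) for lam in lams])
best = lams[np.argmin(mses)]
print(f"MLE (lam=0): {mses[0]:.4f}, best lam on grid: {best:.1f}, MSE: {mses.min():.4f}")
```

In this model any \(0 < \lambda < 2\sigma^2 / \lVert w^* \rVert^2\) already beats the MLE, but that bound depends on the unknown \(w^*\) and \(\sigma\), which is why a data-driven procedure is needed to pick \(\lambda\).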

How is the optimal lambda found in practice?

-In practice, the optimal lambda is found through a procedure called cross-validation, where different values of lambda are tested on a validation set, and the one that yields the lowest error is selected.
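A minimal hold-out version of this procedure, as a sketch: the split sizes, candidate grid, and synthetic data are all hypothetical. Because the true weights are unknown in practice, the validation error here is the squared prediction error on held-out points, a stand-in for the parameter MSE:

```python
import numpy as np

def w_new(X, y, lam):
    """(X X^T + lam I)^{-1} X y, with X stored as d x n (one column per point)."""
    return np.linalg.solve(X @ X.T + lam * np.eye(X.shape[0]), X @ y)

rng = np.random.default_rng(5)
d, n = 5, 60
X = rng.normal(size=(d, n))
y = X.T @ rng.normal(size=d) + rng.normal(size=n)   # synthetic data (hypothetical)

# Hold out the last 20 points as the validation set
X_tr, y_tr = X[:, :40], y[:40]
X_val, y_val = X[:, 40:], y[40:]

lams = [0.0, 0.01, 0.1, 1.0, 10.0, 100.0]   # hypothetical candidate grid
val_errors = []
for lam in lams:
    w = w_new(X_tr, y_tr, lam)                              # train on 40 points
    val_errors.append(np.mean((X_val.T @ w - y_val) ** 2))  # validation MSE

best_lam = lams[int(np.argmin(val_errors))]
print(best_lam, min(val_errors))
```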

What is K-fold cross-validation and how does it differ from regular cross-validation?

-K-fold cross-validation divides the data into K equal parts, or 'folds'. For each fold, the model is trained on the other K-1 folds and validated on the held-out fold. This is repeated K times, once per fold; for each candidate lambda the K validation errors are averaged, and the lambda with the lowest average error is selected. Compared with a single train-validation split, it gives a more robust estimate of performance, because every data point is used for validation exactly once.
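A sketch of K-fold selection of lambda, assuming \(X\) is \(d \times n\) with one column per data point; the data, the candidate grid, and the helper names `kfold_error` and `choose_lambda` are hypothetical. Using one fixed shuffle for every candidate lambda keeps the comparison fair, and setting `K` to the number of data points gives leave-one-out cross-validation:

```python
import numpy as np

def w_new(X, y, lam):
    """(X X^T + lam I)^{-1} X y, with X stored as d x n."""
    return np.linalg.solve(X @ X.T + lam * np.eye(X.shape[0]), X @ y)

def kfold_error(X, y, lam, K, seed=0):
    """Average validation MSE of w_new over K folds."""
    n = X.shape[1]
    idx = np.random.default_rng(seed).permutation(n)  # same shuffle for every lam
    folds = np.array_split(idx, K)                     # K nearly equal parts
    errs = []
    for val_idx in folds:
        tr_idx = np.setdiff1d(idx, val_idx)            # the other K-1 folds
        w = w_new(X[:, tr_idx], y[tr_idx], lam)
        errs.append(np.mean((X[:, val_idx].T @ w - y[val_idx]) ** 2))
    return np.mean(errs)

def choose_lambda(X, y, lams, K):
    """Return the candidate with the lowest average K-fold error, plus all scores."""
    scores = [kfold_error(X, y, lam, K) for lam in lams]
    return lams[int(np.argmin(scores))], scores

rng = np.random.default_rng(6)
X = rng.normal(size=(5, 50))
y = X.T @ rng.normal(size=5) + rng.normal(size=50)
lams = [0.0, 0.1, 1.0, 10.0, 100.0]

best_5fold, _ = choose_lambda(X, y, lams, K=5)
best_loo, _ = choose_lambda(X, y, lams, K=X.shape[1])   # K = n: leave-one-out
print(best_5fold, best_loo)
```

Leave-one-out here needs one fit per data point for every candidate lambda, which illustrates why the choice of K trades accuracy of the estimate against compute.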

What is the principled way to understand the new estimator introduced in the script?

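-The principled way to understand the new estimator is through regularization, a common technique in machine learning that prevents overfitting by adding a penalty term to the loss function. Here the penalty is lambda times the squared norm of the weight vector, \(\lambda \lVert w \rVert^2\); minimizing the penalized squared error is what places \(\lambda I\) next to \(XX^T\) in the estimator. This estimator is known as ridge regression.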
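Assuming the penalized loss is squared error plus \(\lambda \lVert w \rVert^2\) (the standard ridge objective; the script's exact loss is not reproduced here), setting the gradient to zero recovers the new estimator:

```latex
\begin{aligned}
L(w) &= \lVert X^\top w - y \rVert^2 + \lambda \lVert w \rVert^2 \\
\nabla_w L(w) &= 2X\,(X^\top w - y) + 2\lambda w = 0 \\
\Longrightarrow\quad (XX^\top + \lambda I)\, w &= X y \\
\Longrightarrow\quad \hat{w} &= (XX^\top + \lambda I)^{-1} X y
\end{aligned}
```

For \(\lambda > 0\) every eigenvalue of \(XX^\top + \lambda I\) is at least \(\lambda\), so the matrix is always invertible, even when \(XX^\top\) itself is singular.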

Outlines

このセクションは有料ユーザー限定です。 アクセスするには、アップグレードをお願いします。

今すぐアップグレードMindmap

このセクションは有料ユーザー限定です。 アクセスするには、アップグレードをお願いします。

今すぐアップグレードKeywords

このセクションは有料ユーザー限定です。 アクセスするには、アップグレードをお願いします。

今すぐアップグレードHighlights

このセクションは有料ユーザー限定です。 アクセスするには、アップグレードをお願いします。

今すぐアップグレードTranscripts

このセクションは有料ユーザー限定です。 アクセスするには、アップグレードをお願いします。

今すぐアップグレード関連動画をさらに表示

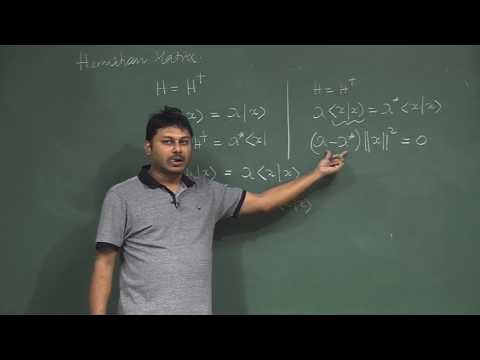

Lecture 16: Hermitian Matrix

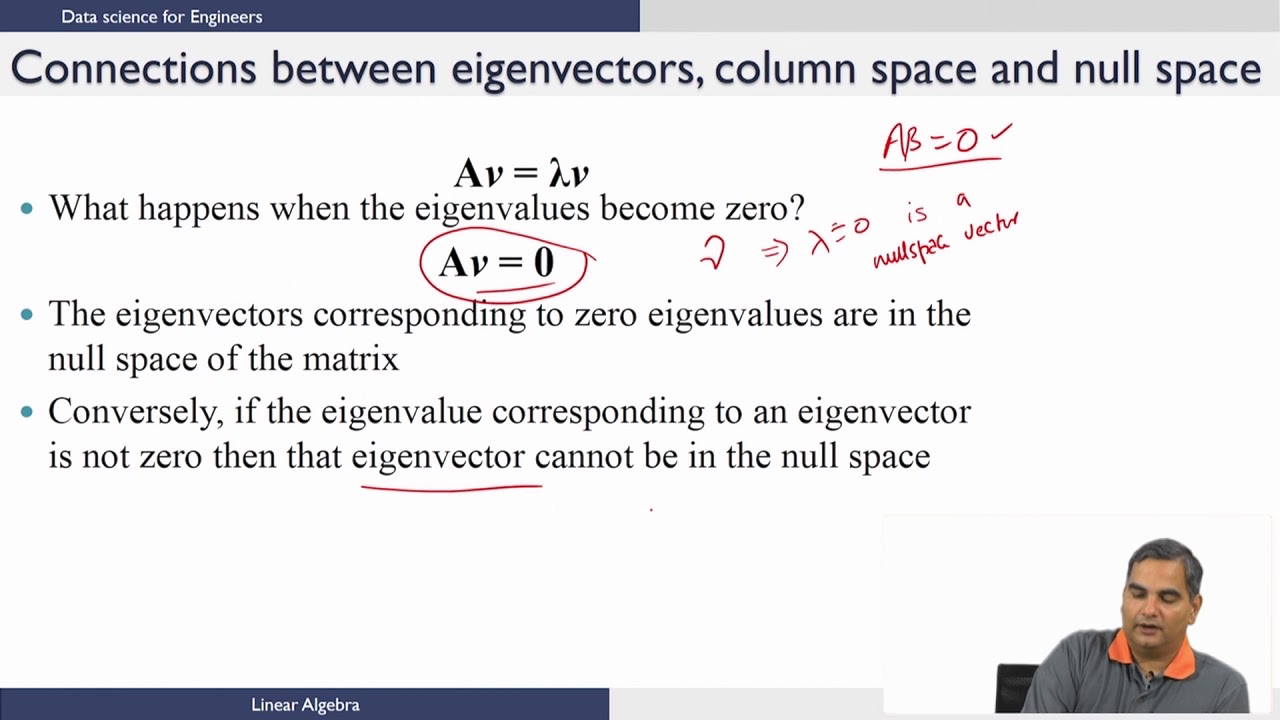

Linear Algebra - Distance,Hyperplanes and Halfspaces,Eigenvalues,Eigenvectors ( Continued 3 )

Bentuk Kuadrat [Aljabar Matriks]

MATRIKS RUANG VEKTOR | NILAI EIGEN MATRIKS 2x2 DAN 3x3

Eigenvalues and Eigenvectors | Properties and Important Result | Matrices

MATRIKS RUANG VEKTOR | VEKTOR EIGEN DAN BASIS RUANG EIGEN

5.0 / 5 (0 votes)