Introduction to Generative AI

Summary

TLDR: This video introduces generative AI: what it is, how it works, and where it is applied. Roger Martinez from Google Cloud covers artificial intelligence, machine learning, supervised and unsupervised models, and deep learning. He explains the difference between generative and discriminative models and highlights the power of large language models such as Gemini. The video also covers practical applications of generative AI, such as text-to-image and code generation, and introduces tools like Vertex AI Studio and the PaLM API that let developers build on Google's AI technologies.

Takeaways

- 🤖 Generative AI is a type of artificial intelligence that creates new content, such as text, images, audio, and synthetic data, based on patterns learned from existing data.

- 🧠 Artificial intelligence (AI) is a branch of computer science focused on building machines that can reason, learn, and act like humans. Machine learning (ML) is a subfield of AI that trains models on input data so they can make predictions on new, unseen data.

- 📝 Supervised learning trains on labeled data to predict values for new inputs, while unsupervised learning finds patterns in unlabeled data, for example by clustering similar data points.

- 🔗 Deep learning, a subset of machine learning, uses artificial neural networks to learn more complex patterns and can be trained on a mix of labeled and unlabeled data (semi-supervised learning).

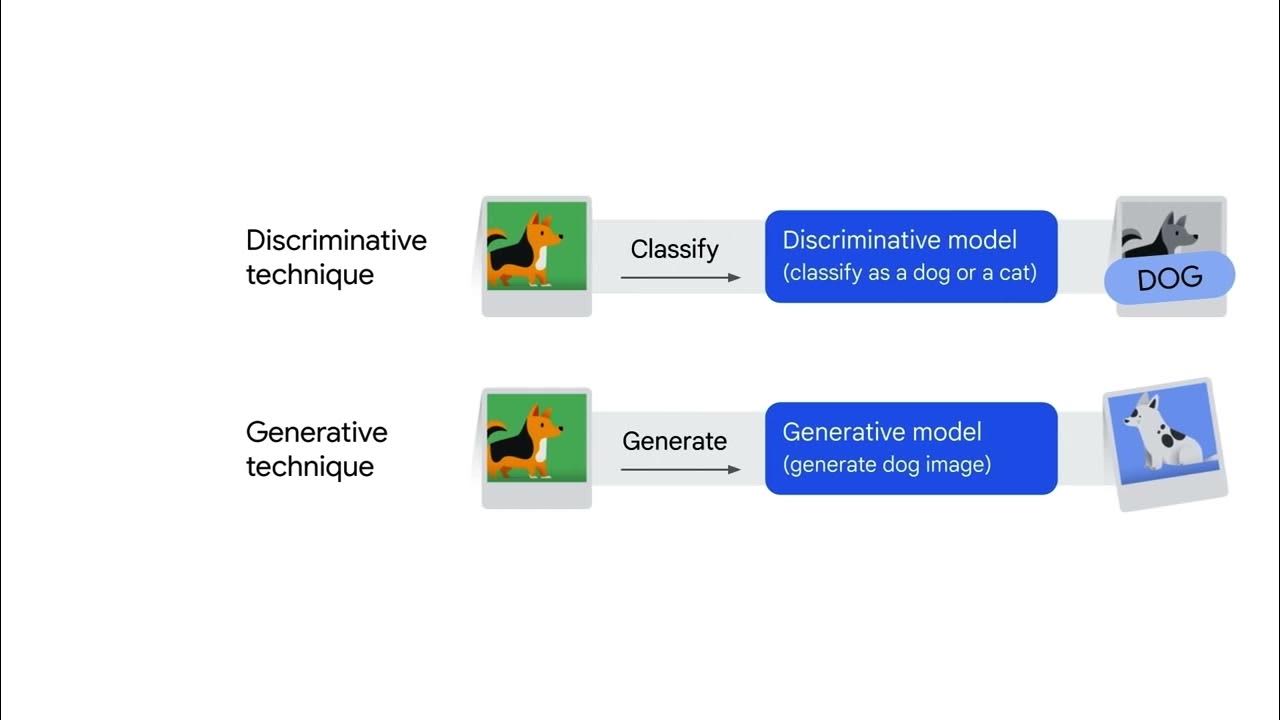

- 💡 Generative AI is a subset of deep learning. Generative models create new data instances, while discriminative models classify data or predict labels.

- 📊 Large language models (LLMs), which power many generative AI applications, are built on transformers: an encoder encodes the input sequence, and a decoder learns to decode that representation for a relevant task.

- 🧩 Generative models can turn an input into many kinds of output, including text, images, audio, and video; examples include text-to-image and text-to-task models.

- 🌍 Foundation models, such as those available through Google's Vertex AI and the PaLM API, are large AI models pre-trained on vast datasets. They can then be adapted (fine-tuned) for tasks like sentiment analysis, image generation, and fraud detection.

- 💻 Gemini, a multimodal AI model, can process text, images, audio, and code, making it highly versatile for complex tasks that require understanding multiple types of input.

- 🚀 Tools like Vertex AI Studio, Vertex AI Search and Conversation, and the PaLM API make it easier for developers to build and deploy generative AI models, even with limited coding experience.

Q & A

What is generative AI?

-Generative AI is a type of artificial intelligence that creates new content, such as text, imagery, audio, or synthetic data, based on patterns it has learned from existing data.

How does generative AI differ from traditional AI and machine learning?

-Traditional AI focuses on creating systems that can reason and act like humans. Machine learning is a subfield of AI where models learn from data to make predictions. Generative AI, a subset of deep learning, goes further by generating new content rather than just making predictions.

What are the two main types of machine learning models?

-The two main types are supervised models, which are trained on labeled data, and unsupervised models, which find patterns in unlabeled data.
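
To make the supervised/unsupervised distinction concrete, here is a minimal sketch in plain Python, assuming a toy one-dimensional dataset (the numbers and the function names `train_nearest_centroid`, `predict`, and `kmeans_1d` are hypothetical, chosen only for illustration). The supervised half learns from labels; the unsupervised half (1-D k-means) receives the same points with the labels stripped and discovers the two groups on its own.

```python
import statistics

# Hypothetical toy data: (feature, label) pairs, for illustration only.
labeled = [(1.0, "small"), (1.5, "small"), (2.0, "small"),
           (8.0, "large"), (9.0, "large"), (10.0, "large")]

def train_nearest_centroid(examples):
    """Supervised: learn one centroid (mean feature value) per known label."""
    by_label = {}
    for x, y in examples:
        by_label.setdefault(y, []).append(x)
    return {y: statistics.mean(xs) for y, xs in by_label.items()}

def predict(centroids, x):
    """Predict the label whose centroid is closest to x."""
    return min(centroids, key=lambda y: abs(x - centroids[y]))

def kmeans_1d(points, k=2, iterations=10):
    """Unsupervised: group unlabeled points into k clusters (1-D k-means)."""
    # Simple deterministic initialization: evenly spaced points from the sorted data.
    centers = sorted(points)[:: max(1, len(points) // k)][:k]
    clusters = []
    for _ in range(iterations):
        clusters = [[] for _ in centers]
        for p in points:
            nearest = min(range(len(centers)), key=lambda i: abs(p - centers[i]))
            clusters[nearest].append(p)
        # Move each center to the mean of its cluster (keep it if the cluster is empty).
        centers = [statistics.mean(c) if c else centers[i] for i, c in enumerate(clusters)]
    return centers, clusters

centroids = train_nearest_centroid(labeled)
print(predict(centroids, 7.5))  # large

unlabeled = [x for x, _ in labeled]  # same points, labels removed
centers, clusters = kmeans_1d(unlabeled)
print(centers, clusters)
```

The clustering step never sees the words "small" or "large"; it only finds that the points fall into two groups, which is the essential difference from the supervised case.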

How does deep learning relate to machine learning?

-Deep learning is a subset of machine learning that uses artificial neural networks, inspired by the structure of the human brain, which allow models to learn more complex patterns than traditional machine learning methods.
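
A small illustration of why layered neural networks can capture patterns that a single linear model cannot: the sketch below wires up a two-layer network that computes XOR. The weights are hand-picked assumptions, not learned; a real deep learning model would learn its weights by training (typically backpropagation) and would usually stack many more layers. The helper names `relu`, `dense`, and `xor_network` are hypothetical.

```python
def relu(z):
    """Rectified linear unit, a common neural-network activation function."""
    return max(0.0, z)

def dense(inputs, weights, biases, activation=None):
    """One fully connected layer: each neuron computes a weighted sum plus a bias."""
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        z = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        outputs.append(activation(z) if activation else z)
    return outputs

# Hand-picked (not learned) weights for a 2-input, 2-hidden-neuron, 1-output network.
HIDDEN_W = [[1.0, 1.0], [1.0, 1.0]]
HIDDEN_B = [0.0, -1.0]
OUTPUT_W = [[1.0, -2.0]]
OUTPUT_B = [0.0]

def xor_network(x1, x2):
    hidden = dense([x1, x2], HIDDEN_W, HIDDEN_B, activation=relu)  # non-linear hidden layer
    return dense(hidden, OUTPUT_W, OUTPUT_B)[0]                    # linear output layer

for a in (0, 1):
    for b in (0, 1):
        print(a, b, xor_network(a, b))
```

The non-linear activation in the hidden layer is what makes this work; removing it collapses the network back into a single linear function, which cannot represent XOR.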

What is the difference between a generative and a discriminative model?

-Discriminative models classify or predict labels for data, while generative models learn the underlying structure of data to create new content, such as generating text, images, or audio.
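
The contrast can be sketched with two tiny classifiers trained on the same made-up one-dimensional data. This is an illustration under simplifying assumptions, not how production generative models work: the "generative" model fits one Gaussian per class (modeling how the data itself is distributed, so it can also sample new data), while the "discriminative" model is logistic regression, which only learns the probability of a label given an input. Class and method names are hypothetical.

```python
import math
import random
import statistics

# Hypothetical toy data: (feature, label). Values are made up for illustration.
DATA = [(1.0, "cat"), (1.2, "cat"), (0.8, "cat"),
        (3.0, "dog"), (3.3, "dog"), (2.7, "dog")]

def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

class GaussianGenerative:
    """Generative: models p(x | label) and p(label), so it can also create new x."""

    def fit(self, data):
        self.params = {}
        for label in sorted({y for _, y in data}):
            xs = [x for x, y in data if y == label]
            self.params[label] = (statistics.mean(xs), statistics.stdev(xs), len(xs) / len(data))
        return self

    def predict(self, x):
        # Bayes' rule: pick the label maximizing log p(x | label) + log p(label).
        def log_score(label):
            mu, sigma, prior = self.params[label]
            return math.log(prior) - math.log(sigma) - (x - mu) ** 2 / (2 * sigma ** 2)
        return max(self.params, key=log_score)

    def sample(self, label, rng):
        # Generate a brand-new feature value that resembles the given class.
        mu, sigma, _ = self.params[label]
        return rng.gauss(mu, sigma)

class LogisticDiscriminative:
    """Discriminative: models only p(label | x); it has no way to generate x."""

    def fit(self, data, positive, epochs=2000, lr=0.1):
        self.positive, self.w, self.b = positive, 0.0, 0.0
        for _ in range(epochs):
            for x, y in data:
                target = 1.0 if y == positive else 0.0
                error = sigmoid(self.w * x + self.b) - target
                self.w -= lr * error * x  # gradient of the log loss w.r.t. w
                self.b -= lr * error      # gradient of the log loss w.r.t. b
        return self

    def prob_positive(self, x):
        return sigmoid(self.w * x + self.b)

gen = GaussianGenerative().fit(DATA)
disc = LogisticDiscriminative().fit(DATA, positive="dog")
print(gen.predict(1.1), gen.predict(3.1))   # cat dog
print(disc.prob_positive(3.1) > 0.5)        # True
print(gen.sample("dog", random.Random(0)))  # a new, synthetic "dog" feature value
```

Both models can label an input, but only the generative one has a `sample` step, which mirrors the distinction drawn in the answer above.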

What are large language models (LLMs), and how do they relate to generative AI?

-LLMs are a subset of deep learning that can generate natural-sounding language based on patterns in large datasets. They are a key component of generative AI, allowing for applications like text generation and dialogue systems.
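
The core idea of "learn patterns from text, then generate new text" can be shown with a drastically simplified stand-in: a bigram model that counts which word follows which and then samples a continuation one word at a time. Real LLMs use transformer neural networks trained on enormous datasets rather than word-pair counts; the corpus and function names here are hypothetical and serve only to illustrate next-word generation.

```python
import random
from collections import Counter, defaultdict

def train_bigram_model(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def generate(model, start, max_words, rng):
    """Sample a continuation word by word, in proportion to the learned counts."""
    output = [start]
    for _ in range(max_words):
        candidates = model.get(output[-1])
        if not candidates:  # no word ever followed this one in training
            break
        words, counts = zip(*candidates.items())
        output.append(rng.choices(words, weights=counts, k=1)[0])
    return output

# Hypothetical toy corpus.
corpus = "the cat sat on the mat . the dog sat on the rug . the cat ate the fish ."
model = train_bigram_model(corpus)
print(" ".join(generate(model, "the", 8, random.Random(42))))
```

Each generated word is chosen only because it followed the previous word somewhere in the training text, so the output can be a sentence that never appeared in the corpus yet still follows its patterns.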

What role do transformers play in generative AI?

-Transformers are a deep learning architecture that revolutionized natural language processing. An encoder encodes the input sequence, and a decoder learns to decode that representation for a relevant task, which has made generative AI far more powerful.
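
The summary does not go into the internals, but the central operation inside both the encoder and the decoder of the original transformer architecture is scaled dot-product attention, softmax(QKᵀ / √d_k)·V. Below is a plain-Python sketch of just that operation. It deliberately omits the learned query/key/value projection matrices, multiple heads, positional information, masking, and feed-forward layers that a real transformer adds; the toy token vectors are made up.

```python
import math

def softmax(scores):
    """Convert raw scores into weights that are positive and sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def scaled_dot_product_attention(queries, keys, values):
    """softmax(Q K^T / sqrt(d_k)) V with plain lists.

    queries: n_q vectors of size d_k; keys: n_k vectors of size d_k;
    values: n_k vectors of size d_v. Returns (outputs, attention_weights).
    """
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # How strongly this query position attends to each key position.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # The output is the attention-weighted average of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Self-attention over three toy 2-dimensional token vectors (Q = K = V).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = scaled_dot_product_attention(tokens, tokens, tokens)
for row in weights:
    print([round(w, 3) for w in row])
```

Each row of `weights` shows how much one token draws on every other token when building its new representation; stacking this operation with learned projections is what lets transformers relate words across a whole sequence.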

What are some of the common applications of generative AI?

-Common applications include text generation, code generation, image generation, video creation, and generating 3D models, all based on the patterns learned from input data.

What is prompt design in generative AI?

-A prompt is a short piece of text given to a large language model as input, and it can be used to control the model's output. Prompt design is the process of crafting a prompt that guides the model toward the desired content.
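
One common prompt-design pattern is the few-shot prompt: a clear instruction, a couple of worked examples, and then the new input. The sketch below only assembles such a prompt as a string; it does not call any model or API. The `build_prompt` helper and the "Input:"/"Output:" layout are hypothetical conventions chosen for illustration, not a format required by Gemini, PaLM, or any other specific model.

```python
def build_prompt(instruction, examples, user_input):
    """Assemble a few-shot prompt: instruction, worked examples, then the new input.

    The "Input:"/"Output:" labels are one common convention (an assumption here),
    not a format mandated by any particular model.
    """
    lines = [instruction.strip(), ""]
    for example_input, example_output in examples:
        lines += [f"Input: {example_input}", f"Output: {example_output}", ""]
    # End with an open "Output:" so the model's continuation is the answer.
    lines += [f"Input: {user_input}", "Output:"]
    return "\n".join(lines)

prompt = build_prompt(
    "Classify the sentiment of each review as positive or negative. Answer with one word.",
    [("The battery lasts all day.", "positive"),
     ("It broke after a week.", "negative")],
    "Setup was quick and painless.",
)
print(prompt)
```

The instruction constrains the answer format, and the examples show the model the expected pattern; changing either one is the main lever prompt design gives you over the output.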

What tools does Google Cloud offer to help with generative AI development?

-Google Cloud offers tools like Vertex AI Studio for model exploration and customization, Vertex AI Search and Conversation for building chatbots and search engines, and the PaLM API for experimenting with large language models.
