Genius Machine Learning Advice for 11 Minutes Straight

Summary

TLDR: The speaker cautions against blindly collecting more data in machine learning and suggests that testing and modifying the algorithm is often more effective. They highlight the need for systematic debugging and the value of hands-on learning in programming. The speaker advocates sustained effort over time, stresses understanding one's own learning style, and endorses the '10,000-hour rule' for building expertise. They also discuss the maturation of AI, the importance of creating over consuming, and the power of deep learning despite initial skepticism. The talk concludes with advice for young people to find their passions and understand themselves, and with remarks on the potential of cost functions in machine learning.

Takeaways

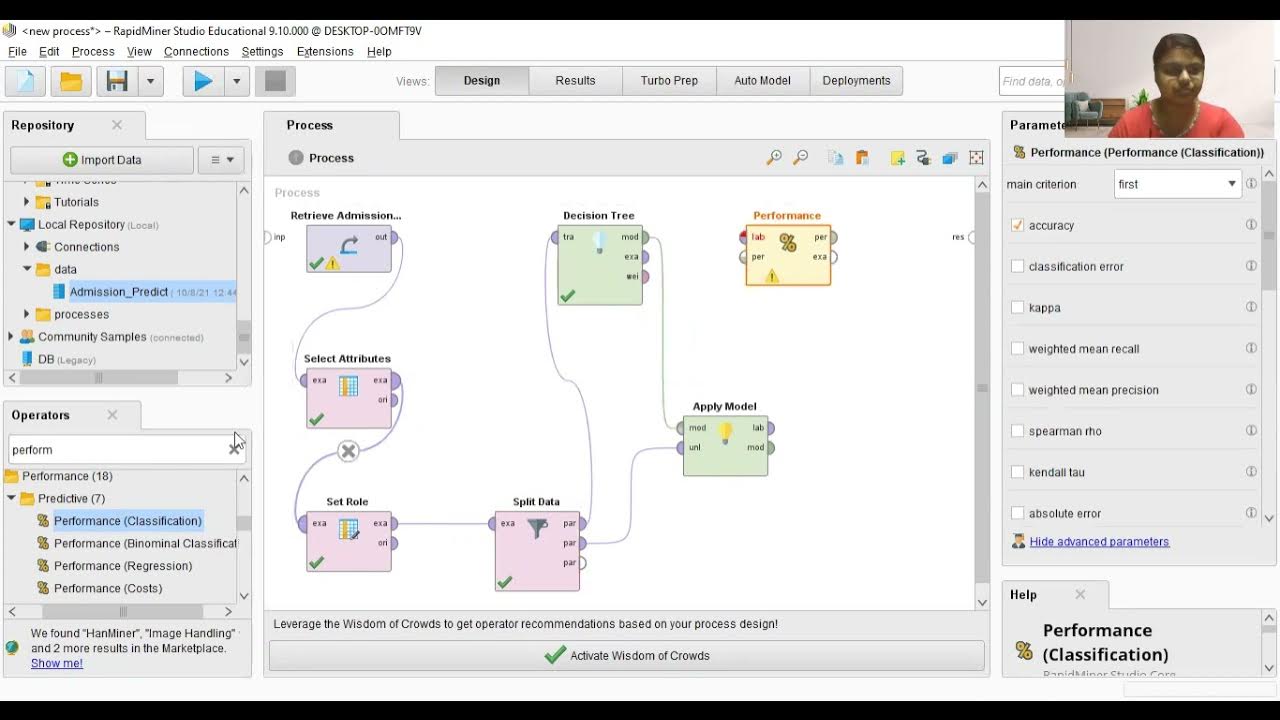

- 🔍 **Efficient Debugging**: The speaker emphasizes the importance of quickly identifying when collecting more data isn't the solution to a problem, suggesting that modifying the architecture or trying different approaches can be more effective.

- 🚀 **Expertise in Machine Learning**: Being adept at debugging machine learning algorithms can significantly speed up the process of getting a model to work, with experts being 10x to 100x faster than others.

- 🤔 **Systematic Problem Solving**: When faced with issues in machine learning, it's crucial to ask the right questions and systematically try different solutions like changing the architecture, adding more data, or adjusting the optimization algorithm.

- 💡 **Learning by Doing**: The speaker advocates learning programming through hands-on experience and urges learners not to be afraid of getting their hands dirty when trying to solve problems.

- 📚 **Deep Understanding Through Struggle**: The speaker encourages spending time struggling with a problem rather than immediately searching for the answer, since quick searches can short-circuit the learning process.

- 📈 **Consistent Effort Over Time**: Success in fields like programming and machine learning comes from consistent effort over a long period, not from sporadic all-nighters or bursts of work.

- 📝 **Handwritten Notes for Retention**: The act of taking handwritten notes is recommended for better knowledge retention and understanding, as it requires recoding information in one's own words.

- 🤖 **Practical Application of AI**: When considering the application of AI, start with a specific problem rather than a general desire to use the technology, ensuring that the solution is targeted and effective.

- 🌟 **The Value of Creation**: The speaker promotes the idea of creation over consumption or redistribution, arguing that creating something new is more satisfying and fulfilling.

- 🧠 **Long-term Learning and Passion**: The speaker encourages focusing on long-term learning and finding one's true passions, which can lead to deeper understanding and more significant contributions to a field.

Q & A

Why do some engineers spend six months on a direction that might not be fruitful?

-Some engineers spend six months on a direction like collecting more data because they believe more data is valuable. However, they might not realize that the problem at hand might not benefit from more data, and a different approach, such as modifying the architecture, could be more effective.
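One common way to check whether more data is likely to help, before spending months collecting it, is to plot a learning curve: train on growing subsets and watch the validation error. The sketch below is an illustration only, not something shown in the talk. The helper `learning_curve` and the toy data (a straight-line model fit to quadratic data) are hypothetical. If validation error stays flat as the training set grows, the model family is probably the bottleneck and more data is unlikely to fix it.

```python
import numpy as np

def learning_curve(fit, predict, X_train, y_train, X_val, y_val, sizes):
    """Return (size, train_mse, val_mse) for models trained on growing subsets."""
    rows = []
    for n in sizes:
        model = fit(X_train[:n], y_train[:n])
        train_mse = float(np.mean((predict(model, X_train[:n]) - y_train[:n]) ** 2))
        val_mse = float(np.mean((predict(model, X_val) - y_val) ** 2))
        rows.append((n, train_mse, val_mse))
    return rows

def fit_line(x, t):
    # Deliberately too simple for the data: a degree-1 polynomial.
    return np.polyfit(x, t, deg=1)

def predict_poly(coeffs, x):
    return np.polyval(coeffs, x)

# Hypothetical toy data: quadratic signal plus a little noise.
rng = np.random.default_rng(0)
X = rng.uniform(-3, 3, size=2000)
y = X ** 2 + rng.normal(0, 0.1, size=2000)
X_train, y_train = X[:1500], y[:1500]
X_val, y_val = X[1500:], y[1500:]

curve = learning_curve(fit_line, predict_poly, X_train, y_train,
                       X_val, y_val, sizes=[50, 200, 800, 1500])
for n, train_mse, val_mse in curve:
    print(f"n={n:5d}  train_mse={train_mse:.2f}  val_mse={val_mse:.2f}")
```

In this toy setup the validation error should barely move as `n` grows, which points to changing the model (here, allowing a quadratic term) rather than gathering more samples.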

How can one become proficient at debugging machine learning algorithms?

-Becoming proficient at debugging machine learning algorithms involves systematic questioning, such as why a model isn't working and what changes could be made, like altering the architecture, adding more data, adjusting regularization, or changing the optimization algorithm.
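The questions above can be turned into a rough triage rule based on training and validation error. This is a minimal sketch of a widely taught bias/variance heuristic, not the speaker's own procedure. The function name `suggest_next_step`, the `gap_tolerance` default, and the wording of the suggestions are all assumptions made for illustration.

```python
def suggest_next_step(train_error, val_error, target_error, gap_tolerance=0.02):
    """Map train/validation error to a candidate fix (heuristic, hypothetical thresholds)."""
    if train_error > target_error + gap_tolerance:
        # The model cannot even fit the training set well.
        return ("underfitting: try a different or larger architecture, "
                "or a different optimization algorithm")
    if val_error - train_error > gap_tolerance:
        # The model fits training data but does not generalize.
        return "overfitting: try more data or stronger regularization"
    return "near target: evaluate on held-out test data before changing anything"

print(suggest_next_step(train_error=0.15, val_error=0.16, target_error=0.05))
print(suggest_next_step(train_error=0.04, val_error=0.12, target_error=0.05))
print(suggest_next_step(train_error=0.05, val_error=0.06, target_error=0.05))
```

The point of a rule like this is not the exact thresholds but the habit of measuring first, so that "collect more data" is chosen only when the numbers suggest it.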

What is the best way to learn programming according to the transcript?

-The best way to learn programming is by doing it, not just watching videos. One should start a project, face challenges, and try to solve them without immediately seeking answers online, which allows for deeper learning and understanding.

What does 'getting your hands dirty' mean in the context of learning programming?

-'Getting your hands dirty' means spending time deeply engaged in trying to solve problems on your own, such as debugging a network that isn't converging, without immediately turning to Google for answers.

Why is sustained effort over time more valuable than sporadic all-nighters?

-Sustained effort over time is more valuable because it allows for consistent progress and learning, whereas all-nighters are not sustainable and can lead to burnout. Regular engagement with the material leads to better retention and understanding.

How does the '10,000 hours' theory apply to becoming an expert in a field?

-The '10,000 hours' theory suggests that spending a significant amount of time (10,000 hours) deliberately practicing and working on something can lead to expertise. It emphasizes the importance of consistent effort and time investment over natural talent.

What is the benefit of taking handwritten notes while studying?

-Taking handwritten notes increases retention by forcing you to recode the knowledge in your own words, which promotes long-term retention and understanding.

Why should one consider creating over consuming or redistributing?

-Creating is more satisfying and fulfilling than consuming or redistributing. It allows for the development of new ideas and innovations, which can have a more significant and lasting impact.

What advice is given for those interested in a career in AI?

-For those interested in a career in AI, the advice is to start with a clear goal of what you want to achieve with AI, such as creating a machine that can perform a task not currently possible, and then work backward to identify the steps and research needed to achieve it.

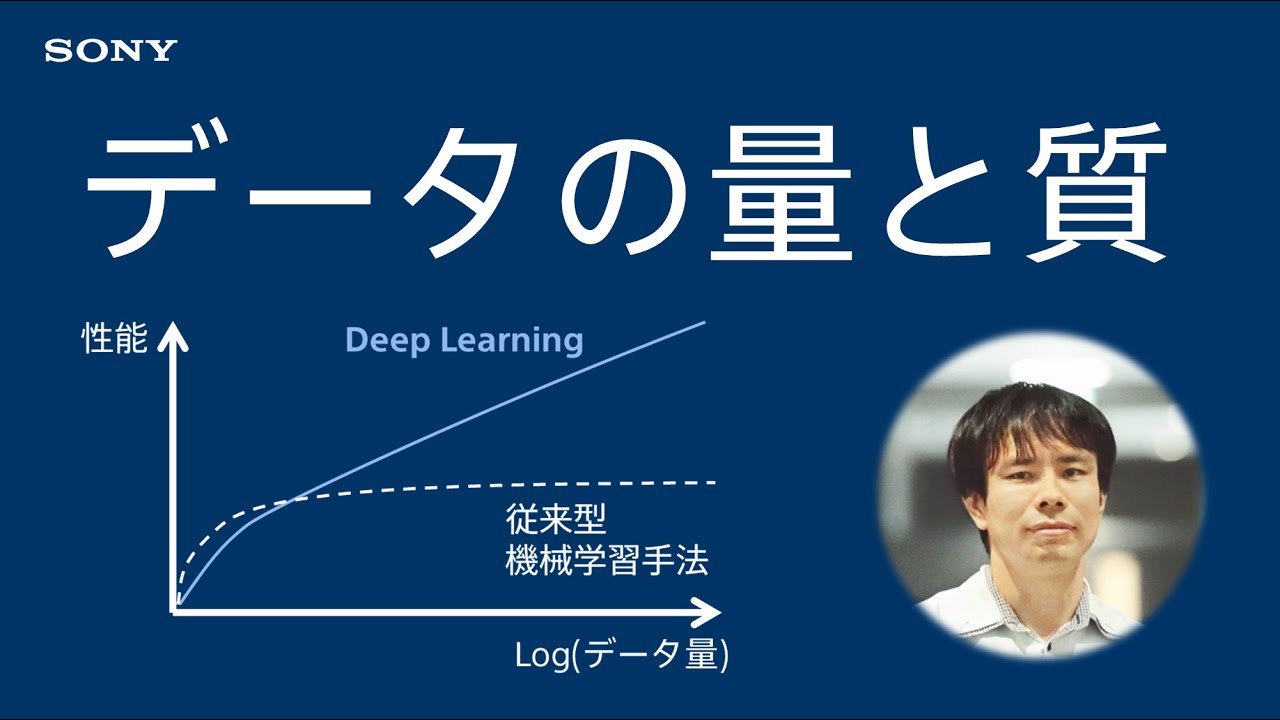

How has the perception of large neural networks changed over time?

-Initially, large neural networks were underestimated and not believed to be trainable. However, with the availability of large amounts of supervised data and computational power, along with the conviction that they could work, they have become successful and are now a cornerstone of deep learning.

Why are cost functions important in machine learning?

-Cost functions are important in machine learning because they provide a measurable way to assess the performance of a system. They allow for the optimization of models by minimizing or maximizing a specific measure, which is crucial for training and improving machine learning algorithms.
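As a concrete illustration of "minimizing a specific measure", the sketch below defines a mean squared error cost for a one-variable linear model and reduces it with plain gradient descent. It is an assumed example, not taken from the talk. The names `mse_cost` and `gradient_step`, the toy data, and the learning rate are hypothetical choices.

```python
import numpy as np

def mse_cost(w, b, x, y):
    """Mean squared error of the linear model w * x + b against targets y."""
    return float(np.mean((w * x + b - y) ** 2))

def gradient_step(w, b, x, y, lr):
    """One gradient descent update on the MSE cost."""
    err = w * x + b - y
    grad_w = 2.0 * np.mean(err * x)  # d(cost)/dw
    grad_b = 2.0 * np.mean(err)      # d(cost)/db
    return w - lr * grad_w, b - lr * grad_b

# Toy data generated from y = 2x + 1 with no noise.
x = np.array([0.0, 1.0, 2.0, 3.0])
y = 2.0 * x + 1.0

w, b = 0.0, 0.0
initial_cost = mse_cost(w, b, x, y)
for _ in range(500):
    w, b = gradient_step(w, b, x, y, lr=0.05)
final_cost = mse_cost(w, b, x, y)

print(f"cost: {initial_cost:.3f} -> {final_cost:.6f}, w={w:.3f}, b={b:.3f}")
```

Because the cost is a single number, progress is easy to track: each update either lowers it or signals that the step size or model needs to change.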
