Coding with OpenAI o1

Summary

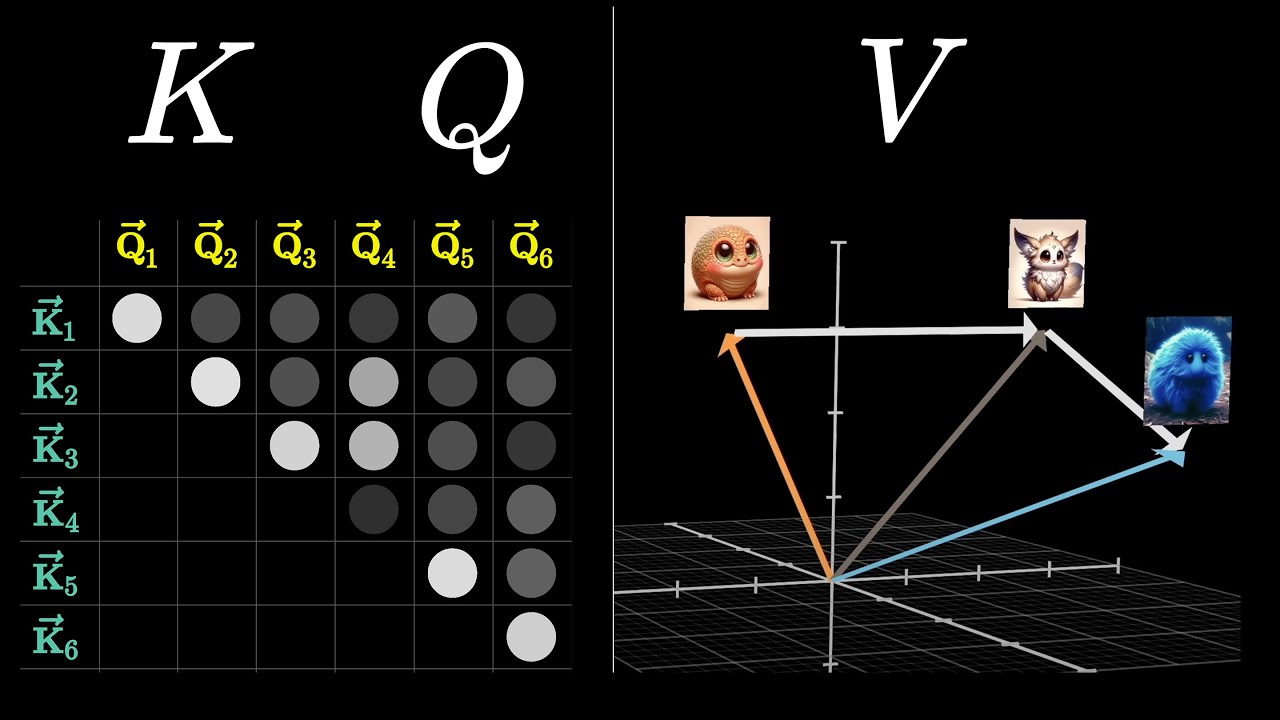

TLDR: In this video, the presenter describes using OpenAI's new o1-preview model to build an interactive visualization of the self-attention mechanism in Transformers, the architecture behind models like GPT-3. The presenter lacked the skills to build such a visualization on their own, but o1-preview worked carefully through the requirements and produced code for a tool that visualizes the relationships between the words of a sentence such as 'the quick brown fox'. Hovering over a word dynamically shows its attention scores, which makes the tool a useful resource for the presenter's teaching sessions on Transformers.

Takeaways

- 💡 The speaker is showcasing a code example for visualizing the self-attention mechanism in Transformers, which is a technology behind models like GPT.

- 📚 The speaker teaches a class on Transformers and wanted to visualize self-attention to better explain the concept to students.

- 🤖 The speaker admits lacking the skills to build such a visualization and turns to a new model, o1-preview, for help.

- 💻 The speaker submits a prompt and lets the model think before it answers; this built-in reasoning step sets o1-preview apart from earlier models such as GPT-4o.

- 🔍 The speaker provides specific requirements for the visualization, such as using the example sentence 'the quick brown fox' and visualizing attention scores with varying edge thicknesses.

- 📈 The visualization is interactive: hovering over a token displays its edges and attention scores.

- 🛠️ The speaker pastes the code produced by the model into a text editor, jokingly introduced as "the editor of 2024", and runs it.

- 🌐 The resulting visualization is web-based and is viewed in a browser.

- 🔧 Apart from a minor rendering issue where some elements overlap, the speaker is satisfied with the model's output and finds it useful.

- 🎓 The speaker plans to use this visualization tool in teaching sessions, highlighting its potential educational value.
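
The hover behaviour described in these takeaways can be sketched as a lookup over an attention matrix. This is a minimal Python sketch, not the code o1-preview generated in the video: the matrix values are made up, the names `ATTENTION`, `edges_for_hover`, `min_px` and `max_px` are hypothetical, and it assumes edge thickness grows linearly with the attention score.

```python
# Tokens from the example sentence used in the video.
TOKENS = ["the", "quick", "brown", "fox"]

# Hypothetical attention matrix (made-up values): ATTENTION[i][j] is how much
# token i attends to token j. Each row sums to 1, as softmax outputs do.
ATTENTION = [
    [0.40, 0.20, 0.15, 0.25],
    [0.10, 0.50, 0.25, 0.15],
    [0.05, 0.30, 0.45, 0.20],
    [0.20, 0.25, 0.30, 0.25],
]


def edges_for_hover(hovered, attention, min_px=1.0, max_px=8.0):
    """Return (source, target, score, width_px) for each edge leaving the hovered token.

    Assumption: width is linear in the score, mapping 0 -> min_px and 1 -> max_px.
    """
    edges = []
    for target, score in enumerate(attention[hovered]):
        if target == hovered:
            continue  # skip the self-edge; a real tool might draw it as a loop instead
        width = min_px + score * (max_px - min_px)
        edges.append((hovered, target, score, round(width, 2)))
    return edges


if __name__ == "__main__":
    # Simulate hovering over "fox" (index 3).
    for src, dst, score, px in edges_for_hover(3, ATTENTION):
        print(f"{TOKENS[src]} -> {TOKENS[dst]}: score={score:.2f}, width={px}px")
```

With these made-up numbers, hovering over "fox" yields three edges, and the thickest one points to "brown" (score 0.30). A linear mapping keeps the visual ordering of edges identical to the ordering of scores, which is what a reader of the visualization relies on.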

Q & A

What is the main topic of the video script?

-The main topic of the video script is the visualization of a self-attention mechanism in Transformers, a technology behind models like GPT, using an interactive component.

Why is visualizing self-attention important for understanding Transformers?

-Visualizing self-attention is important because it helps to understand how Transformers model the relationship between words in a sentence, which is crucial for tasks like language translation or text summarization.
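
Concretely, the relationship between words is captured as a matrix of attention weights. In the standard scaled dot-product formulation, each query vector is compared with every key vector, the dot products are divided by √d, and a softmax turns each row into weights that sum to 1. The sketch below uses plain Python and made-up 2-dimensional vectors; reusing the same vectors as queries and keys is a simplifying assumption, since a trained Transformer learns separate query, key and value projections (the value vectors are omitted because visualizing the weights does not need them).

```python
import math


def softmax(xs):
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention_weights(queries, keys):
    """Scaled dot-product attention weights, softmax(Q K^T / sqrt(d)), one row per query."""
    d = len(keys[0])
    scale = 1.0 / math.sqrt(d)
    weights = []
    for q in queries:
        scores = [scale * sum(qi * ki for qi, ki in zip(q, k)) for k in keys]
        weights.append(softmax(scores))
    return weights


# Toy 2-d vectors for "the quick brown fox" (made up). A real model learns
# separate query/key projections; here queries and keys are the same vectors.
TOKENS = ["the", "quick", "brown", "fox"]
VECTORS = [[0.1, 0.0], [0.9, 0.3], [0.8, 0.5], [0.2, 1.0]]

if __name__ == "__main__":
    for token, row in zip(TOKENS, self_attention_weights(VECTORS, VECTORS)):
        print(token, [round(w, 2) for w in row])
```

Each printed row is one word's attention distribution over the sentence, which is exactly the data an interactive visualization draws as edges.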

What is the example sentence used in the script to demonstrate the visualization?

-The example sentence used is 'the quick brown fox', taken from the pangram "The quick brown fox jumps over the lazy dog", which contains every letter of the alphabet.

What is the interactive component mentioned in the script?

-The interactive component is the ability to hover over a word token in the visualization, which then displays edges with thicknesses proportional to the attention scores between words.
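
The display side can be sketched as a small SVG generator: words sit on a baseline, and arcs run from the hovered word to every other word with a stroke width proportional to the attention weight. This is an illustrative Python sketch, not the code the model produced in the video; the function name `render_hover_svg`, the layout constants and the attention values are all assumptions.

```python
from xml.sax.saxutils import escape


def render_hover_svg(tokens, weights_row, hovered, max_width=8.0):
    """Return an SVG string with arcs from the hovered token to every other token.

    Stroke width is proportional to the attention weight (weight * max_width).
    """
    spacing, baseline = 100, 150
    xs = [50 + i * spacing for i in range(len(tokens))]
    parts = [f'<svg xmlns="http://www.w3.org/2000/svg" width="{xs[-1] + 50}" height="180">']
    for j, weight in enumerate(weights_row):
        if j == hovered:
            continue  # no arc from a word to itself
        x0, x1 = xs[hovered], xs[j]
        # Quadratic Bezier arc above the words; longer arcs rise higher so they overlap less.
        ctrl_y = baseline - 20 - abs(x1 - x0) / 3
        parts.append(
            f'<path d="M {x0} {baseline - 15} Q {(x0 + x1) / 2} {ctrl_y} {x1} {baseline - 15}" '
            f'fill="none" stroke="steelblue" stroke-width="{weight * max_width:.2f}"/>'
        )
    for x, token in zip(xs, tokens):
        parts.append(f'<text x="{x}" y="{baseline}" text-anchor="middle">{escape(token)}</text>')
    parts.append("</svg>")
    return "\n".join(parts)


if __name__ == "__main__":
    # Made-up attention row for hovering over "fox"; save the output as .svg to view it in a browser.
    print(render_hover_svg(["the", "quick", "brown", "fox"], [0.20, 0.25, 0.30, 0.25], hovered=3))
```

In an interactive page, a hover handler would swap in this markup for whichever word is under the pointer. Drawing arcs instead of straight lines keeps edges between words on the same baseline from lying on top of one another, the kind of overlap problem mentioned in the takeaways.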

How does the new o1-preview model help in creating the visualization?

-o1-preview works carefully through the requirements and generates code that builds the visualization, including its interactive components.

What is a common failure mode of existing models when given many instructions?

-A common failure mode is that existing models may miss one or more instructions when given too many at once, similar to how humans can overlook details when presented with complex tasks.

How does o1-preview reduce the chance of missing instructions?

-o1-preview thinks slowly and carefully, working through each requirement in depth before generating the output code.

What editor does the speaker use to implement the visualization code?

-The speaker pastes the code into a text editor, which they jokingly introduce as "the editor of 2024", and runs the visualization from there.

What is the outcome when the speaker hovers over a word in the visualization?

-When hovering over a word, the visualization shows arrows representing the edges between words, with thicknesses indicating the strength of the attention scores between them.

What is the speaker's overall assessment of o1-preview's performance in creating the visualization?

-The speaker is pleased with o1-preview's performance, noting that it produced a correct and useful visualization that will benefit their teaching sessions.
