Assumptions of Linear Regression | What are the assumptions for a linear regression model

Summary

TLDR: In this video, Aman, a data scientist, walks through the key assumptions of a basic linear regression model, a common topic in data science interviews. He covers five main assumptions: a linear relationship between the target and the predictors, low or no multicollinearity, errors that behave randomly with constant variance (no heteroskedasticity), no autocorrelation between errors, and normally distributed errors. He also touches on the independence of observations. Aman explains why these assumptions matter and how to check them using plots such as scatter plots and QQ plots. The video is aimed at beginners in data science.

Takeaways

- Linear regression is one of the most fundamental models in data science, and its assumptions are a common interview topic.

- Assumption 1: There must be a linear relationship between the target variable (y) and the independent variables (x1, x2, etc.).

- Assumption 2: There should be little or no multicollinearity, meaning that the independent variables should not be highly correlated with each other.

- Multicollinearity is problematic because it inflates the variance of the coefficient estimates, which makes them unstable and makes the individual relationships in the model hard to interpret.

- Assumption 3: The errors (residuals) in the regression model should not show heteroskedasticity, meaning the variance of the errors should be constant across all levels of the independent variables.

- Heteroskedasticity is indicated when the residual plot forms a funnel shape, which is a sign of non-constant variance.

- Assumption 4: There should be no autocorrelation between the errors. Errors should be independent of each other.

- Autocorrelation suggests that the model is not capturing a pattern in the data, and it can lead to incorrect conclusions.

- Assumption 5: The error terms should be normally distributed. This matters mainly for the validity of hypothesis tests and confidence intervals on the coefficients.

- In addition to these assumptions, all observations should be independent of each other, which is also a key assumption of linear regression models.

- To check these assumptions, diagnostic plots such as QQ plots (for normality) and scatter plots (for linearity and homoscedasticity) can be useful.

Q & A

What are the key assumptions of a basic linear regression model?

-The key assumptions of a basic linear regression model include: 1) A linear relationship between the target variable and independent variables, 2) Low or no multicollinearity, 3) No heteroskedasticity in the error terms, 4) No autocorrelation between the errors, and 5) The errors should be normally distributed. Additionally, the observations should be independent of each other.

Why is it important for there to be a linear relationship between the target variable and predictors in linear regression?

-A linear relationship is essential because linear regression assumes that the target variable can be predicted by a straight-line relationship with the independent variables. If this relationship is not linear, the model will not perform well and may lead to inaccurate predictions.
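
A minimal sketch of one way to test this numerically, as a complement to eyeballing a scatter plot: fit a straight line, then see whether the residuals still follow the squared, centred predictor. The data, the helper names (`fit_line`, `pearson`, `residuals`) and the choice of a squared term as the curvature signal are all assumptions made for illustration; none of them come from the video.

```python
from math import sqrt


def fit_line(x, y):
    """Least-squares fit of y = intercept + slope * x for a single predictor."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


def pearson(a, b):
    """Pearson correlation between two equally long sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((ai - mean_a) * (bi - mean_b) for ai, bi in zip(a, b))
    var_a = sum((ai - mean_a) ** 2 for ai in a)
    var_b = sum((bi - mean_b) ** 2 for bi in b)
    return cov / sqrt(var_a * var_b)


def residuals(x, y):
    """Observed y minus the straight-line prediction."""
    intercept, slope = fit_line(x, y)
    return [yi - (intercept + slope * xi) for xi, yi in zip(x, y)]


# Illustrative data: one truly linear target, one quadratic target.
x = [float(i) for i in range(1, 21)]
wiggle = [0.5 if i % 2 else -0.5 for i in range(len(x))]
y_linear = [2.0 + 3.0 * xi + w for xi, w in zip(x, wiggle)]
y_curved = [2.0 + 0.5 * xi ** 2 for xi in x]

# If the relationship is linear, residuals should not track this curvature term.
mean_x = sum(x) / len(x)
curvature = [(xi - mean_x) ** 2 for xi in x]

corr_linear = pearson(residuals(x, y_linear), curvature)
corr_curved = pearson(residuals(x, y_curved), curvature)
print(f"linear target: {corr_linear:.3f}, curved target: {corr_curved:.3f}")
```

A correlation near zero is consistent with a straight-line relationship, while a value near one means the line is missing curvature. A plot of residuals against the predictor shows the same thing visually.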

What is multicollinearity, and why is it problematic in linear regression?

-Multicollinearity occurs when independent variables are highly correlated with each other. It causes problems in linear regression because it becomes difficult to isolate the individual effect of each independent variable on the target variable. The coefficient estimates get inflated standard errors and can swing widely with small changes in the data, which makes their interpretation unreliable.
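
One common way to put a number on this is the variance inflation factor (VIF), which the summary itself does not mention, so treat it as an illustrative addition. The sketch below covers only the two-predictor case, where the VIF reduces to 1 / (1 - r²) with r the correlation between the two predictors. The data and the function names are hypothetical.

```python
from math import sqrt


def pearson(a, b):
    """Pearson correlation between two equally long sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((ai - mean_a) * (bi - mean_b) for ai, bi in zip(a, b))
    var_a = sum((ai - mean_a) ** 2 for ai in a)
    var_b = sum((bi - mean_b) ** 2 for bi in b)
    return cov / sqrt(var_a * var_b)


def vif_two_predictors(x1, x2):
    """VIF when the model has exactly two predictors: 1 / (1 - r^2)."""
    r = pearson(x1, x2)
    return 1.0 / (1.0 - r * r)


# Illustrative predictors (made up for this example).
x1 = [1, 2, 3, 4, 5, 6, 7, 8]
x2_related = [2.1, 3.9, 6.2, 8.0, 9.8, 12.1, 14.0, 16.2]  # roughly 2 * x1
x2_unrelated = [5, 1, 4, 2, 2, 4, 1, 5]                    # no linear link to x1

vif_high = vif_two_predictors(x1, x2_related)
vif_low = vif_two_predictors(x1, x2_unrelated)
print(f"related pair: {vif_high:.1f}, unrelated pair: {vif_low:.2f}")
```

A VIF of 1 means no inflation. A frequently quoted rule of thumb treats values above roughly 5 to 10 as a warning sign. With more than two predictors, each VIF comes from regressing one predictor on all the others, and a library routine such as `variance_inflation_factor` in statsmodels is the usual choice.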

What is heteroskedasticity, and how does it affect a linear regression model?

-Heteroskedasticity refers to the situation where the variance of the error terms is not constant across all levels of the independent variables. The coefficient estimates themselves remain unbiased, but they are no longer the most efficient ones, and the usual standard errors are wrong, so hypothesis tests and confidence intervals become unreliable. The errors should show random behavior and not be related to the independent variables.

What is autocorrelation in the context of linear regression, and why should it be avoided?

-Autocorrelation refers to the correlation between the error terms (e.g., e1 is correlated with e2). This is problematic because if errors are correlated, it suggests that the model is not capturing the underlying data pattern, leading to inefficient estimates and less reliable predictions.
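
A small sketch of the Durbin-Watson statistic, a standard check for first-order autocorrelation in residuals. It is the sum of squared differences between consecutive residuals divided by the sum of squared residuals. The two residual series below are made up for illustration and assume the residuals are ordered in time.

```python
def durbin_watson(residuals):
    """Durbin-Watson statistic: sum((e_t - e_{t-1})^2) / sum(e_t^2)."""
    numerator = sum(
        (residuals[t] - residuals[t - 1]) ** 2 for t in range(1, len(residuals))
    )
    denominator = sum(e ** 2 for e in residuals)
    return numerator / denominator


# Hypothetical residuals, listed in time order.
drifting = [-2, -2, -1, -1, 1, 1, 2, 2]      # neighbours look alike
alternating = [1, -1, 1, -1, 1, -1, 1, -1]   # neighbours flip sign

dw_drifting = durbin_watson(drifting)        # 6 / 20 = 0.3
dw_alternating = durbin_watson(alternating)  # 28 / 8 = 3.5
print(dw_drifting, dw_alternating)
```

The statistic ranges from 0 to 4. Values near 2 are consistent with no first-order autocorrelation, values well below 2 point to positive autocorrelation, and values well above 2 point to negative autocorrelation.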

How can you check for heteroskedasticity in a linear regression model?

-You can check for heteroskedasticity by plotting the residuals (errors) against the predicted values. In a well-behaved model, the residuals should display a random scatter. If the plot shows a funnel-shaped pattern, indicating increasing or decreasing variance, this suggests heteroskedasticity.
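
The funnel shape can also be approximated with a number. The sketch below, a simplified check in the spirit of the Goldfeld-Quandt test rather than the formal test itself, fits a line, orders the residuals by fitted value, and compares the residual sum of squares in the upper half with the lower half. The data and the names `fit_line` and `spread_ratio` are assumptions made for illustration.

```python
def fit_line(x, y):
    """Least-squares fit of y = intercept + slope * x for a single predictor."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


def spread_ratio(x, y):
    """Residual sum of squares for high fitted values over that for low ones."""
    intercept, slope = fit_line(x, y)
    fitted = [intercept + slope * xi for xi in x]
    resid = [yi - fi for yi, fi in zip(y, fitted)]
    order = sorted(range(len(x)), key=lambda i: fitted[i])
    half = len(order) // 2
    low = sum(resid[i] ** 2 for i in order[:half])
    high = sum(resid[i] ** 2 for i in order[half:])
    return high / low


# Illustrative data: same trend, different error behaviour.
x = [float(i) for i in range(1, 21)]
signs = [1 if i % 2 else -1 for i in range(len(x))]
y_constant = [1.0 + 2.0 * xi + s * 1.0 for xi, s in zip(x, signs)]      # even spread
y_funnel = [1.0 + 2.0 * xi + s * 0.2 * xi for xi, s in zip(x, signs)]   # spread grows with x

ratio_constant = spread_ratio(x, y_constant)
ratio_funnel = spread_ratio(x, y_funnel)
print(f"constant spread: {ratio_constant:.2f}, funnel: {ratio_funnel:.2f}")
```

A ratio close to 1 matches a random, even scatter. A ratio far from 1 in either direction suggests the variance changes with the fitted value, which is what the funnel in the plot shows.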

What is the importance of ensuring that error terms are normally distributed in a linear regression model?

-Normality of the error terms matters mainly for inference. Hypothesis tests such as t-tests and F-tests, and the confidence intervals built from them, rely on normally distributed errors to be valid, especially in small samples. The coefficient estimates themselves do not need normal errors to be unbiased.

What tools or plots can be used to check the normality of error terms in linear regression?

-A QQ plot (quantile-quantile plot) is commonly used to check for the normality of error terms. It plots the quantiles of the residuals against the quantiles of a theoretical normal distribution. If the points lie close to a straight line, the residuals are approximately normally distributed.
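
A minimal sketch of what a QQ plot computes, using only the Python standard library. It pairs sorted, standardised residuals with normal quantiles and uses the correlation between the two as a rough measure of how straight the plot is. The residuals are simulated with a fixed seed, and the plotting positions `(i + 0.5) / n` are one common convention among several, so both are assumptions.

```python
import random
from math import sqrt
from statistics import NormalDist, mean, stdev


def qq_points(sample):
    """Return (theoretical normal quantiles, sorted standardised sample)."""
    n = len(sample)
    centre, scale = mean(sample), stdev(sample)
    observed = sorted((value - centre) / scale for value in sample)
    standard_normal = NormalDist()
    theoretical = [standard_normal.inv_cdf((i + 0.5) / n) for i in range(n)]
    return theoretical, observed


def pearson(a, b):
    """Pearson correlation between two equally long sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((ai - mean_a) * (bi - mean_b) for ai, bi in zip(a, b))
    var_a = sum((ai - mean_a) ** 2 for ai in a)
    var_b = sum((bi - mean_b) ** 2 for bi in b)
    return cov / sqrt(var_a * var_b)


# Simulated residuals (not from the video): one normal set, one right-skewed set.
random.seed(0)
normal_resid = [random.gauss(0, 1) for _ in range(200)]
skewed_resid = [random.expovariate(1.0) for _ in range(200)]

r_normal = pearson(*qq_points(normal_resid))
r_skewed = pearson(*qq_points(skewed_resid))
print(f"normal residuals: {r_normal:.3f}, skewed residuals: {r_skewed:.3f}")
```

The closer the correlation is to 1, the closer the QQ points sit to a straight line. For an actual plot, the two lists returned by `qq_points` can be passed to any scatter plot routine, or a ready-made function such as `scipy.stats.probplot` can be used.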

Why is it necessary for the observations to be independent in a linear regression model?

-Independence of observations is important because correlated observations carry less information than the same number of independent ones. The model then tends to understate the uncertainty of its coefficients, so standard errors look too small and results can appear significant when they are not, leading to incorrect conclusions and predictions.

What are some of the diagnostic checks you can perform after fitting a linear regression model?

-After fitting a linear regression model, you can perform diagnostic checks like examining residual plots for heteroskedasticity, using a QQ plot to check for normality of errors, and using pairwise scatter plots of the predictors to spot multicollinearity. Additionally, you can check for autocorrelation of errors using tests like the Durbin-Watson test.

Outlines

Cette section est réservée aux utilisateurs payants. Améliorez votre compte pour accéder à cette section.

Améliorer maintenantMindmap

Cette section est réservée aux utilisateurs payants. Améliorez votre compte pour accéder à cette section.

Améliorer maintenantKeywords

Cette section est réservée aux utilisateurs payants. Améliorez votre compte pour accéder à cette section.

Améliorer maintenantHighlights

Cette section est réservée aux utilisateurs payants. Améliorez votre compte pour accéder à cette section.

Améliorer maintenantTranscripts

Cette section est réservée aux utilisateurs payants. Améliorez votre compte pour accéder à cette section.

Améliorer maintenantVoir Plus de Vidéos Connexes

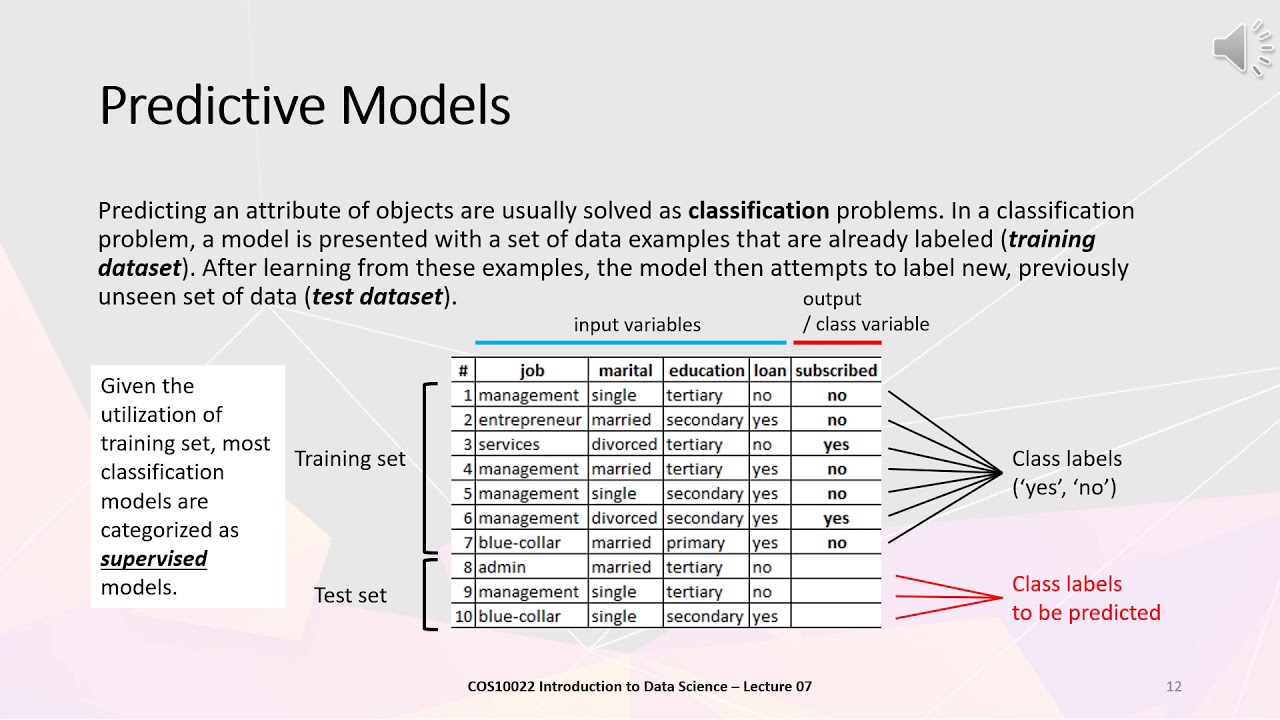

COS10022 Linear Regression (Lecture)

Regresi Linear Sederhana dengan Ordinary Least Square

10 Curve Fitting Part2 NUMERIK

What are the main Assumptions of Linear Regression? | Top 5 Assumptions of Linear Regression

Simple Linear Regression: An Easy and Clear Beginner’s Guide

KULIAH STATISTIK - ANALISIS REGRESI

5.0 / 5 (0 votes)