26 ChatGPT prompts: +50% quality ✅

Summary

TLDR: The video discusses prompt engineering and prompt design for large language models such as GPT, Gemini, and Llama. It presents a study that identifies 26 techniques and tips for crafting prompts that lead to better responses. The techniques range from being direct and avoiding negative language to accounting for the audience's knowledge and dividing complex tasks into simpler instructions. The video stresses clear objectives, examples, and structured prompts as ways to draw on the model's latent knowledge and obtain accurate, detailed answers.

Takeaways

- 🤖 The effectiveness of large language models such as GPT, Gemini, and Llama depends on the quality of the prompts used to interact with them.

- 🔍 Prompt engineering and design are crucial fields of study to optimize interactions with AI models.

- 📈 A well-conducted study identified 26 techniques or tips to create better prompts leading to improved responses from AI.

- 🎯 Being direct, and skipping polite phrases like 'please' or 'thank you', can lead to more concise responses from language models.

- 🧐 Tailoring prompts to the expected audience, such as assuming the reader is an expert or a novice, can significantly alter the response.

- 📝 Breaking down complex tasks into a sequence of simpler instructions can improve the AI's performance, especially in problem-solving.

- 🤔 'Chain of thought' techniques guide the AI through a step-by-step process, which helps with complex problem-solving.

- 🚫 Avoiding negative directives and using affirmative language can help maintain clarity and avoid confusion in the AI's responses.

- 💡 Including a few worked examples (a 'few-shot' approach) shows the AI what is expected and guides the format and content of its output.

- 📋 Structuring prompts with clear sections for instructions, examples, questions, and context can enhance the AI's understanding and performance.

- 📝 Asking the AI to continue a text using specific words, phrases, or sentences that you provide can help maintain coherence and style.

- 🔧 For complex coding tasks, prompts can be structured to generate code across multiple files and create scripts for automating file creation or modifications.
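
The last takeaway (asking for a script that creates or modifies the files, rather than pasting each file by hand) can be pictured with the kind of script such a prompt might produce. This is a minimal sketch under stated assumptions: the `FILES` mapping, its paths and contents, and the `create_files` helper are hypothetical placeholders, not material from the video.

```python
from __future__ import annotations

from pathlib import Path

# Hypothetical project layout (relative path -> file contents); a model would fill this in.
FILES = {
    "app/__init__.py": "",
    "app/main.py": "def main():\n    print('hello')\n",
    "README.md": "# Demo project\n",
}


def create_files(root: Path, files: dict[str, str]) -> list[Path]:
    """Write each entry of `files` under `root`, creating parent folders as needed."""
    written = []
    for rel_path, content in files.items():
        target = root / rel_path
        target.parent.mkdir(parents=True, exist_ok=True)  # e.g. creates app/ if missing
        target.write_text(content, encoding="utf-8")  # overwrites an existing file
        written.append(target)
    return written
```

Calling `create_files(Path("my_project"), FILES)` would create `my_project/app/__init__.py`, `my_project/app/main.py`, and `my_project/README.md`. Keeping the whole layout in one data structure makes the proposed change easy to review before it touches the disk.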

Q & A

What is the main focus of the video?

-The main focus of the video is prompt engineering: it presents 26 techniques or tips for crafting prompts that lead to better responses from large language models such as GPT, Gemini, and Llama.

Why is asking the right question important when interacting with large language models?

-Asking the right question is crucial because it directly influences the quality of the responses from large language models. Properly structured questions can lead to more accurate, relevant, and concise answers by guiding the model's interpretation and focus.

What is the first principle suggested in the video for prompt engineering?

-The first principle suggested is to be direct. There is no need to be overly polite when interacting with large language models, so phrases like 'please' and 'thank you' can be dropped; simply state the question or request concisely.

How can incorporating the audience into the prompt improve responses?

-Incorporating the audience into the prompt helps tailor the response to the expected reader's knowledge level. For example, if the audience is an expert, the prompt should reflect that and avoid basic explanations, whereas if the audience is a novice, the information should be presented in a more simplified manner.
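
A minimal sketch of this idea in Python, assuming a prompt is just a string: the `with_audience` helper and the wording in `AUDIENCE_HINTS` are hypothetical illustrations, not phrasing taken from the video.

```python
# Hypothetical audience descriptions; adjust the wording to your own readers.
AUDIENCE_HINTS = {
    "expert": "The audience is an expert in the field; skip basic definitions.",
    "novice": "The audience is a beginner; explain terms simply and avoid jargon.",
}


def with_audience(task: str, audience: str) -> str:
    """Prefix a task with one sentence describing who will read the answer."""
    if audience not in AUDIENCE_HINTS:
        raise ValueError(f"unknown audience: {audience!r}")
    return f"{AUDIENCE_HINTS[audience]}\n{task}"


print(with_audience("Explain how TCP congestion control works.", "novice"))
```

Sending the same task with `"expert"` instead of `"novice"` changes only the first line, which makes it easy to compare how the audience description shifts the answer.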

What is the technique of breaking down complex activities into simpler instructions?

-The technique of breaking down complex activities into simpler instructions involves dividing a complex task into a sequence of easier steps. This method helps the language model to better understand and execute the task by following each step individually, which can lead to more accurate and actionable responses.
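
As a sketch, the decomposition can be written as a small prompt builder that states the overall goal and then lists the simpler steps in order. The `decompose` helper and the example task are hypothetical; the steps are split by hand, not generated by the method in the video.

```python
from __future__ import annotations


def decompose(goal: str, steps: list[str]) -> str:
    """Rewrite one complex request as a goal plus a numbered list of simpler steps."""
    lines = [f"Goal: {goal}", "Complete the following steps in order:"]
    lines += [f"{i}. {step}" for i, step in enumerate(steps, start=1)]
    return "\n".join(lines)


# Hypothetical example task, split by hand into three smaller instructions.
print(decompose(
    "Write a short blog post about home composting.",
    [
        "List the three main benefits of composting.",
        "Draft one paragraph for each benefit.",
        "Write a two-sentence conclusion.",
    ],
))
```

An alternative is to send each step as its own message and feed the previous answer forward; the single numbered prompt shown here is simply the most compact form.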

How can using examples in prompts enhance the responses from language models?

-Using examples in prompts provides clear guidelines and expectations for the language model, which can improve the relevance and accuracy of the responses. It helps the model understand the desired output format and the specific information to include.

What is the purpose of incorporating phrases like 'You will be penalized' in prompts?

-According to the video, phrases like 'You will be penalized' nudge the language model toward higher-quality responses, encouraging it to draw on more of what it has learned rather than returning the first, most generic answer.

What is the 'chain of thought' technique mentioned in the video?

-The 'chain of thought' technique involves structuring the prompt so that it guides the language model through a logical sequence of steps or reasoning. This can lead the model to give more detailed, step-by-step explanations, improving the clarity and depth of the response.
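
A common way to request this behaviour is to append a reasoning cue such as "Let's think step by step." (often called zero-shot chain of thought) and to ask for the final result on a labelled line, so it can be separated from the reasoning. The exact cue, the `Answer:` label, and both helper names below are assumptions for illustration; they are not quoted from the video.

```python
import re
from typing import Optional

# Assumed reasoning cue; other phrasings can work as well.
COT_CUE = "Let's think step by step."


def chain_of_thought(question: str) -> str:
    """Append a reasoning cue and ask for the final result on a separate, labelled line."""
    return f"{question.strip()}\n{COT_CUE}\nFinish with a line of the form 'Answer: <result>'."


def extract_answer(response: str) -> Optional[str]:
    """Return the text after the first line starting with 'Answer:', or None if absent."""
    match = re.search(r"^Answer:[ \t]*(.+)$", response, flags=re.MULTILINE)
    return match.group(1).strip() if match else None


# Illustrative response text, written by hand rather than produced by a model.
sample = "There are 3 boxes with 4 apples each.\n3 * 4 = 12.\nAnswer: 12"
print(extract_answer(sample))  # prints 12
```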

How can the 'few-shot learning' method be applied in prompt engineering?

-The 'few-shot learning' method in prompt engineering involves providing a small number of examples or instances before the main prompt. This helps the language model to understand the context and the type of information expected in the response, leading to more accurate and relevant outputs.
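
As a sketch, a few-shot prompt can be assembled from input/output pairs placed before the real query, so the model can infer both the task and the answer format from the pattern. The `Input:`/`Output:` labels, the sentiment task, and the `few_shot_prompt` helper are hypothetical choices for illustration.

```python
from __future__ import annotations


def few_shot_prompt(instruction: str, examples: list[tuple[str, str]], query: str) -> str:
    """Place worked input/output pairs before the real query, leaving its Output blank."""
    parts = [instruction, ""]
    for example_input, example_output in examples:
        parts.append(f"Input: {example_input}\nOutput: {example_output}\n")
    parts.append(f"Input: {query}\nOutput:")  # the model is expected to complete this line
    return "\n".join(parts)


print(few_shot_prompt(
    "Classify the sentiment of each review as positive or negative.",
    [("I loved it", "positive"), ("Total waste of money", "negative")],
    "Works exactly as advertised",
))
```

Ending the prompt on an empty `Output:` line invites the model to answer in the same short form as the examples.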

What is the significance of using clear and precise requirements in prompts?

-Using clear and precise requirements in prompts helps the language model to understand exactly what is expected, reducing the chances of misinterpretation and ensuring that the response is tailored to the specific needs of the user. It also encourages the model to provide more detailed and targeted information.

What is the advice given in the video for improving the language model's understanding of a prompt?

-The advice given is to use sectioning techniques, such as creating distinct sections for instructions, questions, and examples, and to use placeholders and markers that the language model can recognize. This helps the model to differentiate between different parts of the prompt and to focus on the relevant information for each section.
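
A minimal sketch of a sectioned prompt follows. The `###Name###` marker style and the `sectioned_prompt` helper are assumptions for illustration; any delimiter the model can recognise consistently would likely serve the same purpose.

```python
from __future__ import annotations


def sectioned_prompt(sections: dict[str, str]) -> str:
    """Render each non-empty section under a ###Name### marker, keeping the given order."""
    blocks = [
        f"###{name}###\n{body.strip()}"
        for name, body in sections.items()
        if body.strip()  # skip sections left empty
    ]
    return "\n\n".join(blocks)


print(sectioned_prompt({
    "Instruction": "Summarise the text in two sentences for a non-technical reader.",
    "Example": "Text: <article>\nSummary: <two sentences>",
    "Question": "What are the main findings?",
    "Context": "",
}))
```

Python dictionaries keep insertion order (3.7+), so the sections appear in the order they are written, and an empty `Context` is simply left out.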


Simplifying Generative AI : Explaining Tokens, Parameters, Context Windows and more.

A basic introduction to LLM | Ideas behind ChatGPT

Prompt Engineering

Context Rot: How Increasing Input Tokens Impacts LLM Performance

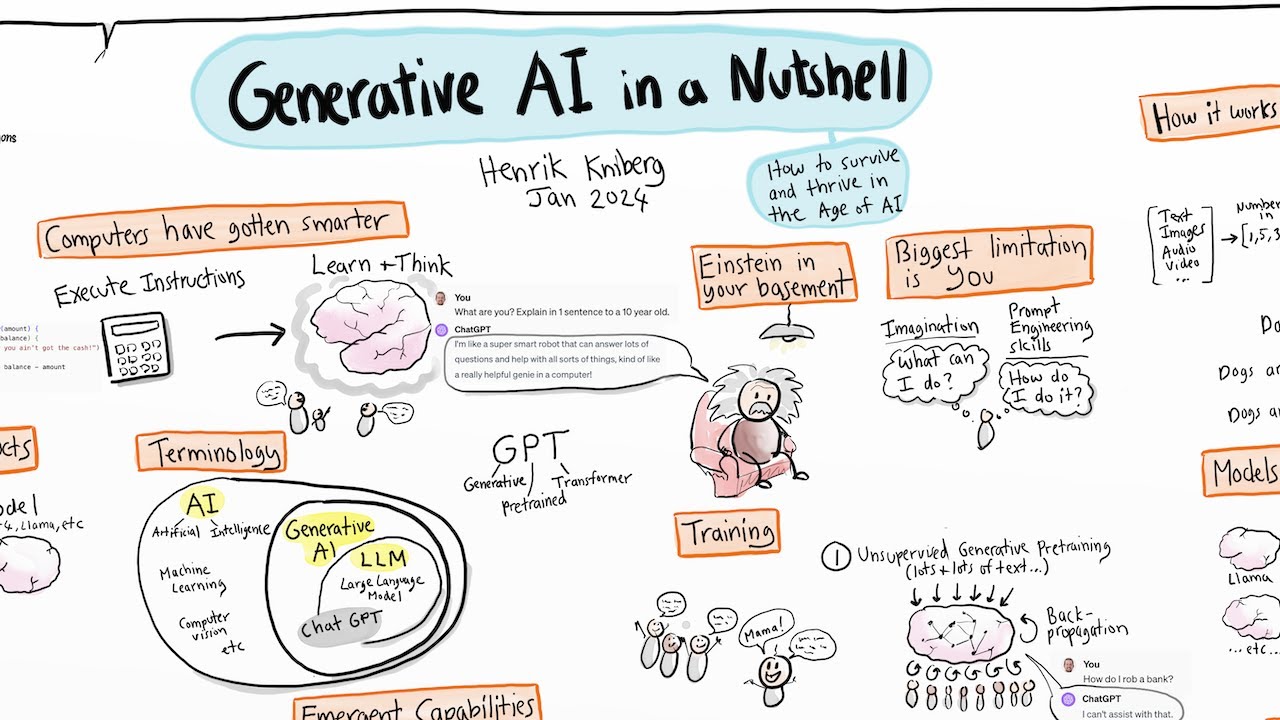

Generative AI in a Nutshell - how to survive and thrive in the age of AI

LLM Module 3 - Multi-stage Reasoning | 3.3 Prompt Engineering

5.0 / 5 (0 votes)