Generative AI Project Lifecycle-GENAI On Cloud

Summary

TLDR: In this video, Kish Naak walks through the Generative AI Project Life Cycle for building cloud-based applications. He outlines the key steps: defining the use case, selecting and customizing a model, prompt engineering, fine-tuning, and aligning the model with human feedback. He also covers model evaluation, optimization, deployment for inferencing, and integration into applications. The video opens a series on generative AI on cloud platforms such as AWS and Azure.

Takeaways

- 🌟 The video discusses the Generative AI (Gen AI) Project Life Cycle, emphasizing its importance for developing cloud-based applications.

- 📝 The first step in the Gen AI Project Life Cycle is defining the use case, which could be a recommendation application, text summarization, or a chatbot.

- 🔍 The scope of the project is determined by the specific use case, which influences the requirements and resources needed.

- 🤖 The second step involves choosing the right model, which can be a foundation model like OpenAI's GPT or a custom large language model (LLM) built from scratch.

- 🏭 Foundation models are large pre-trained models that can be adapted to specific business use cases through prompt engineering or fine-tuning.

- 🛠 Building a custom LLM requires significant resources, and the team must also manage challenges such as model hallucination.

- 🔧 The third step includes tasks like prompt engineering, fine-tuning, and training with human feedback to align the model with desired outcomes.

- 📊 Evaluation is crucial to measure the model's performance using various metrics, ensuring it meets the project's objectives.

- 🚀 The deployment phase involves optimizing and deploying models for inferencing, where cloud platforms and LLMOps play a significant role.

- ⚙️ Application integration is key after deployment, where the focus is on creating LLM-powered applications that solve specific use cases.

- 🌐 The video mentions the use of multiple cloud platforms like AWS, Azure, and GCP, highlighting the variety of services available for inferencing.
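As one illustration of the evaluation takeaway above, a summarization use case might be scored with a unigram-overlap F1, similar in spirit to ROUGE-1. The video summary does not name specific metrics, so the metric choice and the function name `unigram_f1` are assumptions made for this sketch.

```python
from collections import Counter


def unigram_f1(reference: str, candidate: str) -> float:
    """Unigram-overlap F1 between a reference and a candidate summary.

    Assumption: lowercase whitespace tokenization. Real evaluations usually
    rely on an established metric library instead of a hand-rolled score.
    """
    ref_counts = Counter(reference.lower().split())
    cand_counts = Counter(candidate.lower().split())
    # The multiset intersection counts each shared word only as often as it
    # appears in both texts.
    overlap = sum((ref_counts & cand_counts).values())
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand_counts.values())
    recall = overlap / sum(ref_counts.values())
    return 2 * precision * recall / (precision + recall)
```

A score near 1.0 means the candidate reuses most of the reference wording; a score near 0.0 means little overlap. Word overlap is only a rough proxy for quality, so it would normally be paired with other metrics or human review.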

Q & A

What is the main topic of Kish Naak's YouTube video?

-The main topic of the video is the Generative AI (Gen AI) Project Life Cycle, discussing the workflow and steps involved in developing AI applications in the cloud.

Why is understanding the Gen AI Project Life Cycle important for developing cloud applications?

-Understanding the Gen AI Project Life Cycle is important because it provides a generic workflow that helps developers systematically approach the development of AI applications, from data ingestion to deployment in the cloud.

What are the four to five steps Kish Naak outlines for the Gen AI Project Life Cycle?

-The steps outlined are: 1) Defining the use case, 2) Choosing the right model (Foundation models or custom LLM), 3) Adapting and aligning models through prompt engineering, fine-tuning, and training with human feedback, 4) Evaluation of model performance, and 5) Deployment and application integration.
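The five steps listed above can be sketched as an ordered set of stages. This is a minimal illustration, not something shown in the video: the names `Stage` and `next_stage` are hypothetical, and the loop from a failed evaluation back to adaptation is an assumption about how teams commonly iterate.

```python
from enum import IntEnum
from typing import Optional


class Stage(IntEnum):
    """Lifecycle steps in the order listed above (names are illustrative)."""
    DEFINE_USE_CASE = 1
    CHOOSE_MODEL = 2
    ADAPT_AND_ALIGN = 3
    EVALUATE = 4
    DEPLOY_AND_INTEGRATE = 5


def next_stage(current: Stage, evaluation_passed: bool = True) -> Optional[Stage]:
    """Return the stage that follows `current`, or None after the last one.

    Assumption: a failed evaluation sends the project back to adaptation;
    the video summary does not spell out this loop.
    """
    if current is Stage.EVALUATE and not evaluation_passed:
        return Stage.ADAPT_AND_ALIGN
    if current is Stage.DEPLOY_AND_INTEGRATE:
        return None
    return Stage(current + 1)
```

For example, `next_stage(Stage.EVALUATE, evaluation_passed=False)` returns `Stage.ADAPT_AND_ALIGN`, which models another round of prompt engineering or fine-tuning before deployment.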

What is a 'use case' in the context of Gen AI projects?

-A 'use case' in Gen AI projects refers to the specific application or problem that the AI is intended to solve, such as a recommendation application, text summarization, or a chatbot.

What are Foundation models in the context of AI?

-Foundation models are large pre-trained models like OpenAI's GPT models or Google's BERT, which can be used as a basis to solve a wide range of generic use cases in AI.

What does Kish Naak mean by 'fine-tuning' a Foundation model?

-Fine-tuning a Foundation model means continuing to train it on custom, task-specific data so that it performs better on a particular business use case.

What is a custom LLM, and why would a company choose to build one from scratch?

-A custom LLM (Large Language Model) is an AI model developed from scratch to specifically address a company's unique use cases. Companies choose to build a custom LLM to have full control over the model's capabilities and to tailor it precisely to their needs, although it requires more resources.

Why is prompt engineering important in the Gen AI Project Life Cycle?

-Prompt engineering is important because it involves crafting the right prompts to guide the AI model to solve a specific use case effectively, which is crucial for the model's performance and accuracy.
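To make the idea concrete, here is a small sketch of a prompt template for the text summarization use case mentioned earlier. The function name `build_summary_prompt`, the wording of the instructions, and the `max_sentences` parameter are all assumptions for illustration; the video summary does not prescribe a prompt format.

```python
def build_summary_prompt(text: str, max_sentences: int = 2) -> str:
    """Assemble an instruction prompt for a summarization use case.

    Hypothetical template: the role line, length limit, and grounding
    instruction are illustrative choices, not taken from the video.
    """
    if max_sentences < 1:
        raise ValueError("max_sentences must be at least 1")
    return (
        "You are a helpful assistant that writes concise summaries.\n"
        f"Summarize the text below in at most {max_sentences} sentence(s).\n"
        "Use only information that appears in the text.\n\n"
        f"Text:\n{text.strip()}\n\n"
        "Summary:"
    )
```

The resulting string would be sent to whichever foundation model the project has chosen. Keeping the template in one function makes it easy to revise the wording and compare the outputs during evaluation.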

What role does human feedback play in training LLMs?

-Human feedback plays a critical role in training LLMs because it helps align the model's outputs with desired outcomes, improving the model's accuracy and effectiveness in real-world applications.

What does Kish Naak discuss regarding deployment and application integration in the Gen AI Project Life Cycle?

-Kish Naak explains that once the model is ready and evaluated, the next steps are deployment for inferencing and application integration. This includes optimizing the models for performance and integrating them into different applications to solve various use cases.

What platforms and services will be covered in Kish Naak's series on Gen AI on cloud?

-The series will cover platforms like AWS SageMaker, Azure AI Studio, and potentially Google Cloud Platform (GCP), focusing on their services and capabilities for developing and deploying Gen AI applications in the cloud.

Outlines

Dieser Bereich ist nur für Premium-Benutzer verfügbar. Bitte führen Sie ein Upgrade durch, um auf diesen Abschnitt zuzugreifen.

Upgrade durchführenMindmap

Dieser Bereich ist nur für Premium-Benutzer verfügbar. Bitte führen Sie ein Upgrade durch, um auf diesen Abschnitt zuzugreifen.

Upgrade durchführenKeywords

Dieser Bereich ist nur für Premium-Benutzer verfügbar. Bitte führen Sie ein Upgrade durch, um auf diesen Abschnitt zuzugreifen.

Upgrade durchführenHighlights

Dieser Bereich ist nur für Premium-Benutzer verfügbar. Bitte führen Sie ein Upgrade durch, um auf diesen Abschnitt zuzugreifen.

Upgrade durchführenTranscripts

Dieser Bereich ist nur für Premium-Benutzer verfügbar. Bitte führen Sie ein Upgrade durch, um auf diesen Abschnitt zuzugreifen.

Upgrade durchführenWeitere ähnliche Videos ansehen

What Skillsets Takes You To Become a Pro Generative AI Engineer #genai

Detailed LLMOPs Project Lifecycle

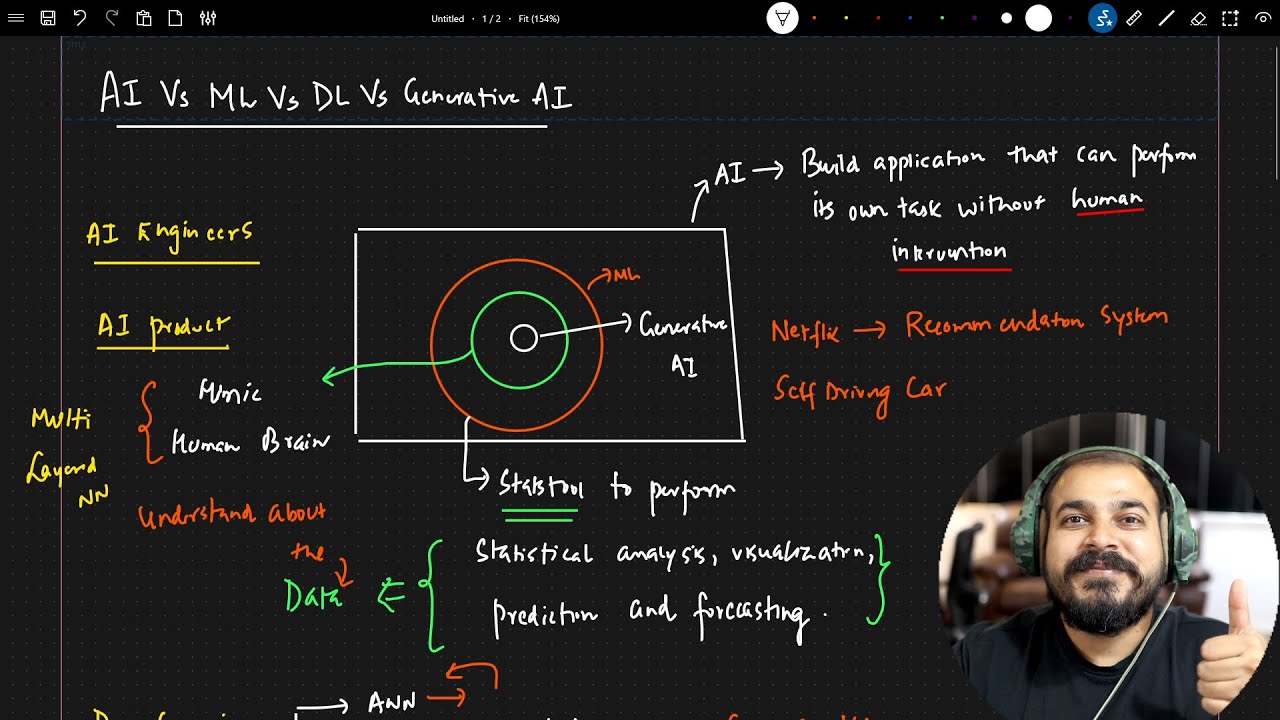

AI vs ML vs DL vs Generative Ai

Fresh And Updated Langchain Series- Understanding Langchain Ecosystem

Exploring Job Market Of Generative AI Engineers- Must Skillset Required By Companies

Introduction to Generative AI

5.0 / 5 (0 votes)