Concept and Types of Reliability

Summary

TLDR: This video discusses the concept of reliability in measurement, explaining its main types and how reliability relates to validity. Reliability is defined as the consistency of measurements, while validity refers to their accuracy. The speaker covers test-retest, parallel forms, and internal consistency reliability, and explains how each method checks whether a test yields stable results. The video also covers factors that affect reliability, including the number of items, score variance, item homogeneity, and sample size, and offers guidance on obtaining accurate and reliable measurements.

Takeaways

- 📏 Reliability refers to the consistency of a measurement, whereas validity refers to the accuracy of a measurement.

- 🎯 A measurement can be reliable (consistent) but not valid (accurate), as in the example of a clock consistently showing the wrong time.

- 🔁 Test-retest reliability measures the consistency of scores over time, often used for stable constructs like intelligence.

- 📚 Parallel forms reliability addresses the issue of carry-over effects by using two equivalent tests to measure the same construct.

- 🔄 Internal consistency reliability, commonly estimated with Cronbach's alpha, measures the consistency of items within the same test.

- ⚖️ Split-half reliability divides the test into two halves and correlates the scores on each half; it assumes the two halves are equivalent, with equal means and variances.

- 📝 Inter-rater reliability measures consistency between different raters or judges assessing the same subject.

- 📊 Factors affecting reliability include the number of items, the variance of scores, and the stability of the construct being measured.

- 🚀 Speed tests tend to yield inflated reliability estimates when internal consistency methods are used, because many examinees leave the later items unanswered; these methods should therefore be avoided for speed tests.

- 💡 Reliability should be interpreted with respect to specific contexts and sample groups, and not as an absolute quality of the test itself.
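The inter-rater takeaway can be made concrete with Cohen's kappa, one common index for two raters who assign each subject to a category. The video does not say which inter-rater index it uses, so kappa is an assumption here, and the function name `cohens_kappa` and the ratings are hypothetical. Kappa corrects raw percentage agreement for the agreement expected by chance alone.

```python
from collections import Counter

def cohens_kappa(rater1, rater2):
    """Cohen's kappa for two raters who each assign one category per subject."""
    if len(rater1) != len(rater2) or not rater1:
        raise ValueError("both raters must rate the same, non-empty set of subjects")
    n = len(rater1)
    # Observed agreement: share of subjects given the same category by both raters
    p_observed = sum(a == b for a, b in zip(rater1, rater2)) / n
    # Chance agreement: product of the two raters' marginal proportions, summed over categories
    counts1, counts2 = Counter(rater1), Counter(rater2)
    categories = counts1.keys() | counts2.keys()
    p_chance = sum((counts1[c] / n) * (counts2[c] / n) for c in categories)
    if p_chance == 1:
        raise ValueError("kappa is undefined when both raters use one identical category")
    return (p_observed - p_chance) / (1 - p_chance)

# Hypothetical example: two judges rate four essays as "pass" or "fail"
judge_a = ["pass", "pass", "fail", "fail"]
judge_b = ["pass", "fail", "fail", "fail"]
print(cohens_kappa(judge_a, judge_b))  # 0.5
```

Here the judges agree on 75% of the essays, but half of that agreement would be expected by chance given how often each judge uses each category, so kappa comes out at 0.5.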

Q & A

What is the basic definition of reliability in measurement?

- Reliability in measurement refers to the consistency of the results. A measurement is considered reliable if it consistently produces the same results under the same conditions.

How is reliability related to validity?

- Reliability and validity are linked: for a measurement to be valid (accurate), it must also be reliable (consistent). However, a measurement can be reliable without being valid. For example, a clock that is consistently 5 minutes fast is reliable but not valid.

What is an example of a reliable but not valid measurement?

- An example is a clock that is always 5 minutes fast. It makes the same error every time, so it is reliable, but because it does not show the correct time, it is not valid.
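The clock example separates two kinds of error: a constant (systematic) error, which undermines validity, and random scatter, which undermines reliability. A minimal sketch with made-up readings (the helper name `error_profile` is hypothetical) shows that the 5-minutes-fast clock has zero scatter but a constant bias.

```python
from statistics import mean, pstdev

def error_profile(readings, true_values):
    """Return (bias, spread) of the measurement errors.

    bias   = average error, i.e. systematic error (a validity problem)
    spread = population SD of the errors, i.e. random error (a reliability problem)
    """
    errors = [r - t for r, t in zip(readings, true_values)]
    return mean(errors), pstdev(errors)

# True times in minutes after midnight: 08:00, 09:00, 10:00, 11:00
true_times = [480, 540, 600, 660]
fast_clock = [t + 5 for t in true_times]  # hypothetical clock, always 5 minutes fast

bias, spread = error_profile(fast_clock, true_times)
print(bias, spread)  # bias of 5 minutes, spread of zero: consistent but inaccurate
```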

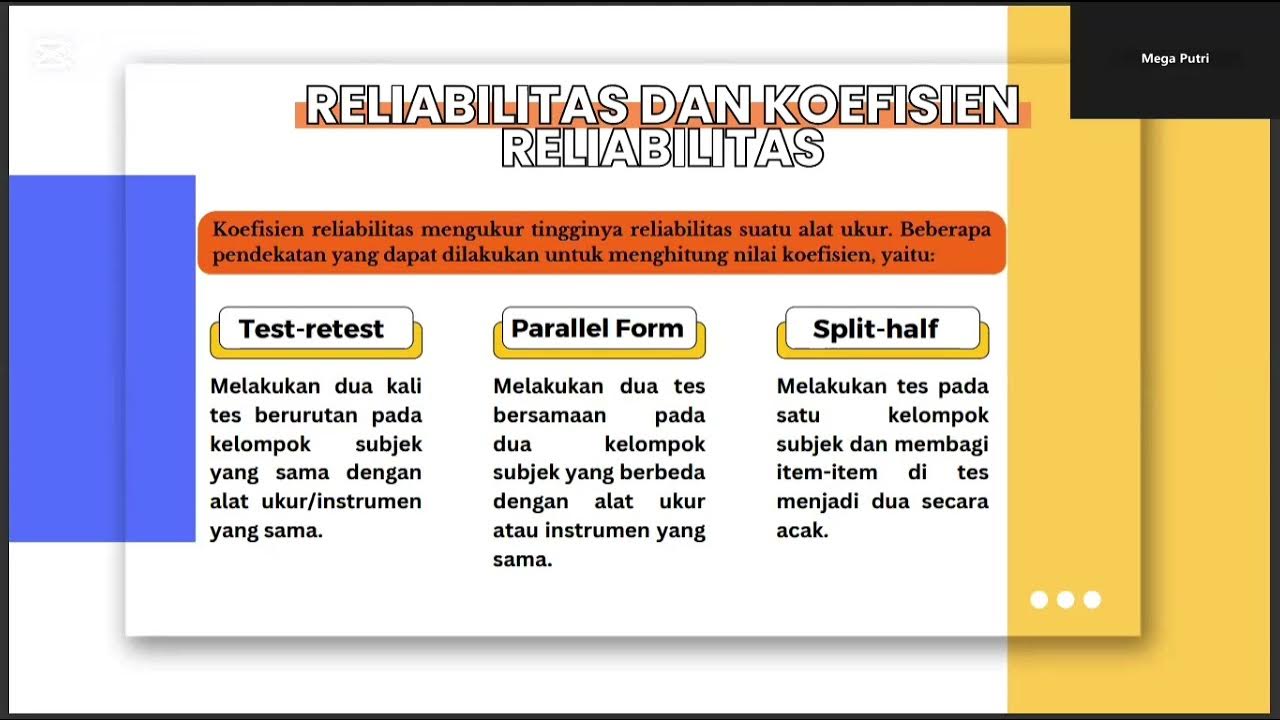

What are the types of reliability mentioned in the video?

- The video mentions several types of reliability: test-retest reliability, parallel forms reliability, internal consistency reliability, and inter-rater reliability.

What is test-retest reliability?

- Test-retest reliability measures the stability of a test over time. It involves administering the same test twice to the same group of people and then correlating the two sets of scores. If the scores are consistent, the test has high test-retest reliability.

When is test-retest reliability most appropriate?

- Test-retest reliability is most appropriate when the construct being measured is stable, such as intelligence or personality. It is less suitable for constructs that can change rapidly, like attitudes or emotions.

What is the main issue with test-retest reliability?

- The main issue with test-retest reliability is the carry-over effect, where participants remember the test content from the first session and use that knowledge in the second session, potentially inflating the reliability score.

How does parallel forms reliability address the issue of the carry-over effect?

- Parallel forms reliability addresses the carry-over effect by using two different versions of the same test. These versions are designed to be equivalent in difficulty, and the reliability is determined by correlating the scores from the two versions.

What is internal consistency reliability?

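- Internal consistency reliability measures how consistently the items within a single test measure the same construct. One approach is the split-half method: the test is divided into two halves, the half scores are correlated, and the result is adjusted to estimate the reliability of the full-length test. A more commonly used index is Cronbach's alpha, which is based on the variances of all items rather than on a single split.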

What factors can influence the reliability of a test?

- Several factors influence the reliability of a test, including the number of items, the homogeneity of the items, the number of subjects, the stability of the construct being measured, and whether the test is a speed test or a power test.
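The effect of the number of items can be quantified with the Spearman-Brown prophecy formula, a standard psychometric result that the Q&A does not spell out: a test whose length is multiplied by $n$ is predicted to have reliability $\rho^* = \frac{n\rho}{1 + (n-1)\rho}$. The formula assumes the added or removed items are parallel to the existing ones; the function name `spearman_brown` and the 20-item example are hypothetical.

```python
def spearman_brown(reliability, length_factor):
    """Predicted reliability after multiplying test length by length_factor.

    Assumes the added (or removed) items are parallel to the existing ones.
    """
    if not 0 <= reliability <= 1 or length_factor <= 0:
        raise ValueError("reliability must be in [0, 1] and length_factor positive")
    return length_factor * reliability / (1 + (length_factor - 1) * reliability)

# A hypothetical 20-item test with reliability 0.60
for factor in (0.5, 1, 2, 3):
    print(f"{int(20 * factor)} items -> {spearman_brown(0.60, factor):.2f}")
```

Under these assumptions, doubling the test to 40 items raises the predicted reliability from 0.60 to 0.75, while halving it to 10 items lowers it to about 0.43, which illustrates why longer tests tend to be more reliable.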
