Using Mistral Large 2 in IBM watsonx.ai flows engine

Summary

TL;DR: In this video, the presenter explores using the watsonx.ai flows engine with the Mistral Large 2 large language model. Mistral Large 2 excels at reasoning and code generation and supports many languages, including character-based ones like Chinese and Japanese. The video demonstrates creating text completion and chat flows with the watsonx.ai flows engine, deploying them via the CLI, and interacting with them through the JavaScript SDK. It showcases Mistral Large 2's capabilities in multilingual translation and in maintaining conversation context, highlighting how easily applications can be built with these tools.

Takeaways

- 🌐 The video explores integrating the Mistral Large 2 language model with the watsonx.ai flows engine for multilingual AI applications.

- 💬 Mistral Large 2 is designed to be multilingual, supporting a wide range of languages including character-based languages like Chinese, Japanese, and Hindi.

- 💡 The model excels in reasoning and code generation, making it a valuable tool for developers looking to build applications with diverse linguistic capabilities.

- 🔗 The watsonx.ai flows engine is introduced as a framework for building AI flows, which can be used for various AI tasks like text completion, summarization, classification, and more.

- 🛠️ The video demonstrates creating a text completion flow using the watsonx.ai flows engine, showcasing the ease of deploying AI flows to a live endpoint.

- 🔑 The watsonx.ai flows engine CLI is used to deploy the flow, and an SDK is available for JavaScript and Python to interact with the deployed flows.

- 🌐 The video provides an example of using Mistral Large 2 for text completion in multiple languages, showcasing its capabilities with character-based languages.

- 💬 A chat flow is also demonstrated, where the model maintains context across multiple user inputs, simulating a conversational interface.

- 🔧 The video suggests that for practical applications, maintaining state across interactions would be necessary, hinting at the need for client-side or server-side solutions.

- 🔄 The video mentions future content on tool calling with Mistral Large, indicating ongoing exploration of the model's capabilities.

Q & A

What is the main focus of the video series?

-The main focus of the video series is exploring how to use the watsonx.ai flows engine with different large language models, including IBM Granite and Llama 3.1, and in the final video, with Mistral Large 2.

What are the capabilities of Mistral Large 2 according to the video?

-Mistral Large 2 is capable of reasoning and code generation. It is multilingual by design, supporting not only languages like English, Spanish, and French but also character-based languages such as Chinese, Japanese, and Hindi. It also excels at reasoning involving larger pieces of text due to its large context window of almost 130,000 tokens.

What is the watsonx.ai flows engine?

-The watsonx.ai flows engine is a framework for building AI flows. It lets users write flows in a declarative flow language, covering tasks from text completion, summarization, and classification to retrieval-augmented generation.

How does the watsonx.ai flows engine work with the CLI?

-The watsonx.ai flows engine CLI deploys flows to a live endpoint. Users deploy their flow configuration and environment variables by running the 'wxflows deploy' command.

What is the purpose of the JavaScript code example in the video?

-The JavaScript code example demonstrates how to interact with the deployed flows using the SDK. It shows how to set up the watsonx.ai flows engine endpoint and API key, and how to send requests to the flow, such as asking for translations in different languages.

What is the significance of the model variable in the text completion flow?

-The model variable in the text completion flow allows the user to change the language model being used without modifying the flow itself. Although the video only uses Mistral Large, this flexibility enables the use of different models in the future.

How does the video demonstrate the multilingual capability of Mistral Large 2?

-The video demonstrates the multilingual capability of Mistral Large 2 by asking it to translate the word 'computer' into five different languages, showcasing its ability to handle both alphabetic and character-based languages.

What is the purpose of the chat flow example in the video?

-The chat flow example in the video is to show how Mistral Large 2 can maintain context over multiple turns of a conversation. It uses a chat flow to answer questions and provide follow-up responses based on the chat history.

What is the role of the '<s>' and '[INST]' tags in the prompt template?

-The '<s>' and '[INST]' tags in the prompt template mark the start of the sequence and the boundaries of the instruction. They help the language model parse the prompt, which aids in generating more accurate responses.
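
As a rough illustration, the sketch below wraps an instruction in Mistral-style instruct markers. It assumes the tags mentioned in the video are the '<s>' start-of-sequence token and the '[INST]' / '[/INST]' pair from Mistral's instruct format; the exact template used in the video is not shown in this summary, and `buildInstructPrompt` is a hypothetical helper name, not part of any SDK.

```javascript
// Hypothetical helper (not from the flows engine SDK): wraps an instruction
// in Mistral-style instruct markers. The exact template used in the video
// is an assumption here.
function buildInstructPrompt(instruction) {
  // Trim stray whitespace so the markers sit cleanly around the text.
  return `<s>[INST] ${instruction.trim()} [/INST]`;
}

const prompt = buildInstructPrompt("  Translate 'computer' into French. ");
console.log(prompt);
```

Keeping the markers in one helper means the template can be adjusted in a single place if a different model expects a different format.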

How can the state be maintained in a chat flow as demonstrated in the video?

-In the video, the state is maintained by sending the entire chat history with each request. However, for a more practical application, it suggests using client-side JavaScript or server-side routes to store and manage the state between requests.
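
A minimal sketch of that pattern, keeping the history on the client and resending all of it with each request, might look like the following. The function names and the `{ role, content }` message shape are assumptions for illustration, not the flows engine SDK's API, and the model replies are hard-coded placeholders rather than real output.

```javascript
// Hypothetical helpers; none of these names come from the flows engine SDK.

// Return a new history array with one more message appended (no mutation).
function addMessage(history, role, content) {
  return [...history, { role, content }];
}

// Build the payload for the next request: the full history plus the new question.
function buildChatRequest(history, question) {
  return { messages: addMessage(history, "user", question) };
}

// Simulated two-turn conversation. The replies are placeholders standing in
// for whatever the deployed chat flow would return.
let history = [];

const first = buildChatRequest(history, "Translate 'computer' into Spanish.");
history = addMessage(first.messages, "assistant", "(model reply 1)");

const second = buildChatRequest(history, "And into French?");
history = addMessage(second.messages, "assistant", "(model reply 2)");

// The second request carries both user turns plus the first reply.
console.log(second.messages.length);
```

Because each request contains the whole conversation, the endpoint itself can stay stateless; the trade-off is that the payload grows with every turn, which is why a longer-lived application would store the history in client-side state or behind a server-side route.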

What is tool calling and when can we expect to see a video on it?

-Tool calling is a feature that allows the language model to interact with external tools or services. The video mentions that a video demonstrating tool calling with Mistral Large will be released soon.


Nursing Theorist Presentation: Jean Watson

How to Build Classification Models (Weka Tutorial #2)

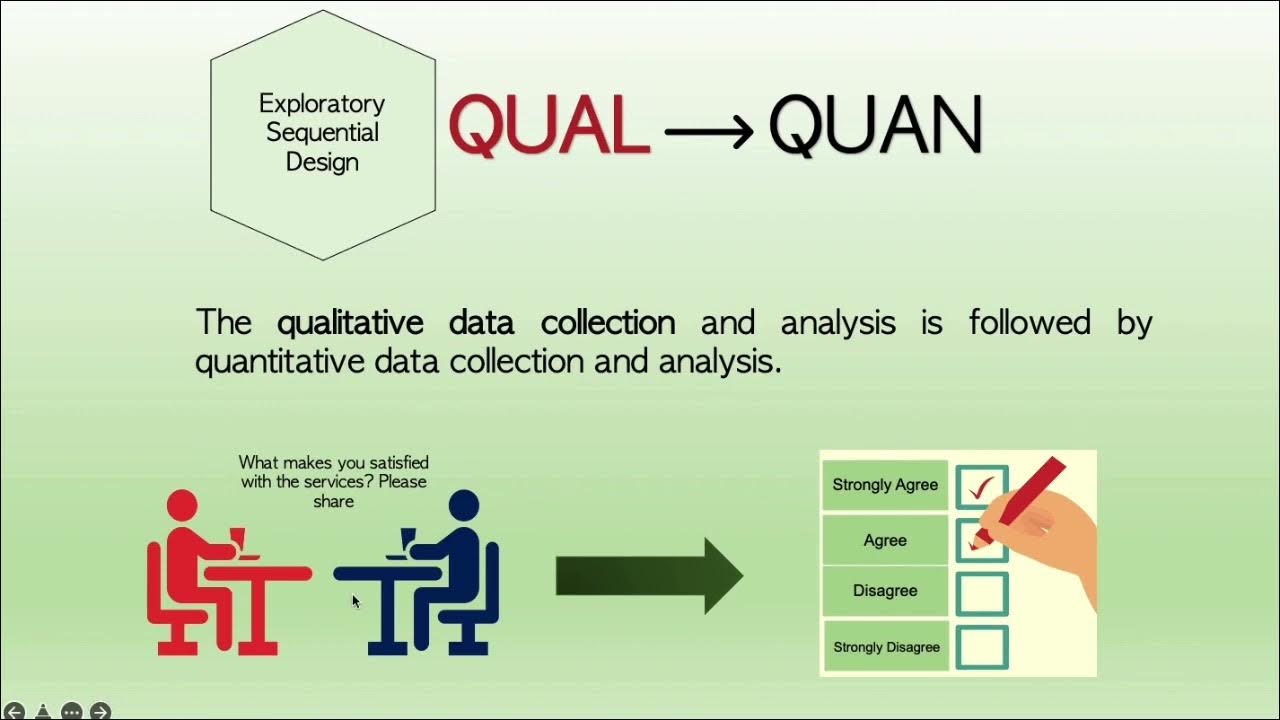

EXPLORATORY SEQUENTIAL MIXED METHOD RESEARCH DESIGN

Regresi Resisten (Garis Resisten)

How to Pass CISSP in 2024: Pass the Exam on Your First Try

Behaviorism: The Beginnings - Ch10 - History of Modern Psychology - Schultz & Schultz

5.0 / 5 (0 votes)