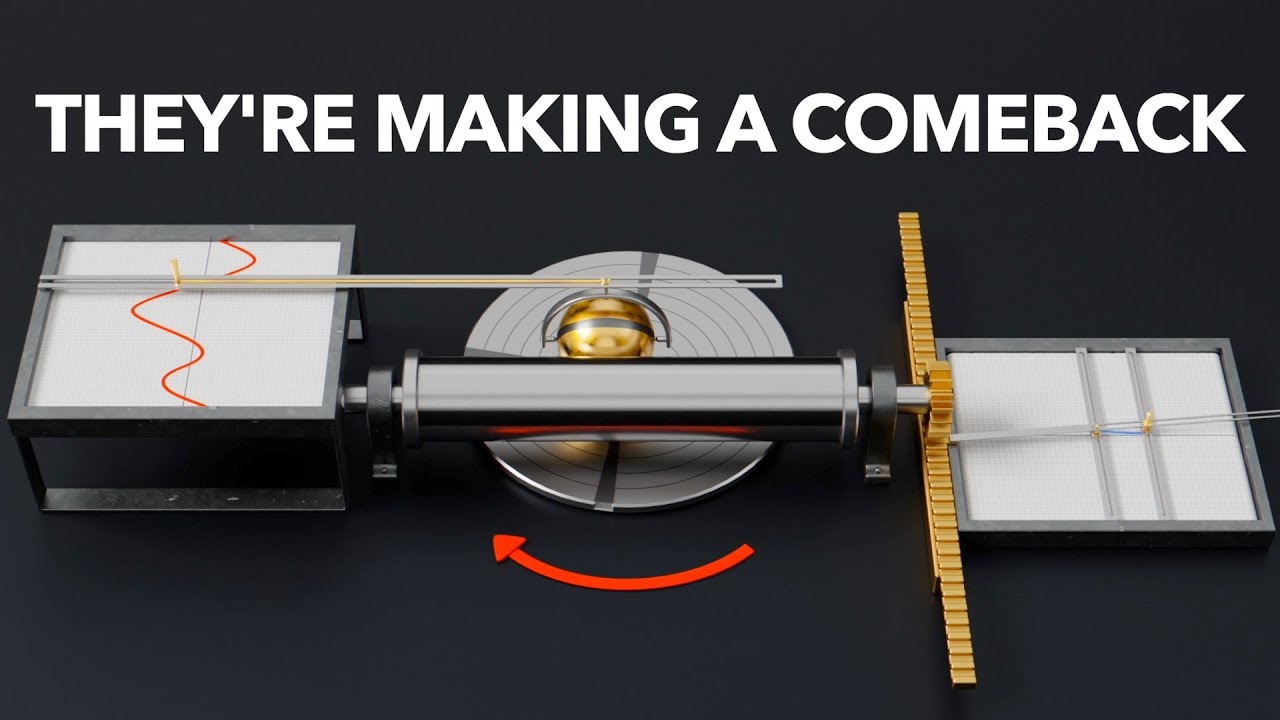

Future Computers Will Be Radically Different (Analog Computing)

Summary

TL;DR: This script explores the resurgence of analog computing in the era of artificial intelligence. It explains how analog computers, once dominant for tasks like predicting eclipses and guiding anti-aircraft guns, fell out of favor with the advent of digital computers. However, factors like energy efficiency, speed, and the unique requirements of neural networks may signal a comeback for analog technology. The script delves into the history of AI, the limitations of perceptrons, and the breakthroughs that led to the current AI boom, highlighting the potential of analog computing to perform the matrix multiplications essential for AI with less power and greater efficiency.

Takeaways

- 🕒 Analog computers were once the most powerful on Earth, used for predicting eclipses, tides, and guiding anti-aircraft guns.

- 🔄 The advent of solid-state transistors led to the rise of digital computers, which have become the standard for virtually all computing tasks today.

- 🔧 Analog computers can be reprogrammed by changing the connections of wires to solve a range of differential equations, such as simulating a damped mass oscillating on a spring.

- 🌐 Analog computers represent data as varying voltages rather than binary digits, which allows for certain computations to be performed more directly and with less power.

- ⚡ The efficiency of analog computers is highlighted by the fact that adding two currents can be done by simply connecting two wires, compared to the hundreds of transistors required in digital computers.

- 🚫 However, analog computers are not general-purpose devices and cannot perform tasks like running Microsoft Word.

- 🔄 They also suffer from non-repeatability and inexactness due to the continuous nature of their inputs and outputs and the variability in component manufacturing.

- 🌟 The potential resurgence of analog technology is linked to the rise of artificial intelligence and the specific computational needs of neural networks.

- 🤖 The perceptron, an early neural network model, demonstrated the potential of AI but was limited in its capabilities and faced criticism, leading to the first AI winter.

- 📈 The development of deeper neural networks, like AlexNet, and the use of large datasets like ImageNet have significantly improved AI performance, but also increased computational demands.

- 🔌 Modern challenges for AI, such as high energy consumption and the limitations of Moore's Law, suggest that analog computing could offer a more efficient solution for certain AI tasks.

- 🛠️ Companies like Mythic AI are developing analog chips to run neural networks, offering high computational power with lower energy consumption compared to digital counterparts.

Q & A

What were analog computers historically used for?

-Historically, analog computers were used for predicting eclipses, tides, and guiding anti-aircraft guns.

How did the advent of solid-state transistors impact the development of computers?

-The advent of solid-state transistors marked the rise of digital computers, which eventually became the dominant form of computing due to their versatility and efficiency.

What is the fundamental difference between analog and digital computers in terms of processing?

-Analog computers represent and process information as continuous signals, such as varying voltages, whereas digital computers process information as discrete binary values, zeros and ones.

How does the analog computer in the script simulate a physical system like a damped mass on a spring?

-The analog computer simulates a damped mass on a spring by using electrical circuitry that oscillates like the physical system, allowing the user to see the position of the mass over time on an oscilloscope.
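To make the differential equation concrete, here is a minimal digital sketch of the same system, m·x'' + c·x' + k·x = 0. The parameter values (mass, damping, stiffness, step size) are illustrative assumptions, not figures from the video, and the step-by-step integration below is exactly the kind of work the analog circuit avoids by letting voltages evolve continuously.

```python
def simulate_damped_spring(mass=1.0, damping=0.5, stiffness=4.0,
                           x0=1.0, v0=0.0, dt=0.001, t_end=10.0):
    """Integrate m*x'' + c*x' + k*x = 0 with semi-implicit Euler steps.

    All default values are assumed for illustration only.
    """
    steps = int(round(t_end / dt))
    x, v = x0, v0
    positions = [x]
    for _ in range(steps):
        # Acceleration from the force balance: spring force plus damping force.
        a = -(damping * v + stiffness * x) / mass
        v += a * dt  # update velocity first
        x += v * dt  # then position, using the updated velocity
        positions.append(x)
    return positions


positions = simulate_damped_spring()
late_peak = max(abs(p) for p in positions[-1000:])  # largest swing in the final second
print(f"start: {positions[0]:.3f}, late peak: {late_peak:.3f}")
```

Plotting `positions` against time gives the decaying oscillation that the oscilloscope trace shows on the analog machine.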

What is the Lorenz system and why is it significant in the context of analog computers?

-The Lorenz system is a set of differential equations that model atmospheric convection and is known for being one of the first discovered examples of chaos. It is significant because analog computers can simulate such systems and visualize their behavior, like the Lorenz attractor.
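For reference, the Lorenz equations are dx/dt = σ(y − x), dy/dt = x(ρ − z) − y, dz/dt = xy − βz. The sketch below integrates them numerically with the classic parameter choice σ = 10, ρ = 28, β = 8/3. The integrator (fourth-order Runge-Kutta), the step size, and the starting points are assumptions made for this example. It runs two trajectories whose starting points differ by one part in a hundred million to illustrate the sensitivity that makes the system chaotic.

```python
def lorenz_step(state, dt, sigma=10.0, rho=28.0, beta=8.0 / 3.0):
    """Advance the Lorenz system by one fourth-order Runge-Kutta step."""
    def deriv(s):
        x, y, z = s
        return (sigma * (y - x), x * (rho - z) - y, x * y - beta * z)

    def shifted(s, k, h):
        # Move state s along slope k by step h.
        return tuple(si + h * ki for si, ki in zip(s, k))

    k1 = deriv(state)
    k2 = deriv(shifted(state, k1, dt / 2))
    k3 = deriv(shifted(state, k2, dt / 2))
    k4 = deriv(shifted(state, k3, dt))
    return tuple(
        s + dt / 6 * (a + 2 * b + 2 * c + d)
        for s, a, b, c, d in zip(state, k1, k2, k3, k4)
    )


def lorenz_trajectory(initial, steps=5000, dt=0.01):
    """Return the list of states visited, starting from `initial`."""
    states = [initial]
    for _ in range(steps):
        states.append(lorenz_step(states[-1], dt))
    return states


# Two runs whose initial z values differ by 1e-8 (an arbitrary tiny offset).
run_a = lorenz_trajectory((1.0, 1.0, 1.0))
run_b = lorenz_trajectory((1.0, 1.0, 1.0 + 1e-8))
max_gap = max(
    max(abs(p - q) for p, q in zip(sa, sb)) for sa, sb in zip(run_a, run_b)
)
print(f"largest separation between the two runs: {max_gap:.3f}")
```

Plotting x against z for either run traces out the butterfly-shaped Lorenz attractor.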

What are some advantages of analog computers mentioned in the script?

-Advantages of analog computers include fast computation, lower power consumption, and much simpler circuitry for certain mathematical operations such as addition and multiplication.

What are the main drawbacks of analog computers as discussed in the script?

-The main drawbacks of analog computers are their single-purpose nature, non-repeatability of exact results, and inexactness due to component variations, which can lead to errors.

Why might analog computers be making a comeback according to the script?

-Analog computers may be making a comeback due to the rise of artificial intelligence and the need for efficient processing of matrix multiplications, which are common in neural networks, and the limitations being reached by digital computers.

What is the perceptron and how was it initially perceived in the context of AI?

-The perceptron was an early neural network model designed to mimic how neurons fire in our brains. It was initially perceived as a breakthrough in AI, with claims that it could perform original thought and even distinguish between complex patterns like cats and dogs.
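As a rough illustration of the idea, the sketch below implements a single perceptron: it fires (outputs 1) when the weighted sum of its inputs plus a bias exceeds zero. The training loop uses the textbook perceptron learning rule, which is an assumption here since the summary does not describe the training procedure, and the AND and XOR datasets are chosen only as small examples.

```python
def predict(weights, bias, inputs):
    """Fire (return 1) when the weighted sum plus bias is positive."""
    activation = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if activation > 0 else 0


def train_perceptron(samples, epochs=20, learning_rate=1.0):
    """Textbook perceptron rule: nudge weights toward each misclassified sample."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        mistakes = 0
        for inputs, target in samples:
            error = target - predict(weights, bias, inputs)
            if error != 0:
                mistakes += 1
                weights = [w + learning_rate * error * x
                           for w, x in zip(weights, inputs)]
                bias += learning_rate * error
        if mistakes == 0:  # every sample classified correctly
            break
    return weights, bias


AND_GATE = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR_GATE = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

and_weights, and_bias = train_perceptron(AND_GATE)
and_ok = all(predict(and_weights, and_bias, x) == t for x, t in AND_GATE)

xor_weights, xor_bias = train_perceptron(XOR_GATE)
xor_ok = all(predict(xor_weights, xor_bias, x) == t for x, t in XOR_GATE)

print(f"learned AND: {and_ok}, learned XOR: {xor_ok}")
```

AND is linearly separable, so the rule converges. XOR is not, so no single perceptron can classify all four cases, which is one example of the kind of limitation behind the early criticism of the model.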

How did the development of self-driving cars contribute to the resurgence of AI and neural networks?

-The development of self-driving cars, such as the one created by researchers at Carnegie Mellon using an artificial neural network called ALVINN, demonstrated the potential of neural networks to process complex data like images for steering, contributing to the resurgence of AI.

What is the significance of ImageNet and how did it impact AI research?

-ImageNet, a database of 1.2 million human-labeled images created by Fei-Fei Li, was significant because it provided a large dataset for training neural networks. It led to the ImageNet Large Scale Visual Recognition Challenge, which spurred advancements in neural network performance and understanding.

What challenges are neural networks facing as they grow in size and complexity?

-Neural networks face three main challenges: the high energy consumption of training, the von Neumann bottleneck (fetching data from memory takes up most of the computation time and energy), and the limits of Moore's Law as transistor sizes approach atomic scales.

How does Mythic AI's approach to using analog technology for neural networks address these challenges?

-Mythic AI uses analog chips that repurpose digital flash storage cells as variable resistors to perform matrix multiplications, which are common in neural networks. This approach can potentially offer high computational power with lower energy consumption and may bypass some of the limitations faced by digital computers.
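The principle can be sketched in a few lines, under simplifying assumptions: each weight is stored as a conductance G, each input arrives as a voltage V, each cell passes a current I = G·V (Ohm's law), and the currents on a shared output wire simply add up. One wire therefore yields one row of a matrix-vector product. The function name `analog_matvec`, the example values, and the Gaussian model of device variation are hypothetical and are not taken from Mythic's design.

```python
import random


def analog_matvec(conductances, voltages, noise_std=0.0, rng=None):
    """Idealised analog matrix-vector product: one output current per row.

    noise_std models cell-to-cell variation as a relative Gaussian error
    on each conductance (an assumed, simplified error model).
    """
    rng = rng or random.Random(0)
    currents = []
    for row in conductances:
        total = 0.0
        for g, v in zip(row, voltages):
            g_actual = g * (1.0 + rng.gauss(0.0, noise_std))
            total += g_actual * v  # Ohm's law per cell; the wire sums the currents
        currents.append(total)
    return currents


weights = [[0.2, 0.5, 0.1],
           [0.7, 0.0, 0.3]]        # stored as conductances (arbitrary units)
activations = [1.0, 0.5, 2.0]      # applied as voltages (arbitrary units)

ideal = analog_matvec(weights, activations)
noisy = analog_matvec(weights, activations, noise_std=0.02, rng=random.Random(42))
print("ideal currents:", ideal)
print("with 2% device variation:", noisy)
```

The noisy result lands close to the ideal one but not exactly on it, which mirrors the inexactness trade-off of analog hardware: the answer is approximate, yet often good enough for neural network inference.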

What are some potential applications of Mythic AI's analog computing technology?

-Potential applications of Mythic AI's technology include security cameras, autonomous systems, manufacturing inspection equipment, and smart home speakers for wake word detection, offering efficient AI processing with lower power requirements.

How does the script suggest the future of information technology might involve a return to analog methods?

-The script suggests that the future of information technology might involve a return to analog methods due to their potential for efficient processing of tasks like matrix multiplications, which are central to AI and neural networks, and the limitations being reached by digital technologies.
