Can We Evaluate Embedding Models for RAG Without Ground Truth?

Summary

TLDRThis video explores how to evaluate the retrieval accuracy of embedding models in a RAG system without access to ground truth data. The presenter demonstrates using multiple independent embedding models to simulate ground truth through majority voting on the most frequently retrieved contexts. By comparing retrieval results with this simulated ground truth, the performance of different models is evaluated. The approach enables accurate assessment of embedding models even in the absence of explicit context pairs, making it a practical solution when ground truth is unavailable.

Takeaways

- 😀 Evaluation of retrieval accuracy of an embedding model without ground truth is possible through a majority-voting method using multiple independent embedding models.

- 😀 In a typical scenario with ground truth, an embedding model retrieves the most relevant document for a given question, and the accuracy is calculated by checking if the right context was retrieved.

- 😀 The experiment in the video involves seven embedding models, each with varying parameter sizes and context lengths, which are evaluated based on their performance in a RAG system.

- 😀 In real-world scenarios where ground truth is not available, multiple embedding models can be used to estimate the correct context by voting on the most frequently retrieved response.

- 😀 When testing embedding models, it’s important to ensure that the models are independent of each other (i.e., not based on the same methodology or developed by the same team) to prevent correlated results.

- 😀 The embeddings are created by building a vector store, ingesting documents, and retrieving the most relevant context for each question, with performance being tracked based on processing time and retrieval accuracy.

- 😀 Context lengths for embedding models are typically much smaller than LLMs, with a typical embedding model having a context length of a few hundred to a couple thousand tokens.

- 😀 The seven embedding models tested in the video vary in size, with parameters ranging from 33 million to 560 million, which influences their performance in retrieving relevant contexts.

- 😀 The majority-voting method works by checking the most common retrieved context across all embedding models for each question, and if there’s a tie, the context is discarded.

- 😀 By using ensemble learning with multiple models, the evaluation process becomes more robust, and the model with the most frequent retrieved context is considered as the ground truth, even when no explicit ground truth is available.

Q & A

What is the main challenge in evaluating the retrieval accuracy of embedding models in a RAG system without ground truth?

-The main challenge is that without ground truth (correct question-context pairs), it's difficult to know whether the model retrieves the right context for a given question. Typically, ground truth provides the correct context to measure retrieval accuracy, but in real-world scenarios, such a ground truth is often unavailable.

How was retrieval accuracy evaluated when ground truth was available in the earlier video?

-When ground truth was available, the retrieval accuracy was evaluated by using known question-context pairs. For each question, the embedding model would retrieve the top document, and the retrieval accuracy was measured by checking how often the model retrieved the correct context.

What approach is used to evaluate retrieval accuracy when ground truth is not available?

-When ground truth is not available, the approach involves using multiple independent embedding models. The retrieved contexts from these models are compared, and the most frequent context retrieved across the models is assumed to be the 'ground truth' for evaluating retrieval accuracy.

Why is ensemble modeling important in this context of evaluating retrieval accuracy?

-Ensemble modeling is important because it allows the system to aggregate the outputs of multiple independent embedding models, ensuring that the most frequently retrieved context across models is likely the correct one. This method compensates for the lack of a direct ground truth by using the majority vote from several models.

What is the importance of using independent models in ensemble evaluation?

-Using independent models is crucial because models based on similar architectures or methodologies may retrieve the same context, leading to biased results. Independent models help ensure that the majority vote truly reflects the most relevant context, rather than reinforcing errors made by similar models.

How are the retrieved contexts from multiple models compared?

-The retrieved contexts from the multiple models are compared by counting how many times each context is retrieved for a particular question. The context with the highest retrieval count is considered the most likely correct answer. In case of a tie, those instances are excluded from the final evaluation.

What is the significance of using different embedding models with varying sizes?

-Using embedding models of different sizes helps test a range of model capabilities. Smaller models, like the minLM with 33 million parameters, are faster but may have lower accuracy compared to larger models, such as Snowflake with 560 million parameters. Comparing models of different sizes helps identify the most efficient and accurate model for the task.

What did the results indicate about the performance of smaller versus larger embedding models?

-The results indicated that, despite being 17 times smaller, the minLM model performed with about 87% accuracy, while larger models, like BGE, performed better, with accuracies over 90%. This suggests that even smaller models can still be effective, but larger models tend to provide better retrieval accuracy.

What role do the context length and embedding size play in model performance?

-Context length and embedding size are crucial factors affecting model performance. Models with shorter context lengths (e.g., 512 tokens) have less information to work with, which can limit their ability to retrieve the most relevant context. Additionally, embedding size (the number of parameters) impacts a model's capacity to represent and retrieve nuanced information, with larger models generally yielding better results.

Why can evaluating embedding models without ground truth be valuable in real-world applications?

-Evaluating embedding models without ground truth is valuable because in many real-world applications, ground truth may be expensive or impractical to create. By using the ensemble method to simulate ground truth, businesses and researchers can evaluate and choose the best models without needing manually annotated data.

Outlines

هذا القسم متوفر فقط للمشتركين. يرجى الترقية للوصول إلى هذه الميزة.

قم بالترقية الآنMindmap

هذا القسم متوفر فقط للمشتركين. يرجى الترقية للوصول إلى هذه الميزة.

قم بالترقية الآنKeywords

هذا القسم متوفر فقط للمشتركين. يرجى الترقية للوصول إلى هذه الميزة.

قم بالترقية الآنHighlights

هذا القسم متوفر فقط للمشتركين. يرجى الترقية للوصول إلى هذه الميزة.

قم بالترقية الآنTranscripts

هذا القسم متوفر فقط للمشتركين. يرجى الترقية للوصول إلى هذه الميزة.

قم بالترقية الآنتصفح المزيد من مقاطع الفيديو ذات الصلة

Retrieval Augmented Generation - Neural NebulAI Episode 9

Build your own RAG (retrieval augmented generation) AI Chatbot using Python | Simple walkthrough

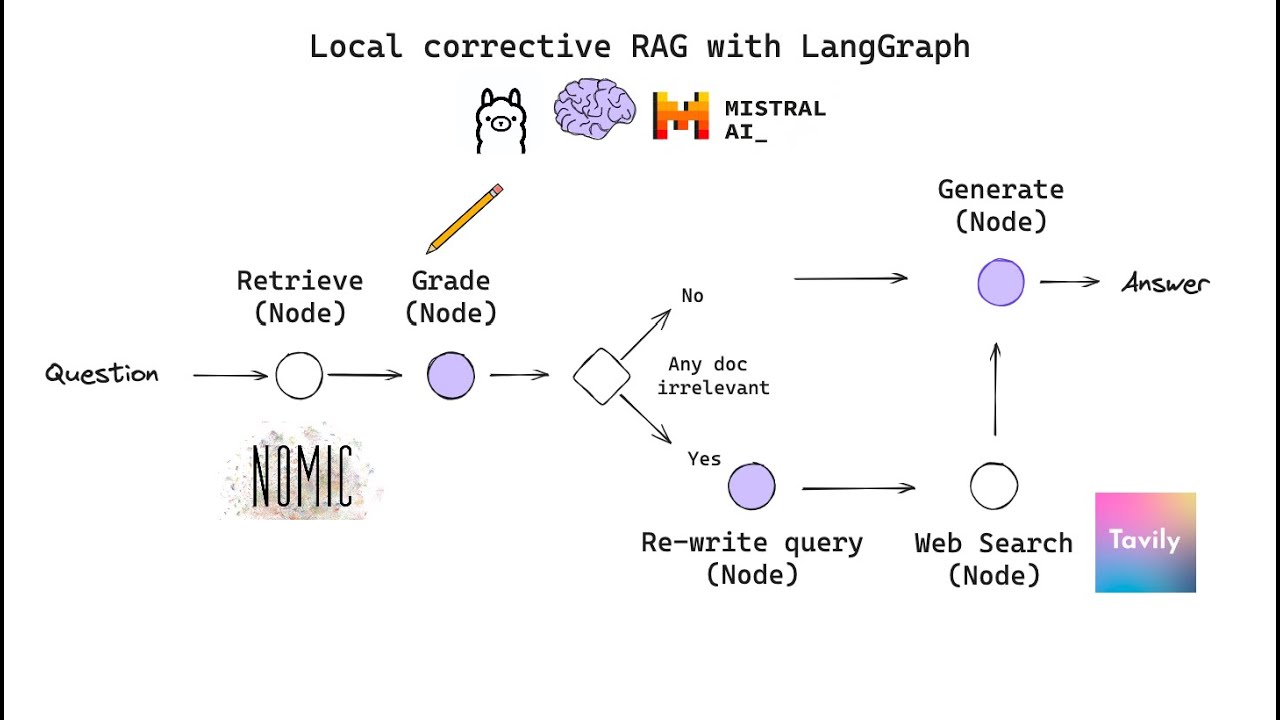

Building Corrective RAG from scratch with open-source, local LLMs

RAG From Scratch: Part 1 (Overview)

The Rise and Fall of the Vector DB category: Jo Kristian Bergum (ex-Chief Scientist, Vespa)

What is a Vector Database? Powering Semantic Search & AI Applications

5.0 / 5 (0 votes)