Detailed LLMOps Project Lifecycle

Summary

TLDR: In this video, Prashna walks through the LLMOps (Large Language Model Operations) project lifecycle and explains why it matters for generative AI applications. The video covers each step, from planning and data collection to model selection, fine-tuning, and deployment. Special attention is given to tools like MLflow and LangChain, and to cloud platforms like AWS, Azure, and GCP, for monitoring, debugging, and versioning. Prashna also touches on frameworks and strategies for AI agents, RAG applications, and chatbots, which is useful for professionals who want to build and deploy LLM-based projects.

Takeaways

- 😀 LLMOps is the lifecycle of projects built on Large Language Models (LLMs), focusing on their operation and deployment, similar to MLOps.

- 😀 Transitioning from MLOps to LLMOps is easy for those already familiar with MLOps, as many tools and concepts overlap.

- 😀 The core of LLMOps includes planning, data collection, model selection, fine-tuning, and deployment, tailored specifically for LLM-based projects.

- 😀 Key use cases for LLMs include generative AI applications (chatbots), agentic AI applications (AI agents), and RAG (retrieval-augmented generation) applications using vector databases.

- 😀 Data collection and preparation are crucial for both fine-tuning LLMs and creating RAG applications, which involve storing data in vector databases.

- 😀 Model selection is vital, with options including OpenAI, Anthropic, and Google models, and requires evaluating external tools for integration.

- 😀 Fine-tuning adjusts the LLM itself to better suit the specific needs of an application, while RAG leaves the model unchanged and transforms data into vectors for easy retrieval.

- 😀 Evaluation and testing involve assessing LLM applications through various metrics, using tools like MLflow and LangChain for monitoring and debugging.

- 😀 Prompt engineering is a continuous improvement process, refining prompts to ensure the desired output from the LLM.

- 😀 Deployment involves setting up infrastructure, scaling strategies, optimizing API call costs, and choosing between cloud platforms like AWS, Azure, and GCP.

- 😀 Ongoing monitoring and maintenance are essential, requiring tools for observability, version control, and cloud services to ensure the LLM app's efficiency and security.

Q & A

What is the focus of the video presented by Prashna?

-The video focuses on explaining the LLMOps project life cycle, covering the steps involved in building and deploying AI agents using large language models (LLMs).

What does LLMOps stand for, and how does it relate to MLOps?

-LLMOps stands for 'Large Language Model Operations.' It is similar to MLOps, which stands for 'Machine Learning Operations,' but LLMOps focuses specifically on operations involving LLMs, such as building AI agents and chatbots and fine-tuning the models behind them.

Why is following industry trends important according to Prashna?

-Following industry trends is crucial because it helps individuals stay relevant in the field, making it easier to secure jobs and consultancy work by aligning with current demands and technologies.

What are some common use cases for LLMs discussed in the video?

-Common use cases for LLMs include generative AI applications like chatbots, AI agents, and RAG (Retrieval-Augmented Generation) applications that involve vector databases and document retrieval systems.

What is the importance of data collection in the LLMOps life cycle?

-Data collection is critical because it supports both fine-tuning LLMs and creating RAG applications. The gathered data is either used to retrain a model or stored in a vector database for document retrieval.

What tools are recommended for model selection in LLMOps?

-Prashna recommends using tools like Hugging Face, OpenAI models, Anthropic models, Google models, and other third-party frameworks for model selection. These tools help evaluate different LLMs for a given project.
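One way to make the comparison between candidate models concrete is a weighted scoring table. The sketch below is only an illustration: the model names, criteria, scores, and weights are all hypothetical placeholders and are not taken from the video.

```python
def rank_models(candidates, weights):
    """Rank candidate models by a weighted sum of per-criterion scores (higher is better)."""
    def total(scores):
        return sum(weights[criterion] * scores[criterion] for criterion in weights)
    return sorted(candidates, key=lambda name: total(candidates[name]), reverse=True)

# Hypothetical weights: how much each criterion matters for this project.
weights = {"quality": 0.5, "cost": 0.3, "latency": 0.2}

# Hypothetical 0-10 scores gathered from your own evaluation runs.
candidates = {
    "model-a": {"quality": 9, "cost": 4, "latency": 6},
    "model-b": {"quality": 7, "cost": 9, "latency": 8},
}

ranking = rank_models(candidates, weights)
print(ranking)
```

Changing the weights changes the ranking, which is the point: the "best" model depends on what the project values most.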

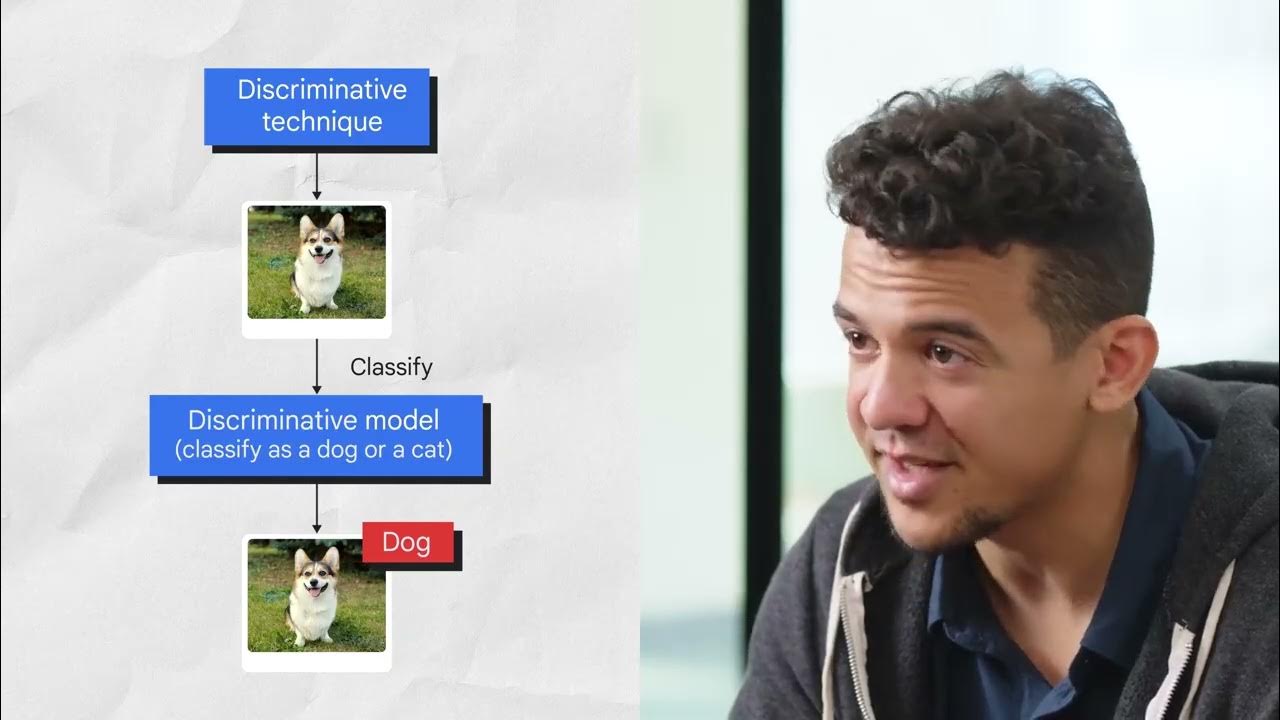

What is the difference between fine-tuning and RAG applications in the LLMOps life cycle?

-Fine-tuning involves retraining an LLM to improve its performance on a specific task, whereas RAG applications focus on using vector databases to store data and retrieve it efficiently, enhancing the model's ability to generate responses based on stored information.
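The retrieval half of a RAG application can be sketched in a few lines. This is a toy illustration, not the approach shown in the video: the word-count "embedding", the `ToyVectorStore` class, and the sample documents are all assumptions standing in for a real embedding model and vector database.

```python
import math
from collections import Counter

def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts (a stand-in for a real embedding model)."""
    return Counter(text.lower().split())

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

class ToyVectorStore:
    """Hypothetical in-memory store; a real project would use a vector database."""
    def __init__(self):
        self._items = []

    def add(self, doc: str) -> None:
        self._items.append((doc, embed(doc)))

    def search(self, query: str, k: int = 1) -> list:
        q = embed(query)
        ranked = sorted(self._items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [doc for doc, _ in ranked[:k]]

def build_prompt(question: str, store: ToyVectorStore, k: int = 1) -> str:
    """Put the retrieved documents into the prompt that would be sent to the LLM."""
    context = "\n".join(store.search(question, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"

store = ToyVectorStore()
store.add("Refunds are processed within five business days.")
store.add("Our office is closed on public holidays.")

top = store.search("How long do refunds take?", k=1)
prompt = build_prompt("How long do refunds take?", store)
print(prompt)
```

Note that the model's weights never change here. Only the prompt does, which is the practical difference from fine-tuning.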

What role does prompt engineering play in LLMOps?

-Prompt engineering involves crafting and refining prompts to ensure the LLM produces the desired output. This process is iterative, where different prompts are tested and adjusted until the optimal result is achieved.
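The iterate-and-compare loop described above might look like the following sketch. Everything in it is hypothetical: `fake_llm` stands in for a real model call, and `score` is a deliberately simple length check that a real project would replace with its own quality criteria.

```python
def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a model call: it is concise only when asked to be."""
    if "one sentence" in prompt.lower():
        return "LLMOps manages the lifecycle of LLM applications."
    return "LLMOps is a broad topic. " * 5

def score(output: str, max_words: int = 15) -> float:
    """Hypothetical quality check: 1.0 if the reply fits the length budget, else 0.0."""
    return 1.0 if len(output.split()) <= max_words else 0.0

def pick_best_prompt(candidates, question):
    """Try every prompt template and return the one whose output scores highest."""
    results = []
    for template in candidates:
        prompt = template.format(question=question)
        results.append((score(fake_llm(prompt)), template))
    best_score, best_template = max(results, key=lambda r: r[0])
    return best_template, best_score

candidates = [
    "{question}",
    "Answer in one sentence: {question}",
]

best_template, best_score = pick_best_prompt(candidates, "What is LLMOps?")
print(best_template, best_score)
```

In practice the candidate list grows over time, and the winning template is versioned alongside the rest of the application.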

What is the significance of evaluation and testing in the LLMOps project life cycle?

-Evaluation and testing are essential for assessing the performance of LLM applications. They rely on methods such as A/B testing, bias detection, and performance assessment to ensure the application meets its objectives before deployment.
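As a rough illustration of A/B testing two versions of an LLM application, the sketch below compares success rates with a two-proportion z-test. The counts are made up, and "success" is whatever the project defines it to be (for example, a thumbs-up from the user); the video does not prescribe a specific statistical test.

```python
import math

def two_proportion_z(success_a, total_a, success_b, total_b):
    """Compare two success rates; returns (rate_a, rate_b, z, two-sided p-value)."""
    p_a = success_a / total_a
    p_b = success_b / total_b
    pooled = (success_a + success_b) / (total_a + total_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / total_a + 1 / total_b))
    if se == 0:
        # Both variants always succeed or always fail: no measurable difference.
        return p_a, p_b, 0.0, 1.0
    z = (p_b - p_a) / se
    # Two-sided p-value under the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return p_a, p_b, z, p_value

# Hypothetical counts: variant A is the current prompt, variant B the new one.
p_a, p_b, z, p_value = two_proportion_z(120, 200, 150, 200)
print(f"A={p_a:.2f} B={p_b:.2f} z={z:.2f} p={p_value:.4f}")
```

A small p-value suggests the difference between the variants is unlikely to be noise, but the sample size and the definition of success still need to be chosen with care.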

How does Prashna suggest deploying and maintaining LLM applications in production?

-Prashna suggests using cloud platforms like AWS, Azure, or GCP for deploying LLM applications. Deployment includes setting up infrastructure, creating API endpoints, scaling strategies, and optimizing API costs. Post-deployment, monitoring and maintenance are handled using tools like MLflow, LangChain, and other frameworks for observability and debugging.
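To make "optimizing API costs" concrete, a back-of-the-envelope estimate like the one below can help. The prices and traffic numbers are placeholders chosen for easy arithmetic, not real provider rates; check the current pricing of whichever model you deploy.

```python
from dataclasses import dataclass

@dataclass
class Pricing:
    """Hypothetical per-token pricing, in USD per 1,000 tokens."""
    input_per_1k: float
    output_per_1k: float

def monthly_cost(requests_per_day, input_tokens, output_tokens, pricing, days=30):
    """Estimate monthly spend from average tokens per request and daily traffic."""
    per_request = (
        (input_tokens / 1000) * pricing.input_per_1k
        + (output_tokens / 1000) * pricing.output_per_1k
    )
    return per_request * requests_per_day * days

pricing = Pricing(input_per_1k=0.001, output_per_1k=0.002)  # placeholder rates

# Baseline: long prompts. Trimmed: same traffic with half the input tokens.
baseline = monthly_cost(1000, input_tokens=2000, output_tokens=500, pricing=pricing)
trimmed = monthly_cost(1000, input_tokens=1000, output_tokens=500, pricing=pricing)
print(f"baseline=${baseline:.2f} trimmed=${trimmed:.2f}")
```

Even this simple model shows why shortening prompts or retrieved context is often the first cost lever to try.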

Outlines

هذا القسم متوفر فقط للمشتركين. يرجى الترقية للوصول إلى هذه الميزة.

قم بالترقية الآنMindmap

هذا القسم متوفر فقط للمشتركين. يرجى الترقية للوصول إلى هذه الميزة.

قم بالترقية الآنKeywords

هذا القسم متوفر فقط للمشتركين. يرجى الترقية للوصول إلى هذه الميزة.

قم بالترقية الآنHighlights

هذا القسم متوفر فقط للمشتركين. يرجى الترقية للوصول إلى هذه الميزة.

قم بالترقية الآنTranscripts

هذا القسم متوفر فقط للمشتركين. يرجى الترقية للوصول إلى هذه الميزة.

قم بالترقية الآنتصفح المزيد من مقاطع الفيديو ذات الصلة

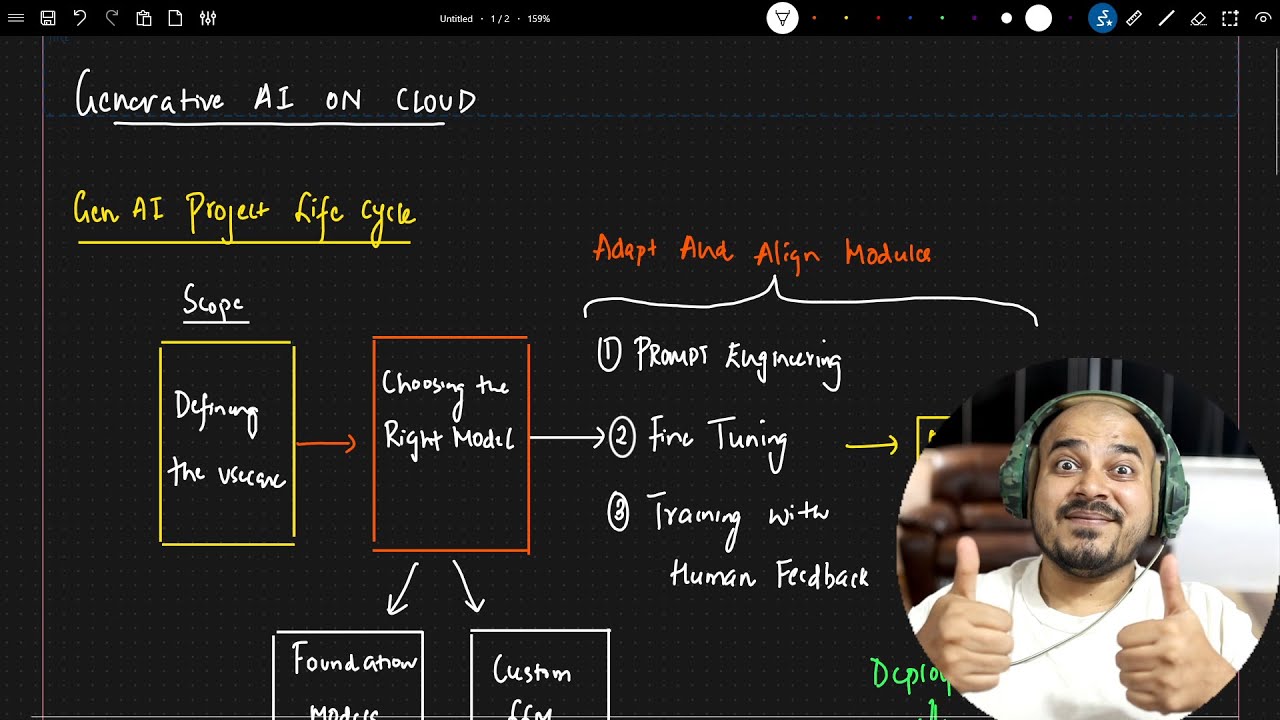

Generative AI Project Lifecycle-GENAI On Cloud

Intro to Generative AI for Busy People

Roadmap to Learn Generative AI(LLM's) In 2024-Krish Naik Hindi #generativeai

Simplifying Generative AI : Explaining Tokens, Parameters, Context Windows and more.

Introduction to Generative AI

Introduction to Generative AI

5.0 / 5 (0 votes)