Design an ML Recommendation Engine | System Design

Summary

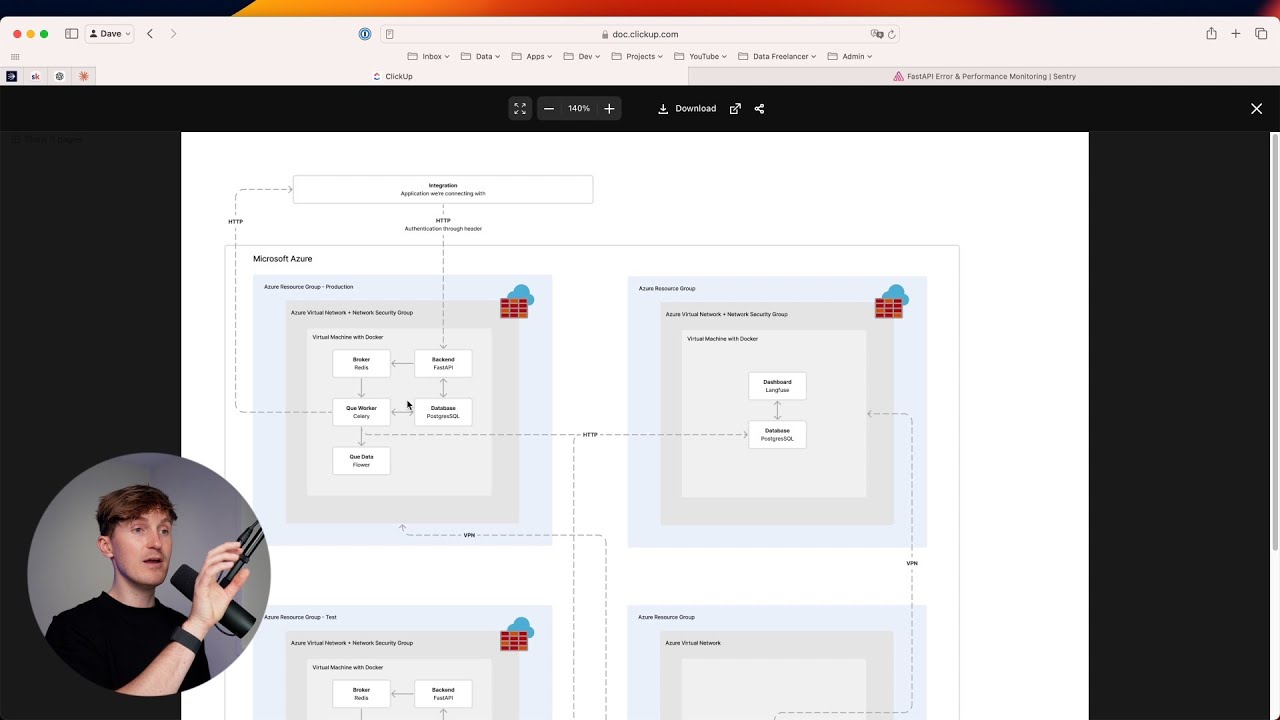

TLDRThis video outlines the design and deployment of an AI-based recommendation system for a large-scale social media platform. It covers the entire process, from training a machine learning model on user interactions (likes and dislikes) to providing real-time recommendations. The system uses a queue for efficient data handling, incremental model updates, and a caching layer for quick retrieval of recommendations. It also explores scalability, observability, and model management using tools like Airflow and MLflow. The goal is to continuously refine the recommendation engine to offer personalized content while managing data flow and system performance effectively.

Takeaways

- 😀 The goal of a recommendation system is to predict what content a user will like based on their past actions, and continually improve those recommendations over time.

- 😀 User actions such as likes and dislikes are used as training data for the machine learning model, which outputs the probability that a user will like specific content.

- 😀 The machine learning model is trained on historical data (likes/dislikes) and generates recommendations by evaluating recent posts.

- 😀 Data storage and processing are critical—ML models need to pull data from databases, and updates to the model should be done regularly to keep recommendations relevant.

- 😀 Periodic retraining of the model is essential as user data changes, and this can be done using a Cron job or automated job scheduler.

- 😀 Tools like MLflow help track and manage machine learning models, storing model versions, metadata, and experiment results for better model performance tracking.

- 😀 To scale a recommendation system, incremental training is useful—only new data needs to be added, instead of retraining the entire model each time.

- 😀 A queue can be introduced to manage new user actions and simplify the training process by batching and incrementally updating the model.

- 😀 Using a workflow orchestration tool like Airflow improves observability and scalability of the system, monitoring when jobs succeed, fail, and their durations.

- 😀 An inference API can serve real-time recommendations by loading the trained model and generating recommendations based on current data, with potential optimizations such as caching to reduce latency.

- 😀 Load balancing and distributed systems are essential for large-scale systems, with training, inference, and APIs able to scale horizontally across multiple machines to meet growing demands.

Q & A

What is the main purpose of a recommendation engine in a social media platform?

-A recommendation engine is designed to suggest content to users based on their past interactions (likes, dislikes). It learns from these actions to continuously improve the suggestions it provides, creating a feedback loop that helps make better recommendations over time.

What data is typically used to train a machine learning model for a recommendation system on a social media platform?

-The primary data used includes user actions such as likes and dislikes, along with metadata about recent posts. This historical data is used to train the machine learning model to predict which posts a user is most likely to engage with.

How does the recommendation engine generate recommendations for users?

-The engine processes recent posts and applies a trained model to predict the likelihood that each user will like a given post. These predictions are represented as probabilities between 0 and 1, and posts are sorted to generate the top recommendations.

What are the key components involved in training a machine learning model for a recommendation system?

-The key components include a training server that fetches data (likes and dislikes) from a database, a machine learning algorithm that trains the model, and an ML tracking tool like MLflow to store and monitor the trained model. Periodic retraining is necessary to keep the model up-to-date with new data.

What challenges are associated with retraining machine learning models for recommendation systems in large-scale systems?

-In large-scale systems, retraining can be computationally expensive due to the need to process vast amounts of data. Downloading all historical data for each retraining cycle can create significant overhead. Incremental training, where the model is updated with only new data, helps mitigate this issue.

What role does a queue play in the recommendation system’s training process?

-A queue helps decouple the recommendation engine from the database by holding new data (likes and dislikes) that needs to be processed. This allows the training server to pull batches of data from the queue, ensuring efficient handling of new information without overloading the system.

How does using an orchestration platform like Airflow improve the recommendation system’s training process?

-Airflow helps schedule and monitor training jobs, providing visibility into when processes run, what failed, and how long tasks take. It allows for better scalability and error handling compared to simple Cron jobs, and can facilitate distributed training across multiple machines.

What is the difference between inference and training in a recommendation system?

-Training involves using historical data to create and update a machine learning model, while inference is the process of using that trained model to generate recommendations in real time based on new data (such as recent posts) that users interact with.

Why might a recommendation system use an inference server and a cache for delivering recommendations?

-An inference server allows recommendations to be precomputed in batches, reducing latency when serving recommendations to users. The cache stores these precomputed recommendations, ensuring fast delivery without having to run the model each time, and removing outdated recommendations automatically.

What are some scalability concerns and potential optimizations for a large-scale recommendation system?

-Scalability concerns include handling large volumes of data, ensuring low latency for real-time recommendations, and managing computational resources. Optimizations include distributing the training job across multiple machines, using load balancing for inference, and preprocessing data with distributed analytics frameworks.

Outlines

هذا القسم متوفر فقط للمشتركين. يرجى الترقية للوصول إلى هذه الميزة.

قم بالترقية الآنMindmap

هذا القسم متوفر فقط للمشتركين. يرجى الترقية للوصول إلى هذه الميزة.

قم بالترقية الآنKeywords

هذا القسم متوفر فقط للمشتركين. يرجى الترقية للوصول إلى هذه الميزة.

قم بالترقية الآنHighlights

هذا القسم متوفر فقط للمشتركين. يرجى الترقية للوصول إلى هذه الميزة.

قم بالترقية الآنTranscripts

هذا القسم متوفر فقط للمشتركين. يرجى الترقية للوصول إلى هذه الميزة.

قم بالترقية الآنتصفح المزيد من مقاطع الفيديو ذات الصلة

I ACED my Technical Interviews knowing these System Design Basics

2: Instagram + Twitter + Facebook + Reddit | Systems Design Interview Questions With Ex-Google SWE

TikTok Akan Mendisrupsi Semua Industri. Begini Strateginya.

How to Find, Build, and Deliver GenAI Projects

How to grow on social media as an artist

TOP 7 New AI Business Ideas for Beginners in 2024 (No money)

5.0 / 5 (0 votes)