How I Recoded Google in 7 Days (original title: Comment j'ai recodé Google en 7 jours)

Summary

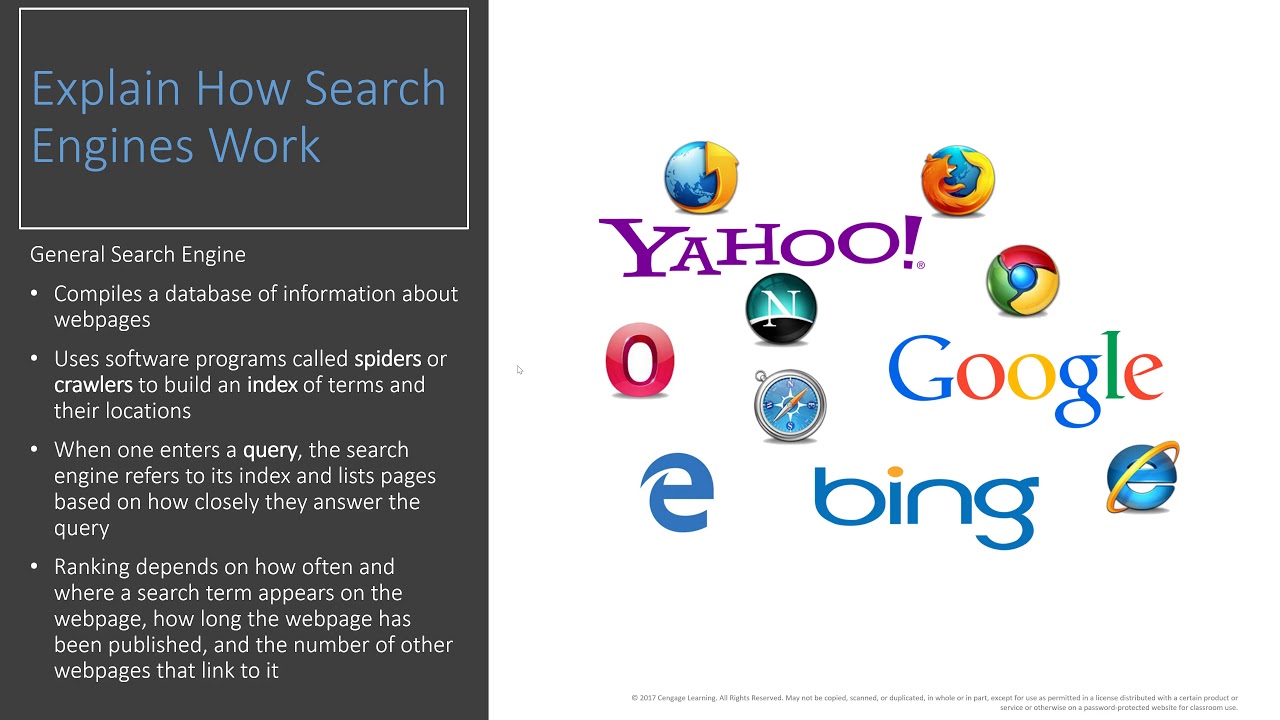

TLDR: The video explores how to build a web search engine from scratch. It starts by explaining web crawling, using multiple crawlers in parallel to find web pages. An indexing system is then built to enable fast searching, with results ranked using TF-IDF scoring and the PageRank algorithm. The system is hosted on a VPS and served with FastAPI, and a WordPress website showcases the search engine. Overall, the project demonstrates the core concepts that power search engines like Google: crawling, indexing, and ranking web pages.
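
The summary names PageRank without showing how it works. As a rough illustration only, here is a minimal power-iteration sketch. The function name `pagerank`, the damping factor of 0.85, the iteration count, and the toy graph are assumptions for this example, not details taken from the video.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Approximate PageRank by power iteration.

    `links` maps each page to the list of pages it links to.
    """
    # Collect every page that appears as a source or as a target.
    pages = set(links) | {t for targets in links.values() for t in targets}
    n = len(pages)
    rank = {page: 1.0 / n for page in pages}

    for _ in range(iterations):
        # Every page receives a small baseline share of rank.
        new_rank = {page: (1.0 - damping) / n for page in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # Split this page's rank evenly across its outgoing links.
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # A page with no outgoing links spreads its rank over all pages.
                share = damping * rank[page] / n
                for other in pages:
                    new_rank[other] += share
        rank = new_rank
    return rank


# Hypothetical three-page web: "c" is linked from both "a" and "b".
toy_graph = {"a": ["b", "c"], "b": ["c"], "c": ["a"]}
ranks = pagerank(toy_graph)
```

In this toy graph, page `c` ends up ranked above page `b` because it receives links from two pages rather than one.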

Q & A

Who is considered to have created the very first search engine, and what was its name?

-The first search engine for the web is often considered to be Aliweb, announced in 1993 by Martijn Koster.

What year did Tim Berners-Lee invent the World Wide Web?

-Tim Berners-Lee invented the World Wide Web in 1989.

What innovative method did the script discuss for indexing the web?

-The script discussed the use of a web crawler, a program that visits web pages to gather information and links to index the web.
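
The link-gathering step of a crawler can be sketched with Python's standard library alone. This is an illustrative sketch, not the code from the video: the class `LinkExtractor`, the helper `extract_links`, and the sample HTML are hypothetical, and the page is supplied as a string so the example runs without network access.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urldefrag


class LinkExtractor(HTMLParser):
    """Collects absolute http(s) links from the <a href="..."> tags of a page."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        for name, value in attrs:
            if name == "href" and value:
                # Resolve relative links and drop the "#fragment" part.
                absolute, _fragment = urldefrag(urljoin(self.base_url, value))
                # Skip non-web schemes such as mailto:.
                if absolute.startswith(("http://", "https://")):
                    self.links.append(absolute)


def extract_links(html, base_url):
    parser = LinkExtractor(base_url)
    parser.feed(html)
    return parser.links


sample_html = (
    '<p><a href="/about">About</a> '
    '<a href="https://example.org/x#top">X</a> '
    '<a href="mailto:a@b.c">Mail</a></p>'
)
found = extract_links(sample_html, "https://example.com/index.html")
```

A real crawler would download each page first and then push the extracted links onto its queue of pages to visit.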

Who was Matthew Gray, and what was his contribution to the early web?

-Matthew Gray was an MIT student who created the Wanderer in 1993 to estimate how many websites existed, introducing an early form of web crawling.

What is the significance of the 'robots.txt' file according to the script?

-The 'robots.txt' file lets a website tell crawlers which pages they should not visit or index. Honoring it is part of respecting web conventions and the wishes of site owners.
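
Python's standard library includes a parser for these files, so a crawler can check a URL before fetching it. The sketch below is only an illustration: the rules, the user agent name `toy-crawler`, and the URLs are made up, and the rules are parsed from a list of lines rather than downloaded.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, given as lines instead of fetched from a site.
robots_lines = [
    "User-agent: *",
    "Disallow: /private/",
    "Crawl-delay: 2",
]

parser = RobotFileParser()
parser.parse(robots_lines)

# Ask whether our (made-up) crawler may fetch each URL.
allowed_home = parser.can_fetch("toy-crawler", "https://example.com/index.html")
allowed_private = parser.can_fetch("toy-crawler", "https://example.com/private/data.html")

# Some sites also request a minimum delay between requests.
delay = parser.crawl_delay("toy-crawler")
```

Against a live site, `set_url()` followed by `read()` would load the file from the server instead of using `parse()`.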

What does the script describe as a potential legal issue with web crawlers?

-The script mentions that if multiple crawlers download pages from the same site simultaneously, they could overwhelm the server, which amounts to a denial-of-service (DoS) attack and is illegal.

What is the 'Deep Web' as described in the script?

-The Deep Web refers to parts of the internet not indexed by search engines, often because site owners have indicated a preference not to be included in search engine databases.

What solution does the script propose for managing the queue of sites to be crawled, considering the memory constraints?

-The script suggests creating a separate database table for the queue to efficiently manage memory usage, despite potential slowdowns due to database searches.
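
One way to picture a queue kept in a database table is the SQLite sketch below. The summary does not say which database or schema the video uses, so SQLite, the table name `queue`, and the helper names are assumptions made for this example.

```python
import sqlite3


def open_queue(path=":memory:"):
    """Open (or create) a database holding the crawl queue."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS queue ("
        " id INTEGER PRIMARY KEY AUTOINCREMENT,"
        " url TEXT NOT NULL UNIQUE)"
    )
    return conn


def enqueue(conn, url):
    # INSERT OR IGNORE skips URLs that are already waiting in the queue.
    with conn:
        conn.execute("INSERT OR IGNORE INTO queue (url) VALUES (?)", (url,))


def dequeue(conn):
    """Remove and return the oldest queued URL, or None if the queue is empty."""
    with conn:
        row = conn.execute(
            "SELECT id, url FROM queue ORDER BY id LIMIT 1"
        ).fetchone()
        if row is None:
            return None
        conn.execute("DELETE FROM queue WHERE id = ?", (row[0],))
        return row[1]
```

The queue then lives on disk rather than in RAM, at the cost of a database round trip per operation. Because dequeued rows are deleted, a separate record of visited URLs would still be needed to avoid crawling the same page twice.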

What is the primary bottleneck for the speed of web crawlers as mentioned in the script?

-The primary bottleneck is the speed at which requests are made and processed, along with the number of crawlers running in parallel.
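
Because most of a crawler's time is spent waiting on network responses, running several fetches at once raises throughput. The sketch below uses a thread pool as one possible approach. The summary does not say how the video parallelizes its crawlers, so threads, the worker count, and the stand-in `fake_fetch` function (which sleeps instead of making a request) are all assumptions.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_fetch(url):
    """Stand-in for a network request: waits briefly, then returns a dummy result."""
    time.sleep(0.05)
    return url, len(url)


def crawl_in_parallel(urls, workers=4, fetch=fake_fetch):
    # Each worker thread handles one URL at a time; map() keeps the input order.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(fetch, urls))


urls = [f"https://example.com/page{i}" for i in range(8)]
results = crawl_in_parallel(urls)
```

With four workers, the eight simulated fetches overlap instead of running back to back, which is the effect that adding crawlers has on real requests.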

What indexing method is described to improve search result relevance and how does it work?

-The TF-IDF (Term Frequency-Inverse Document Frequency) method is described. It improves relevance by scoring a word higher the more often it appears in a document and lower the more documents it appears in, so words that are common everywhere count for little.
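
A small worked example may make the scoring concrete. This sketch uses one common TF-IDF variant (relative term frequency multiplied by the natural log of the inverse document frequency). The exact formula used in the video is not given in this summary, and the function name and sample documents are hypothetical.

```python
import math
from collections import Counter


def tf_idf_scores(documents):
    """Return one {term: score} dictionary per document."""
    tokenized = [doc.lower().split() for doc in documents]
    n_docs = len(tokenized)

    # Document frequency: in how many documents does each term appear?
    doc_freq = Counter()
    for tokens in tokenized:
        doc_freq.update(set(tokens))

    scores = []
    for tokens in tokenized:
        counts = Counter(tokens)
        total = len(tokens)
        scores.append({
            # tf = share of the document taken by the term,
            # idf = log(number of documents / documents containing the term)
            term: (count / total) * math.log(n_docs / doc_freq[term])
            for term, count in counts.items()
        })
    return scores


docs = ["the cat sat", "the dog barked", "the cat purred"]
scores = tf_idf_scores(docs)
```

Here "the" appears in every document, so its score is zero, while "sat" appears in only one document and scores higher than "cat", which appears in two.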

How does the script suggest making a web crawler more efficient while avoiding legal issues?

-By using a dictionary to track when each domain was last crawled and enforcing a respectful delay before making a new request to the same domain, thus avoiding overloading servers.
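
The dictionary-based delay can be sketched as follows. The class name `DomainThrottle`, the one-second default delay, and the injectable `clock` parameter (included so the behavior can be checked without waiting) are assumptions for illustration, not details from the video.

```python
import time
from urllib.parse import urlparse


class DomainThrottle:
    """Remembers when each domain was last requested and enforces a minimum delay."""

    def __init__(self, min_delay=1.0, clock=time.monotonic):
        self.min_delay = min_delay
        self.clock = clock
        self.last_request = {}  # domain -> time of the last permitted request

    def ready(self, url):
        """Return True and record the request if the domain may be contacted now."""
        domain = urlparse(url).netloc
        now = self.clock()
        last = self.last_request.get(domain)
        if last is not None and now - last < self.min_delay:
            # Too soon: the caller should try another URL or wait.
            return False
        self.last_request[domain] = now
        return True
```

A crawler would call `ready(url)` before each request and, when it returns `False`, put the URL back in the queue and move on to a different domain.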
