OpenAI-o1 x Cursor | Use Cases - XML Prompting - AI Coding ++

Summary

TLDR: In this video, the creator explores the use of OpenAI's GPT-3.5 and GPT-4 models on the coding platform, Cursor. They test different Cursor rules to enhance productivity and experiment with structured prompts using XML tags for clearer instructions. The video compares the efficiency of GPT-3.5 and GPT-4 for coding tasks, highlighting GPT-3.5's speed and reliability. The creator also discusses the benefits of GPT-4's larger output limit for complex refactoring jobs. They demonstrate using these models to create folder structures and automate website updates, showcasing practical applications and workflow improvements.

Takeaways

- 😀 The video explores the use of OpenAI's GPT-3.5 and GPT-4 models, focusing on the 'mini' version for coding within the Cursor platform.

- 🔧 The creator has been testing Cursor's AI rules to enhance productivity and is experimenting with XML tags to structure prompts more effectively.

- 📊 A Reddit post is discussed, comparing GPT-3.5 to GPT-4, with the conclusion that GPT-3.5 is currently superior for daily tasks due to its speed and reliability.

- 📝 The video highlights the 64k output token limit of GPT-4 as a significant advantage for large refactoring jobs, allowing more extensive code generation in fewer prompts.

- 💬 The necessity for precise prompts with GPT-4 is emphasized due to its longer 'thinking time', suggesting that getting the prompt right the first time is crucial.

- 🛠️ Cursor's implementation of GPT-4 is noted to have some bugs, such as occasional failures to output text along with code.

- 📁 The video demonstrates using structured prompts with XML tags to generate complex folder structures and code, leveraging GPT-4's capabilities.

- 🔄 The creator shares a workflow of switching between GPT-4 for initial setup and GPT-3.5 for debugging and fine-tuning.

- 🎥 A practical example is given where GPT-4 is used to automate the process of updating video content on the creator's website, showcasing a real-world application.

- 🔗 The video concludes with a call to action for viewers to experiment with the models, share their findings, and consider integrating these techniques into their own workflows.

Q & A

What is the main focus of the video?

- The main focus of the video is to explore the use of OpenAI's GPT-3.5 and GPT-4 models, particularly the 'mini' version, for coding tasks using the Cursor platform. The video also discusses the effectiveness of different models for productivity and coding efficiency.

What is Cursor and how does it relate to the video's content?

- Cursor is a code editor that the video uses to test different coding rules and to integrate with OpenAI models for coding assistance. The video explores using Cursor to rewrite prompts with XML tags for more precise instructions when using AI models.

Why does the video mention XML tags in the context of coding?

- The video mentions XML tags as a method to structure and clarify prompts for AI models. Wrapping each part of a prompt (for example the task, the context, and the constraints) in its own tag gives the AI more precise instructions, which can lead to better and more efficient code generation.
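As a rough illustration of the idea, the sketch below assembles a prompt from named sections, each wrapped in a matching pair of XML-style tags. The tag names (`task`, `context`, `constraints`, `output_format`) and the example text are assumptions chosen for illustration; the video's actual prompts are not reproduced here.

```python
def build_prompt(sections: dict[str, str]) -> str:
    """Wrap each named section in a matching pair of XML-style tags."""
    parts = []
    for tag, body in sections.items():
        parts.append(f"<{tag}>\n{body.strip()}\n</{tag}>")
    # A blank line between sections keeps the prompt readable.
    return "\n\n".join(parts)


# Hypothetical section names and contents, for illustration only.
prompt = build_prompt({
    "task": "Refactor the date helpers into a single module.",
    "context": "The project is a small web app with scattered utility files.",
    "constraints": "Keep public function names unchanged.",
    "output_format": "Return each changed file in full.",
})
print(prompt)
```

The resulting string can be pasted into the editor's chat box as-is. Keeping one concern per tag makes it easier to revise a single part of the prompt without rewriting the rest.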

What are the advantages of using GPT-4 'mini' mentioned in the video?

- The video highlights the 64k output tokens of GPT-4 'mini' as an advantage, allowing for larger refactoring jobs to be done in fewer prompts due to the increased output limit compared to GPT-3.5.

What is the 'Chain of Thought' mentioned in the video in relation to AI models?

- The 'Chain of Thought' refers to the process where AI models think through intermediate steps before answering, which can be time-consuming. The video suggests that with GPT-4 'mini', users should write more specific prompts so that the first response is usable and the wait for the AI's thought process is not repeated.

What is the verdict on GPT-3.5 vs. GPT-4 'mini' in the video?

- The video concludes that for day-to-day coding tasks, GPT-3.5 is still considered better due to its speed and reliability, while GPT-4 'mini' is more suitable for large refactoring jobs that can benefit from its 64k output token limit.

How does the video suggest improving prompts for AI models?

- The video suggests improving prompts by using more structured language with XML tags to provide clear and detailed instructions to the AI, which can help in getting more accurate and efficient code generation.

What is the significance of the 64k output tokens in GPT-4 'mini' as discussed in the video?

- The 64k output tokens in GPT-4 'mini' allow for the generation of more extensive code in a single prompt, which is beneficial for creating large-scale project structures or refactoring without needing to split the task into multiple prompts.

How does the video demonstrate the use of GPT-4 'mini' for creating folder structures?

- The video demonstrates using GPT-4 'mini' to create complex folder structures by providing a detailed prompt wrapped in XML tags. It then uses the large output token capacity to generate all the necessary files and folders in one go.
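One way to turn a single large response into files on disk is sketched below. It assumes the model was asked to return every file inside a `<file path="...">` tag; that response format, the `write_files` helper, and the sample paths are all hypothetical and are not taken from the video.

```python
import re
import tempfile
from pathlib import Path

# Assumed response format: <file path="relative/path">contents</file>
FILE_BLOCK = re.compile(r'<file path="([^"]+)">\n?(.*?)</file>', re.DOTALL)


def write_files(response: str, root: Path) -> list[Path]:
    """Create every file found in the response underneath root."""
    root = root.resolve()
    written = []
    for rel_path, content in FILE_BLOCK.findall(response):
        target = (root / rel_path).resolve()
        # Refuse paths that would escape the project folder.
        if not target.is_relative_to(root):
            raise ValueError(f"unsafe path: {rel_path}")
        target.parent.mkdir(parents=True, exist_ok=True)
        target.write_text(content, encoding="utf-8")
        written.append(target)
    return written


# Hypothetical model output used only to exercise the helper.
sample_response = '''<file path="src/app.py">
print("hello")
</file>
<file path="src/utils/__init__.py">
</file>
<file path="README.md">
# Demo
</file>'''

with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp).resolve()
    paths = write_files(sample_response, root)
    created = sorted(p.relative_to(root).as_posix() for p in paths)

print(created)
```

Inside an editor such as Cursor the files are normally applied through the editor itself, so a script like this is only relevant when the response is handled outside the editor.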

What is the practical application shown in the video for automating website updates?

- The video shows a practical application where GPT-4 'mini' is used to create a script that can update a website's video section by generating a description based on a video title and URL, streamlining the process of adding new content to the site.
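The script from the video is not shown in this summary, so the following is only a minimal sketch of what such an update step could look like. It assumes the site renders its video section from a JSON list of entries; `add_video`, `placeholder_description`, the field names, and the example URL are hypothetical, and the description function is a stand-in for a real model call.

```python
import json
from typing import Callable


def placeholder_description(title: str, url: str) -> str:
    """Stand-in for a model call that would write the description."""
    return f"Watch '{title}' at {url}."


def add_video(
    videos_json: str,
    title: str,
    url: str,
    describe: Callable[[str, str], str] = placeholder_description,
) -> str:
    """Return the JSON list with a new entry at the top, skipping duplicates."""
    videos = json.loads(videos_json)
    if any(video["url"] == url for video in videos):
        return videos_json  # Already listed, nothing to change.
    videos.insert(0, {
        "title": title,
        "url": url,
        "description": describe(title, url),
    })
    return json.dumps(videos, indent=2)


existing = "[]"
updated = add_video(existing, "Demo video", "https://example.com/videos/1")
print(updated)
```

Passing the description function as a parameter keeps the file-update logic testable without calling any model; a real implementation would swap in its own function there.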

Outlines

هذا القسم متوفر فقط للمشتركين. يرجى الترقية للوصول إلى هذه الميزة.

قم بالترقية الآنMindmap

هذا القسم متوفر فقط للمشتركين. يرجى الترقية للوصول إلى هذه الميزة.

قم بالترقية الآنKeywords

هذا القسم متوفر فقط للمشتركين. يرجى الترقية للوصول إلى هذه الميزة.

قم بالترقية الآنHighlights

هذا القسم متوفر فقط للمشتركين. يرجى الترقية للوصول إلى هذه الميزة.

قم بالترقية الآنTranscripts

هذا القسم متوفر فقط للمشتركين. يرجى الترقية للوصول إلى هذه الميزة.

قم بالترقية الآنتصفح المزيد من مقاطع الفيديو ذات الصلة

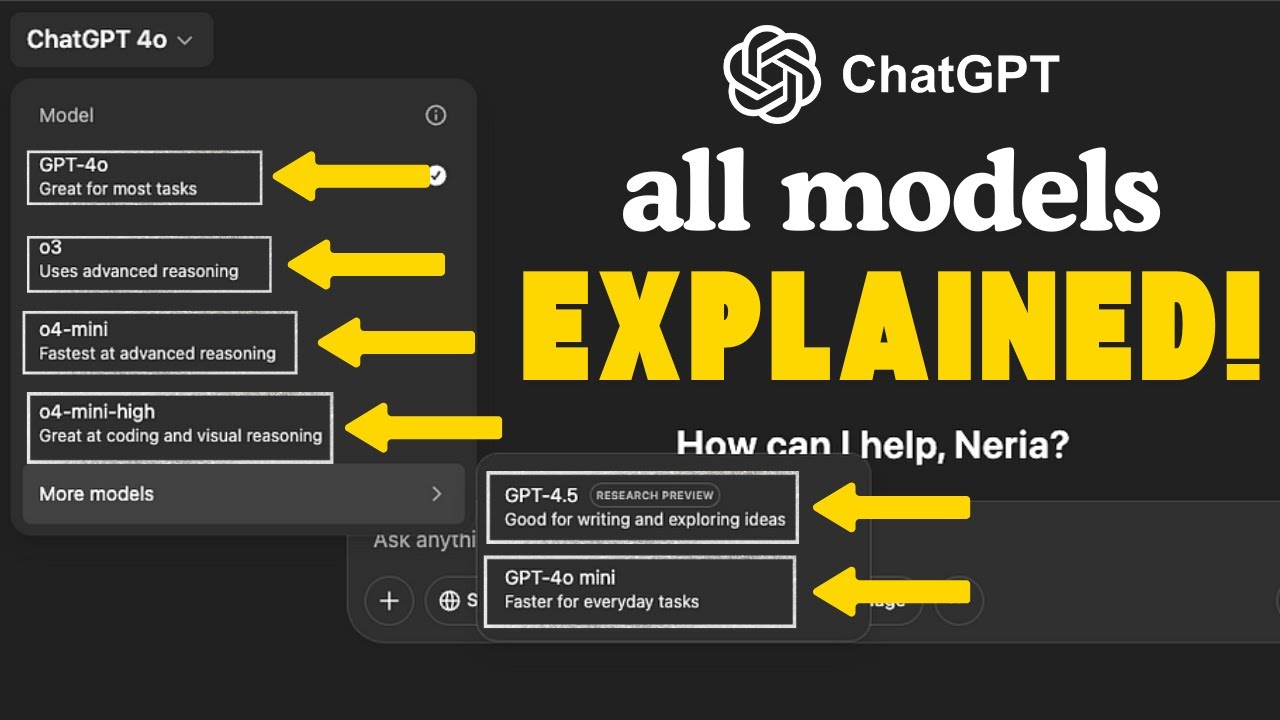

How to Use Different ChatGPT Models (Full Beginner's Guide)

Have I Finally Found A Free Alternative To Elevenlabs?

🛑 Stop Making WordPress Affiliate Websites (DO THIS INSTEAD)

ChatGPT Operator is expensive....use this instead (FREE + Open Source)

OpenAI o1 | GPT-5 | Finalmente 🍓🍓🍓🍓🍓🍓🍓🍓🍓🍓🍓🍓🍓🍓🍓🍓🍓🍓🍓🍓🍓🍓🍓🍓🍓🍓🍓🍓🍓🍓🍓🍓🍓🍓🍓🍓🍓🍓🍓🍓🍓🍓🍓🍓🍓🍓🍓🍓🍓🍓🍓🍓🍓🍓🍓🍓🍓🍓🍓🍓🍓🍓🍓🍓🍓🍓🍓🍓🍓

ChatGPT o1-preview model python strategy made 432% profit (FULL tutorial)

5.0 / 5 (0 votes)