Algorithms Lesson 6: Big O, Big Omega, and Big Theta Notation

Summary

TLDR: This lesson examines algorithm efficiency through Big O, Big Omega, and Big Theta notation, focusing on how an algorithm's speed scales with input size. It first defines these mathematical bounds on real-valued functions and uses graphs to show how a simple function, scaled by a constant, can bound the growth of a more complex one. The discussion then moves to their application in algorithms, where the input is an integer such as the size of an array being sorted. The lesson aims to give a visual understanding of these bounds; concrete algorithmic examples follow in later lessons.

Takeaways

- 🔍 The lesson focuses on analyzing the efficiency of algorithms, specifically their scalability and speed, in relation to input size.

- 📏 It introduces the use of the letter 'N' to represent an unknown input size, which is a common practice in algorithm analysis.

- 📊 Three types of bounds are discussed to formalize the growth of an algorithm's operations relative to input size: Big O, Big Omega, and Big Theta.

- 📈 Big O notation describes an upper bound on the growth of a function: f(x) is less than or equal to C * g(x) for some positive constant C and for all x greater than some threshold x0.

- 📉 Big Omega notation describes a lower bound: f(x) is greater than or equal to C * g(x) for some positive constant C and for all x greater than or equal to some threshold x0.

- 🔗 Big Theta notation is used when a function is bounded both from above and from below by constant multiples of the same function g(x), meaning that f(x) grows at the same rate as g(x) up to a constant factor.

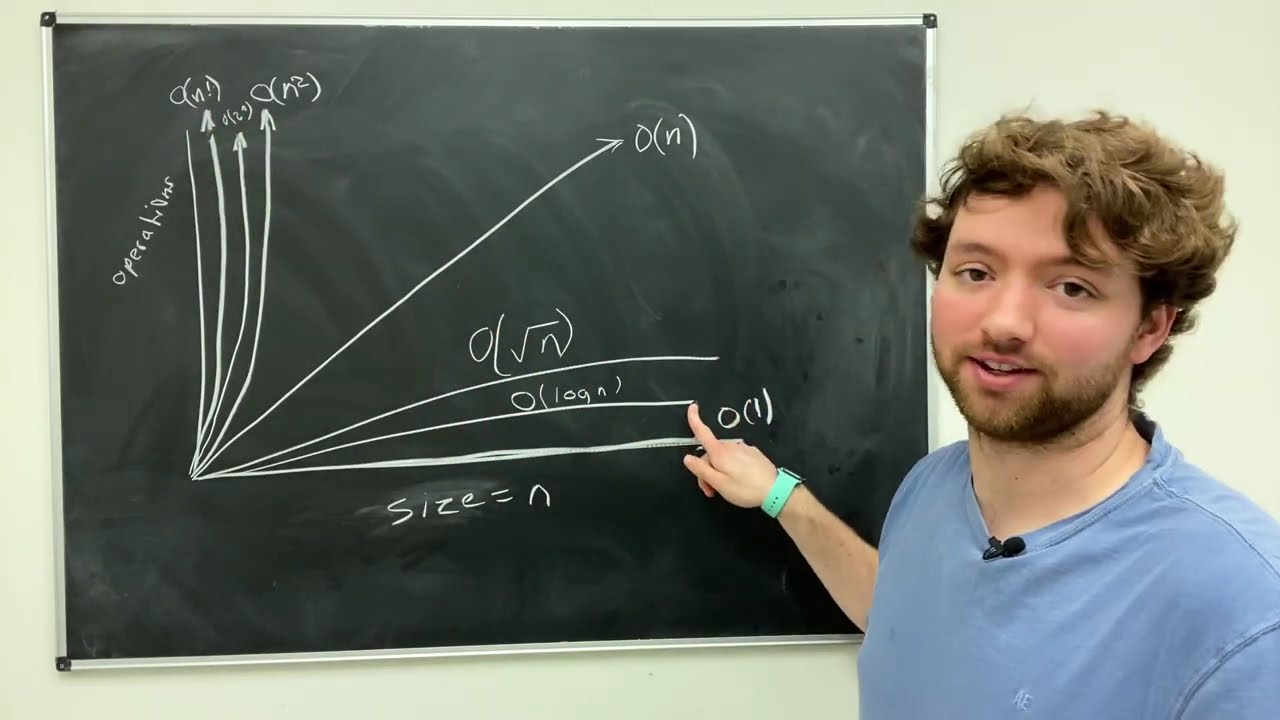

- 📋 The lesson provides a visual representation of these bounds using graphs to help understand how they apply to real functions and algorithms.

- 💡 The concept of bounding is crucial for understanding the scalability of algorithms when applied to large datasets.

- 🔢 The lesson emphasizes that, in algorithm analysis, these notations are applied to functions defined on positive integers, because the input size is a positive integer such as the size of an array.

- 📚 The lesson concludes with a promise of upcoming lessons that will provide concrete examples of these notations applied to specific algorithms.
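The three bounds from the takeaways can be written compactly in symbols. This is a sketch of the standard definitions, assuming the threshold is written as "x ≥ x0" throughout (using "x > x0" instead gives an equivalent definition); the subscripts on the constants are only labels.

```latex
\begin{align*}
f(x) \in O(g(x))      &\iff \exists\, C > 0,\ x_0 :\ f(x) \le C\, g(x) \ \text{ for all } x \ge x_0 \\
f(x) \in \Omega(g(x)) &\iff \exists\, C > 0,\ x_0 :\ f(x) \ge C\, g(x) \ \text{ for all } x \ge x_0 \\
f(x) \in \Theta(g(x)) &\iff \exists\, C_1, C_2 > 0,\ x_0 :\ C_2\, g(x) \le f(x) \le C_1\, g(x) \ \text{ for all } x \ge x_0
\end{align*}
```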

Q & A

What is the main focus of lesson six on algorithm efficiency?

-The main focus of lesson six is to understand an algorithm's scalability, particularly its speed, in terms of the growth in the number of operations relative to the input size.

What is the input size typically referred to in the context of algorithm analysis?

-In the context of algorithm analysis, the input size typically refers to the number of elements that an algorithm is processing, such as the number of elements in an array for a sorting algorithm.
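To make "number of operations relative to input size" concrete, the sketch below counts comparisons while sorting arrays of different sizes. Selection sort and the helper name `count_selection_sort_comparisons` are assumptions chosen for illustration; the lesson itself does not name a specific algorithm here.

```python
def count_selection_sort_comparisons(values):
    """Selection-sort a copy of `values` and return how many comparisons were made."""
    items = list(values)
    n = len(items)
    comparisons = 0
    for i in range(n - 1):
        smallest = i
        for j in range(i + 1, n):
            comparisons += 1  # one comparison per inner-loop step
            if items[j] < items[smallest]:
                smallest = j
        items[i], items[smallest] = items[smallest], items[i]
    return comparisons


# The input size n is the number of elements in the array.
for n in (10, 100, 1000):
    print(n, count_selection_sort_comparisons(range(n, 0, -1)))
# prints:
# 10 45
# 100 4950
# 1000 499500
```

Multiplying the input size by 10 multiplies the comparison count by roughly 100, which is the kind of growth the three notations are meant to describe.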

What do the letters N and n commonly represent in algorithm analysis?

-In algorithm analysis, the letter N is used to represent an unknown input size, while n is used to signify an integer variable, often representing the size of an array or a similar discrete structure.

What are the three types of bounds used to formalize the growth of functions in algorithm analysis?

-The three types of bounds used to formalize the growth of functions in algorithm analysis are Big O (O), Big Omega (Ω), and Big Theta (Θ).

What does it mean for a function f(x) to be in Big O of g(x)?

-A function f(x) is said to be in Big O of g(x) if there exist positive constants C and x0 such that f(x) is less than or equal to C * g(x) for all x greater than x0.
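The definition can be spot-checked numerically. The sketch below uses a hypothetical example, f(x) = 3x² + 5x + 2 and g(x) = x², with C = 4 and x0 = 5; the helper `upper_bound_holds` is an illustrative name. A finite check like this is only evidence, not a proof, since the definition quantifies over all x beyond x0.

```python
def upper_bound_holds(f, g, c, x0, x_max):
    """Return True if f(x) <= c * g(x) for every integer x with x0 < x <= x_max."""
    return all(f(x) <= c * g(x) for x in range(x0 + 1, x_max + 1))


def f(x):
    # Hypothetical function whose growth we want to bound.
    return 3 * x**2 + 5 * x + 2


def g(x):
    # Candidate bounding function.
    return x**2


print(upper_bound_holds(f, g, c=4, x0=5, x_max=10_000))  # True
print(upper_bound_holds(f, g, c=4, x0=0, x_max=10_000))  # False: the bound fails for x = 1..5
```

The second call shows why the threshold x0 matters: the inequality only has to hold from some point onward, not everywhere.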

How is Big Omega notation used to describe the growth of a function relative to another?

-Big Omega notation describes a lower bound on the growth of a function f(x) relative to another function g(x): there exist positive constants C and x0 such that f(x) is greater than or equal to C * g(x) for all x greater than or equal to x0.
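A lower bound can be spot-checked the same way. The sketch below assumes a hypothetical operation count T(n) = n(n - 1)/2, which is the number of comparisons made by a simple quadratic sort such as selection sort, and checks that it stays above n²/4 from n = 2 onward. The helper `lower_bound_holds` is an illustrative name, and a finite check is evidence rather than proof.

```python
from fractions import Fraction


def lower_bound_holds(f, g, c, x0, x_max):
    """Return True if f(x) >= c * g(x) for every integer x with x0 <= x <= x_max."""
    return all(f(x) >= c * g(x) for x in range(x0, x_max + 1))


def comparisons(n):
    # Hypothetical operation count: n(n - 1)/2, always a whole number.
    return n * (n - 1) // 2


def quadratic(n):
    return n * n


quarter = Fraction(1, 4)  # exact constant C = 1/4, avoids floating-point rounding
print(lower_bound_holds(comparisons, quadratic, quarter, x0=2, x_max=10_000))  # True
print(lower_bound_holds(comparisons, quadratic, quarter, x0=1, x_max=10_000))  # False: fails at n = 1
```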

What is the condition for a function f(x) to be in Big Theta of g(x)?

-A function f(x) is in Big Theta of g(x) if there exist positive constants C1, C2, and x0 such that C2 * g(x) is less than or equal to f(x), which in turn is less than or equal to C1 * g(x), for all x greater than or equal to x0.
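As a worked example, take the hypothetical function f(x) = 3x² + 5x + 2 with g(x) = x². The constants C2 = 3, C1 = 4, and x0 = 6 are one possible choice, not the only one.

```latex
\begin{align*}
3x^2 &\le 3x^2 + 5x + 2 && \text{for all } x \ge 0, \text{ since } 5x + 2 \ge 0, \\
3x^2 + 5x + 2 &\le 4x^2 && \text{for all } x \ge 6, \text{ since } x^2 - 5x - 2 \ge 0 \text{ there}, \\
\text{so}\quad 3\,g(x) &\le f(x) \le 4\,g(x) && \text{for all } x \ge 6, \text{ i.e. } f(x) \in \Theta(x^2).
\end{align*}
```

The second inequality fails for small x (for example, f(5) = 102 but 4 * 25 = 100), which is why the threshold x0 is part of the definition.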

Why are there two constants, C1 and C2, in the definition of Big Theta?

-The definition uses two constants because the upper and lower bounds generally need different multiples of g(x): C1 * g(x) bounds f(x) from above and C2 * g(x) bounds it from below. When both constants exist, f(x) grows at the same rate as g(x) up to a constant factor.

How does the concept of Big O notation help in understanding algorithm efficiency?

-Big O notation helps in understanding algorithm efficiency by providing an upper bound on the growth rate of an algorithm's running time or space requirements, allowing us to classify and compare the scalability of different algorithms.

What is the significance of the white region in the graphs shown during the lesson?

-The white region in the graphs represents the area where the function f(x) is bounded by the function g(x) multiplied by a constant, indicating the growth rate of f(x) relative to g(x) from a certain point onward.
