Training a model to recognize sentiment in text (NLP Zero to Hero - Part 3)

Summary

TLDRIn this TensorFlow tutorial, Laurence Moroney guides viewers through building a sentiment classifier for text using natural language processing. Starting with text tokenization and preprocessing, the video progresses to training a model on a dataset of sarcastic headlines. It explains the importance of embeddings for understanding context and sentiment, and demonstrates how to implement and evaluate a neural network model for sentiment analysis, achieving promising accuracy on unseen test data.

Takeaways

- 📈 The video series is about building a sentiment classifier using TensorFlow for Natural Language Processing (NLP).

- 🗣️ The series focuses on how to tokenize text into numeric values and preprocess text data for NLP tasks.

- 📚 It uses Rishabh Misra's dataset from Kaggle, which categorizes headlines as sarcastic or not.

- 🔢 The dataset includes fields for sarcasm labels (1 for sarcastic, 0 for not), headlines, and URLs to the articles.

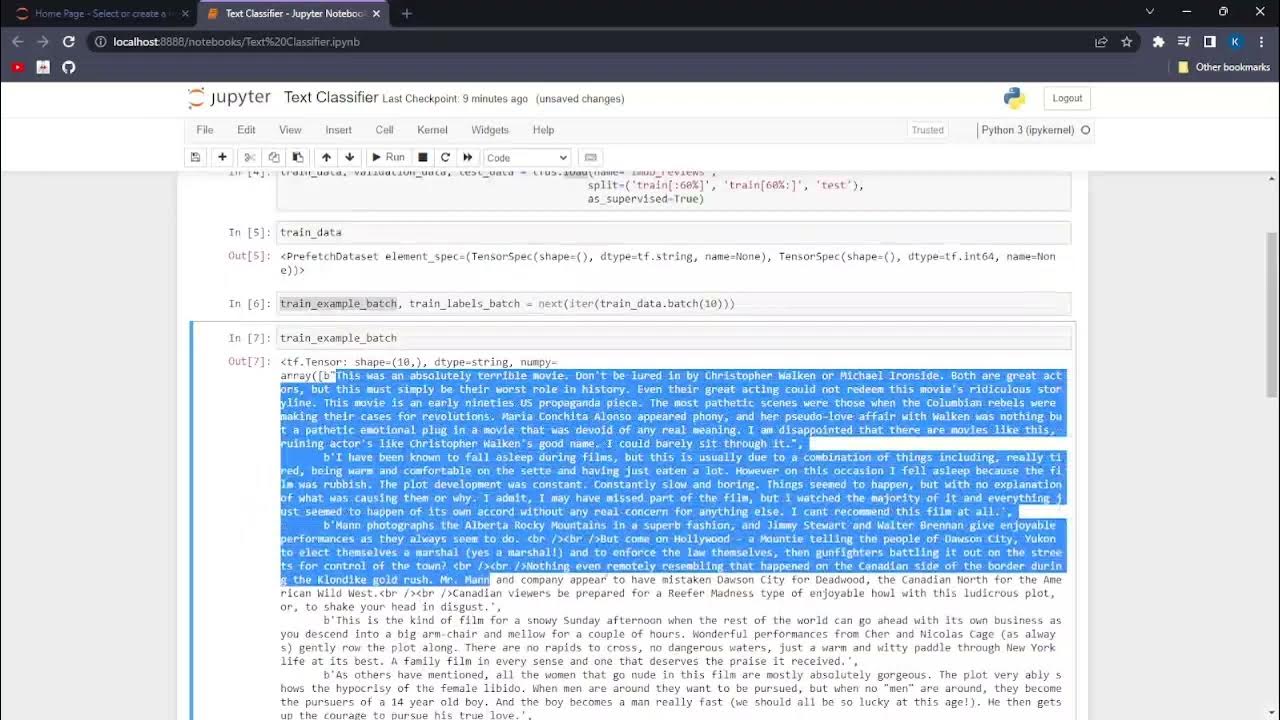

- 🔄 The data is in JSON format and needs to be converted to Python format for training purposes.

- 📝 Preprocessing steps involve tokenizing the text, creating a word index, and padding sequences to the same length.

- ✂️ The dataset is split into training and test sets to evaluate the model's performance on unseen data.

- 🔑 The tokenizer is fit only on the training data to prevent information leakage from the test set.

- 📊 The concept of embeddings is introduced to understand the context and meaning of words in a multi-dimensional space.

- 🤖 A neural network model is built with an embedding layer, global average pooling, and a deep learning layer for classification.

- 📊 The model achieves high accuracy on both training and test data, indicating its effectiveness in recognizing sarcasm in text.

- 🔧 The video provides code examples and encourages viewers to try the process themselves using a provided Colab link.

Q & A

What is the main topic of the video series 'Zero to Hero with TensorFlow'?

-The main topic of the video series is Natural Language Processing (NLP), specifically focusing on building a classifier to recognize sentiment in text using TensorFlow.

What is the purpose of tokenizing text into numeric values in NLP?

-Tokenizing text into numeric values is a preprocessing step that allows the text data to be used in machine learning models, as these models require numerical input.

Which dataset is used in the video to train the sentiment classifier?

-The dataset used is Rishabh Misra's dataset from Kaggle, which contains headlines categorized as sarcastic or not sarcastic.

What does the 'is_sarcastic' field represent in the dataset?

-The 'is_sarcastic' field is a binary indicator where 1 represents a sarcastic headline and 0 represents a non-sarcastic one.

How is the data stored in the provided dataset?

-The data is stored in JSON format, which is then converted to Python format for training purposes.

What is the role of the tokenizer in the preprocessing of text data?

-The tokenizer is used to convert words into tokens, which are then used to create sequences of tokens for each sentence, and these sequences are padded to the same length.

Why is it important to split the data into training and testing sets?

-Splitting the data into training and testing sets is crucial for evaluating the model's performance on unseen data, ensuring that it can generalize well.

How does the video script address the issue of the tokenizer seeing the test data?

-The script suggests rewriting the code to fit the tokenizer only on the training data, ensuring that the neural network does not see the test data during training.

What is the concept of embeddings in the context of NLP and sentiment analysis?

-Embeddings are a representation of words in a multi-dimensional space where words with similar meanings are closer to each other, allowing the model to understand the context and sentiment of words.

What is the structure of the neural network used in the video for sentiment classification?

-The neural network consists of an embedding layer to learn word representations, a global average pooling layer to sum up the word vectors, and a deep neural network layer for classification.

How can the trained model be used to predict sentiment for new sentences?

-The model uses the trained tokenizer to convert new sentences into sequences, pads them to match the training set dimensions, and then makes predictions based on the learned embeddings.

What was the accuracy of the model on the test data as mentioned in the video?

-The model achieved an accuracy of 81% to 82% on the test data, which consists of words the network has never seen during training.

Outlines

هذا القسم متوفر فقط للمشتركين. يرجى الترقية للوصول إلى هذه الميزة.

قم بالترقية الآنMindmap

هذا القسم متوفر فقط للمشتركين. يرجى الترقية للوصول إلى هذه الميزة.

قم بالترقية الآنKeywords

هذا القسم متوفر فقط للمشتركين. يرجى الترقية للوصول إلى هذه الميزة.

قم بالترقية الآنHighlights

هذا القسم متوفر فقط للمشتركين. يرجى الترقية للوصول إلى هذه الميزة.

قم بالترقية الآنTranscripts

هذا القسم متوفر فقط للمشتركين. يرجى الترقية للوصول إلى هذه الميزة.

قم بالترقية الآنتصفح المزيد من مقاطع الفيديو ذات الصلة

Sequencing - Turning sentences into data (NLP Zero to Hero - Part 2)

ML with Recurrent Neural Networks (NLP Zero to Hero - Part 4)

Long Short-Term Memory for NLP (NLP Zero to Hero - Part 5)

Training an AI to create poetry (NLP Zero to Hero - Part 6)

AIP.NP1.Text Classification with TensorFlow

Natural Language Processing - Tokenization (NLP Zero to Hero - Part 1)

5.0 / 5 (0 votes)