OpenAI shocks the world yet again… Sora first look

Summary

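TLDR: OpenAI recently released Sora, an advanced AI video model that has drawn wide attention and discussion. Sora can generate realistic videos up to one minute long from a text prompt, with image quality and frame-to-frame coherence far beyond earlier models. It can also render different aspect ratios, which gives creators more flexibility. Although Sora's technical details have yet to be fully disclosed, its performance is already striking, and it raises serious questions about how AI progress will affect human work and creativity. The video examines how Sora works, its potential applications, and what it means for the future.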

Takeaways

- 🤖 OpenAI has released Sora, a new video generation model that can produce realistic videos up to one minute long from a text prompt.

- 🚀 Sora marks a major leap for AI: it generates coherent video frames, surpassing every previous AI video model.

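- 🌐 Google released Gemini 1.5, a language model with a context window of up to 10 million tokens, on the same day, but Sora's video generation quickly made it the center of attention.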

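- 📱 Sora videos can be generated from a text prompt or from a starting image, with a high degree of realism and the ability to render in a variety of aspect ratios.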

- 🔍 Despite early worries that OpenAI had cherry-picked its examples, Sam Altman took requests on Twitter in real time, demonstrating how broadly Sora can be applied.

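- 🛡️ Because of the potential for misuse, Sora is unlikely to be opened to the public, and the videos it generates will carry C2PA metadata to track where content came from and how it was modified.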

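- 💡 Sora relies on massive compute and an approach similar to large language models, encoding visual patches in order to understand and generate video content.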

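- 🎞️ The challenges of video generation include the sheer number of data points to process and the added complexity of the time dimension; Sora overcomes these obstacles with new techniques.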

- 🌍 Sora's technology could transform video editing and content creation, making complex video production simpler and faster.

- 🎨 Although Sora's videos may still have flaws in the details, they hint at future progress in how AI simulates physics and human interaction.
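To give a sense of the sheer number of data points a video model has to handle, the sketch below counts the raw values in one minute of uncompressed video. The resolution (1920×1080), the 3 RGB channels, the 30 fps frame rate, and the `raw_value_count` helper are assumptions chosen for illustration, not figures from the video or from OpenAI.

```python
def raw_value_count(width: int, height: int, channels: int, fps: int, seconds: int) -> int:
    """Number of raw pixel values in an uncompressed clip (illustrative only)."""
    per_frame = width * height * channels  # values in a single frame
    return per_frame * fps * seconds       # times the number of frames

# Assumed example: one minute of 1080p RGB video at 30 frames per second.
total = raw_value_count(1920, 1080, 3, 30, 60)
print(f"{total:,}")  # 11,197,440,000
```

At 30 fps that is 1,800 frames, each of which has to stay consistent with its neighbors, which is one way to see why the time dimension makes video much harder than single images.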

Q & A

What new AI technology did OpenAI recently release?

- OpenAI recently released Sora, a text-to-video model that can generate highly realistic videos up to one minute long.

Where does the name Sora come from?

- The name comes from the Japanese word 空 (sora), meaning "sky".

How is Sora different from earlier AI video models?

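- Sora surpasses earlier models, such as Stable Video Diffusion and the proprietary product Pika, in realism, length (up to one minute), frame-to-frame coherence, and the ability to render videos in different aspect ratios.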

How does Sora generate videos?

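- Sora can create a video from a text prompt that describes the scene you want to see, or start from an initial image and turn it into a lifelike video.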

Why is the Sora model unlikely to be open-sourced?

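- Given how powerful Sora is, it could be put to harmful uses in the wrong hands. So although its videos will include C2PA metadata to track where content came from and how it was modified, the model itself is unlikely to be open-sourced.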

What technology is Sora based on?

- Sora is a diffusion model, like DALL·E and Stable Diffusion: it starts from random noise and refines that noise step by step until it forms a coherent image.
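The "start from noise, refine step by step" idea can be sketched with a generic DDPM-style sampler. This is not Sora's implementation, which OpenAI has not released: the linear noise schedule, the step count, and the `sample` helper are assumptions, and a hypothetical oracle that already knows the target stands in for the trained neural network that would normally predict the noise at each step.

```python
import math
import random

def linear_betas(steps: int, start: float = 1e-4, end: float = 0.02) -> list[float]:
    """Linearly spaced noise schedule (a common DDPM choice; assumed here)."""
    return [start + (end - start) * i / (steps - 1) for i in range(steps)]

def sample(target: list[float], steps: int = 200, seed: int = 0) -> list[float]:
    """Toy DDPM-style reverse process.

    A real model predicts the noise in x_t with a trained network.
    Here a hypothetical oracle computes that noise from the known target,
    so the loop only illustrates the noise-to-image update rule.
    """
    rng = random.Random(seed)
    betas = linear_betas(steps)
    alphas = [1.0 - b for b in betas]
    alpha_bars = []
    prod = 1.0
    for a in alphas:  # cumulative product of alphas
        prod *= a
        alpha_bars.append(prod)

    # Start from pure Gaussian noise.
    x = [rng.gauss(0.0, 1.0) for _ in target]
    for t in reversed(range(steps)):
        a, ab, b = alphas[t], alpha_bars[t], betas[t]
        # Oracle noise prediction (stand-in for the trained denoiser).
        eps = [(xi - math.sqrt(ab) * x0) / math.sqrt(1.0 - ab) for xi, x0 in zip(x, target)]
        # DDPM mean update: remove part of the predicted noise, then rescale.
        x = [(xi - b / math.sqrt(1.0 - ab) * ei) / math.sqrt(a) for xi, ei in zip(x, eps)]
        if t > 0:
            # Add a little fresh noise on every step except the last.
            x = [xi + math.sqrt(b) * rng.gauss(0.0, 1.0) for xi in x]
    return x

target = [0.2, -0.5, 0.9, 0.0]  # a tiny hypothetical "image"
result = sample(target)
print([round(v, 3) for v in result])
```

Because the oracle predicts the noise perfectly, the loop lands on the target values. A trained network only approximates this prediction, and in a text-to-video model that prediction is also conditioned on the prompt.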

How does Sora process video data?

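- Sora takes an approach similar to the way large language models process text: it encodes videos as visual patches, which capture both what each region looks like and how it changes over time, that is, across frames.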
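The patch idea can be illustrated by cutting a small video into blocks that each span a few frames as well as a few pixels, then flattening them into a sequence, much as text is split into tokens. OpenAI's technical report calls these spacetime patches; the sketch below is a simplified assumption that works on raw single-channel pixels, with a hypothetical `to_spacetime_patches` helper and arbitrary patch sizes.

```python
def to_spacetime_patches(video, pt, ph, pw):
    """Split a video into non-overlapping spacetime patches.

    video: nested list indexed as video[t][y][x] (single channel for brevity).
    Returns a list of patches, each flattened to a 1-D list and ordered like a
    token sequence: time first, then rows, then columns.
    """
    T, H, W = len(video), len(video[0]), len(video[0][0])
    if T % pt or H % ph or W % pw:
        raise ValueError("video dimensions must be divisible by the patch size")
    patches = []
    for t0 in range(0, T, pt):
        for y0 in range(0, H, ph):
            for x0 in range(0, W, pw):
                # Each patch covers pt frames, so it records how its pixels change over time.
                patch = [
                    video[t][y][x]
                    for t in range(t0, t0 + pt)
                    for y in range(y0, y0 + ph)
                    for x in range(x0, x0 + pw)
                ]
                patches.append(patch)
    return patches

# Hypothetical 4-frame, 4x4 video whose pixel value encodes its (t, y, x) position.
video = [[[100 * t + 10 * y + x for x in range(4)] for y in range(4)] for t in range(4)]
tokens = to_spacetime_patches(video, pt=2, ph=2, pw=2)
print(len(tokens))  # 8
print(tokens[0])    # [0, 1, 10, 11, 100, 101, 110, 111]
```

The first patch mixes values from frame 0 (0, 1, 10, 11) and frame 1 (100, 101, 110, 111), which is how a single token can carry both appearance and motion.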

What is distinctive about Sora's training data and output?

- Unlike typical video models, Sora can train on data at its native resolution and output videos at variable resolutions.
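One way to see why patches make native resolutions workable: the number of patches simply grows or shrinks with the clip's shape, so a widescreen, vertical, or square clip becomes a longer or shorter token sequence instead of being resized or cropped to a fixed size. The `patch_count` helper, the 2×16×16 patch size, and the clip shapes below are hypothetical.

```python
def patch_count(frames: int, height: int, width: int,
                pt: int = 2, ph: int = 16, pw: int = 16) -> int:
    """Number of spacetime patches (tokens) for a clip of the given shape."""
    if frames % pt or height % ph or width % pw:
        raise ValueError("clip shape must be divisible by the patch size")
    return (frames // pt) * (height // ph) * (width // pw)

# Hypothetical clip shapes (frames, height, width), with no resizing or cropping.
shapes = {
    "landscape 1280x720": (16, 720, 1280),
    "portrait 720x1280": (16, 1280, 720),
    "square 1024x1024": (16, 1024, 1024),
}
for name, (f, h, w) in shapes.items():
    print(name, patch_count(f, h, w))
# landscape 1280x720 28800
# portrait 720x1280 28800
# square 1024x1024 32768
```

Under this assumption, a model that consumes a sequence of patches only sees a change in sequence length when the resolution or aspect ratio changes, which is a plausible intuition for why native-resolution training is possible.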

How will Sora change video editing and production?

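- Sora will make video editing simpler and faster, for example by changing the background behind a moving car or creating an entirely new Minecraft world in seconds, which could have a major impact on industries such as video production and Minecraft streaming.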

What are the limitations of Sora's video generation?

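- Sora's videos are impressive, but a close look reveals flaws such as imperfect physics and unconvincing simulations of human interaction, and they still carry the distinctive look of AI-generated content.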

Outlines

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowMindmap

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowKeywords

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowHighlights

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowTranscripts

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowBrowse More Related Video

You Won't Believe OpenAI JUST Said About GPT-5! Microsoft Secret AI, Hallucination Solved, GPT2

What's New in ChatGPT-4o ?

Sora AI出场即巅峰,ChatGPT实现全面统治 | Sora视频生成模型能力详解

中共为什么取消总理记者会?|两会|总理记者会|国务院|李强|习近平|朱镕基|温家宝|李克强|王局拍案20240306

DEEPSEEK DROPS AI BOMBSHELL: A.I Improves ITSELF Towards Superintelligence (BEATS o1)

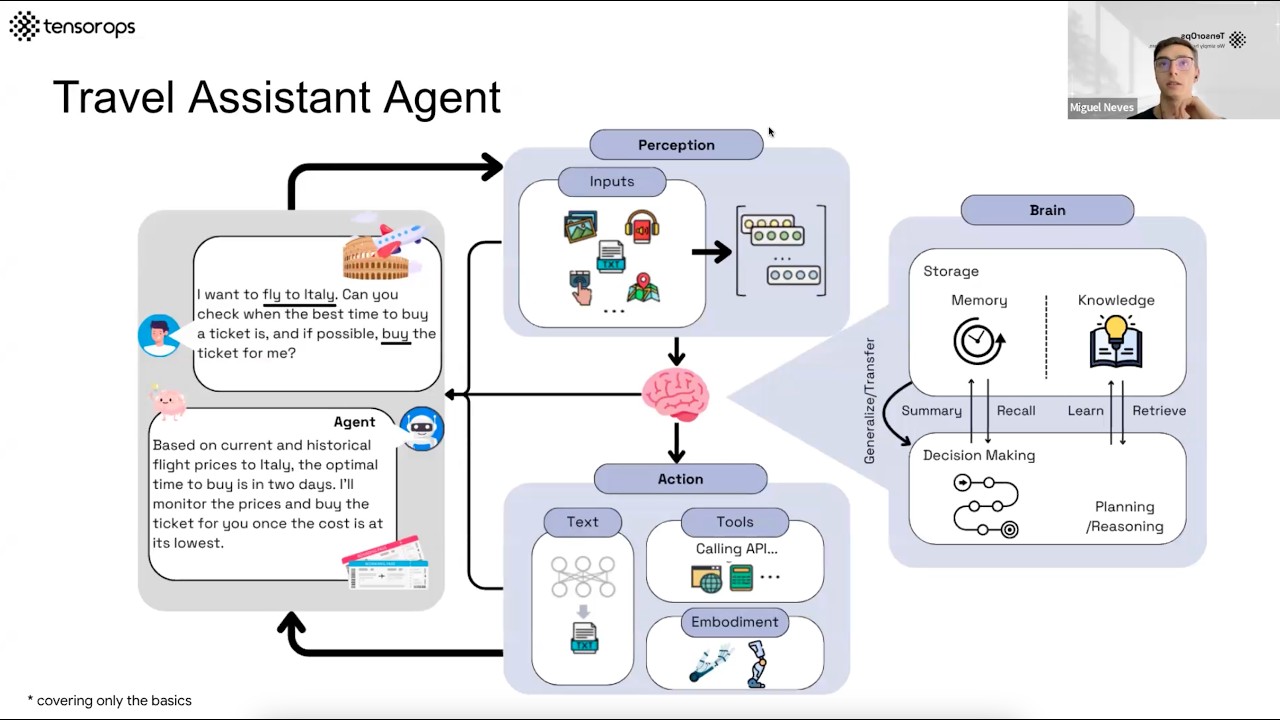

AI Agents– Simple Overview of Brain, Tools, Reasoning and Planning

5.0 / 5 (0 votes)