Ethernet Won’t Replace InfiniBand for AI Networking in 2024

Summary

TLDR: The transcript discusses the evolving debate between InfiniBand and Ethernet for AI networking, particularly in high-performance computing (HPC). The conversation contrasts the technical advantages of InfiniBand, known for its low latency and high throughput, with Ethernet's growing capabilities at 100G and 400G speeds. As Ultra Ethernet gains traction, driven by vendors like Cisco, Intel, and Broadcom, there is speculation about whether it can replace InfiniBand for AI workloads. The challenge lies in matching InfiniBand’s performance while keeping Ethernet’s cost-effectiveness and scalability. The podcast emphasizes the nuances of vendor influence and cloud adoption, and ultimately leaves the future of AI networking uncertain.

Takeaways

- 😀 **InfiniBand** has long been the preferred technology for high-performance computing (HPC) and AI workloads due to its low latency and high throughput, especially for GPU-to-GPU communication.

- 😀 **Ethernet** is gaining ground as a more cost-effective and flexible alternative, especially with developments like **Ultra Ethernet** targeting high-performance applications traditionally dominated by InfiniBand.

- 😀 While **Ethernet** offers high speeds (e.g., 400 Gbps), it still faces challenges with latency, lossless delivery, and support for **RDMA (Remote Direct Memory Access)** at scale.

- 😀 **Cloud providers** tend to favor Ethernet due to its scalability, standardization, and cost-effectiveness, making it more attractive for large-scale cloud AI workloads.

- 😀 A key difference between **InfiniBand** and **Ethernet** is the vendor ecosystem: InfiniBand is mostly driven by a single vendor (**NVIDIA/Mellanox**), while Ethernet benefits from a competitive multi-vendor environment (e.g., **Cisco, Intel, Broadcom**).

- 😀 **InfiniBand** is expected to remain a dominant solution in **on-premises AI clusters**, especially for applications that require ultra-low latency and consistent performance.

- 😀 **Ultra Ethernet** (backed by a consortium of vendors focused on high-performance Ethernet) aims to bridge the gap between Ethernet's cost advantages and the performance of InfiniBand.

- 😀 As much as **Ethernet** has advanced, it is still viewed as a ‘**snowflake**’ by those who are accustomed to **InfiniBand**-based infrastructures for AI workloads.

- 😀 The transition to Ethernet in AI clusters will largely depend on whether hardware vendors offer Ethernet-enabled **HPC hardware** and whether Ethernet can deliver the performance needed for cutting-edge workloads.

- 😀 **Vendor motivations** play a crucial role: Ethernet vendors like Cisco are investing heavily in Ethernet solutions for high-performance computing, but the true test will be whether these solutions can outperform InfiniBand in practical scenarios.

Q & A

What is the primary topic discussed in the transcript?

- The transcript discusses the competition between InfiniBand and Ethernet for AI networking, focusing on whether Ethernet can surpass InfiniBand for high-performance workloads such as those in AI and HPC (High-Performance Computing) environments.

What are the key advantages of InfiniBand for high-performance computing (HPC) and AI workloads?

- InfiniBand offers low latency, high throughput, and deterministic performance, which makes it well suited to AI workloads and HPC clusters. It also supports RDMA (Remote Direct Memory Access), allowing for faster data transfer without burdening the CPU.

Why is Ethernet seen as a potential competitor to InfiniBand in the AI space?

- Ethernet is cost-effective, scalable, and widely adopted. Recent developments, such as 400G Ethernet and RDMA over Converged Ethernet (RoCE), have improved Ethernet's performance, making it competitive in high-performance computing environments, especially for cloud-based solutions.

How does Ethernet's cost and flexibility influence its adoption in AI and HPC?

- Ethernet’s lower cost and flexibility make it an attractive option for cloud providers and organizations looking for scalable solutions. Its widespread availability also lowers the barrier to entry compared to InfiniBand, which is typically more expensive and specialized.

What challenges does Ethernet still face in replacing InfiniBand for AI workloads?

- Ethernet still faces challenges in areas such as lossless transmission, packet ordering, and consistent high-performance networking, which InfiniBand handles more effectively. While innovations like RDMA over Ethernet are emerging, Ethernet is not yet as seamless or efficient as InfiniBand for the most demanding AI workloads.

Why are cloud providers more inclined to use Ethernet over InfiniBand?

- Cloud providers prefer Ethernet due to its scalability, cost-effectiveness, and ability to support a wide variety of workloads. Homogeneity across data center infrastructure also helps reduce complexity and costs, which makes Ethernet a more attractive option than InfiniBand in many cases.

What role do vendors like Cisco, Intel, and Broadcom play in the evolution of Ethernet for AI and HPC?

- Vendors like Cisco, Intel, and Broadcom are heavily invested in improving Ethernet’s performance for AI and HPC workloads. They are working through initiatives like the Ultra Ethernet Consortium to integrate RDMA-like capabilities into Ethernet and make it a more viable alternative to InfiniBand.

Can you explain the concept of RDMA and why it is important for networking in AI?

- RDMA (Remote Direct Memory Access) allows data to be transferred directly between the memory of two computers without involving the CPU, reducing latency and improving throughput. It is crucial for AI workloads that require fast and efficient data exchange, such as training large machine learning models.
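As a rough illustration of why both latency and throughput matter for this kind of data exchange, the sketch below uses a simple first-order model: transfer time = fixed latency + size / bandwidth. The function name `transfer_time_s` and the two latency figures are hypothetical placeholders chosen for illustration, not measurements of InfiniBand or Ethernet; only the 400 Gbps link speed comes from the summary itself.

```python
def transfer_time_s(size_bytes: int, bandwidth_gbps: float, latency_us: float) -> float:
    """First-order estimate: fixed per-message latency plus serialization time."""
    bits = size_bytes * 8
    return latency_us * 1e-6 + bits / (bandwidth_gbps * 1e9)


# Hypothetical latencies, for illustration only (not measured values).
LOW_LATENCY_US = 2.0
HIGH_LATENCY_US = 20.0

# Compare a 4 KiB message and a 1 GiB transfer on the same 400 Gbps link.
for size in (4 * 1024, 1024 ** 3):
    fast = transfer_time_s(size, 400, LOW_LATENCY_US)
    slow = transfer_time_s(size, 400, HIGH_LATENCY_US)
    print(f"{size:>12} bytes: {fast * 1e6:.1f} us vs {slow * 1e6:.1f} us")
```

Under this model, the fixed latency dominates for small messages, while link bandwidth dominates for large transfers. That is one way to see why raw link speed alone does not settle the comparison.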

What is the long-term outlook for InfiniBand in the face of Ethernet's advancements?

- While Ethernet is making strides, InfiniBand is unlikely to disappear in the near future, especially in on-premises, high-performance AI clusters. It remains a strong option due to its specialized hardware and optimizations for low-latency, high-throughput applications. However, the shift towards Ethernet in some areas may continue over time, especially for less demanding workloads.

How does the perspective of the user (cloud provider vs. on-premises deployment) affect the choice between Ethernet and InfiniBand?

- For cloud providers, Ethernet’s scalability, cost-effectiveness, and ability to standardize infrastructure make it the preferred choice. In contrast, on-premises AI deployments often require the specialized performance that InfiniBand provides, particularly for large-scale, mission-critical applications where low latency and high throughput are essential.

Outlines

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowMindmap

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowKeywords

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowHighlights

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowTranscripts

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowBrowse More Related Video

What runs ChatGPT? Inside Microsoft's AI supercomputer | Featuring Mark Russinovich

What is RDMA and RoCE? SmartNICs explained.

Success Story: HPC-based Design of Wind Assisted Propulsion Technology

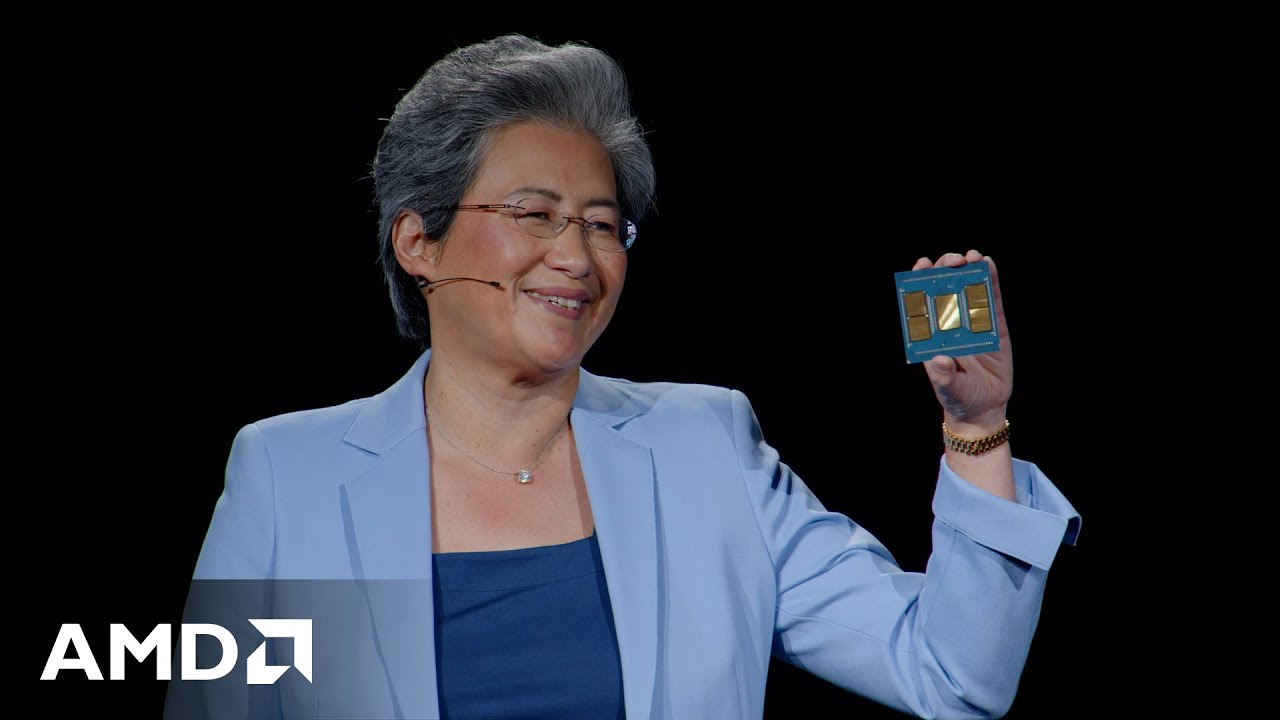

AMD Advancing AI 2024 Highlights

Maverick Chips for the Next Silicon Generation

BAGAIMANA PENGOLAHAN BIG DATA BISA DILAKUKAN? | 15 MINUTES METRO TV

5.0 / 5 (0 votes)