Range, variance and standard deviation as measures of dispersion | Khan Academy

Summary

TLDRThis video explores the concept of data dispersion, building on previous discussions about central tendency. It explains how two data sets with the same mean can differ significantly in their spread or dispersion. Various measures of dispersion, such as range, variance, and standard deviation, are introduced. The video walks through calculations for each measure using two example data sets, illustrating how variance and standard deviation offer deeper insights into the spread of data compared to simple range. It emphasizes how standard deviation is particularly useful for understanding how data points deviate from the mean.

Takeaways

- 😀 The video introduces the concept of statistical measures of spread, building on the previous video about central tendency.

- 😀 Two data sets are compared: one with values -10, 0, 10, 20, 30 and another with 8, 9, 10, 11, 12. Both have the same mean but differ in dispersion.

- 😀 The mean of both data sets is calculated and found to be 10, but the data sets are clearly different in terms of spread.

- 😀 The first data set has more dispersion, with values more spread out from the mean compared to the second data set.

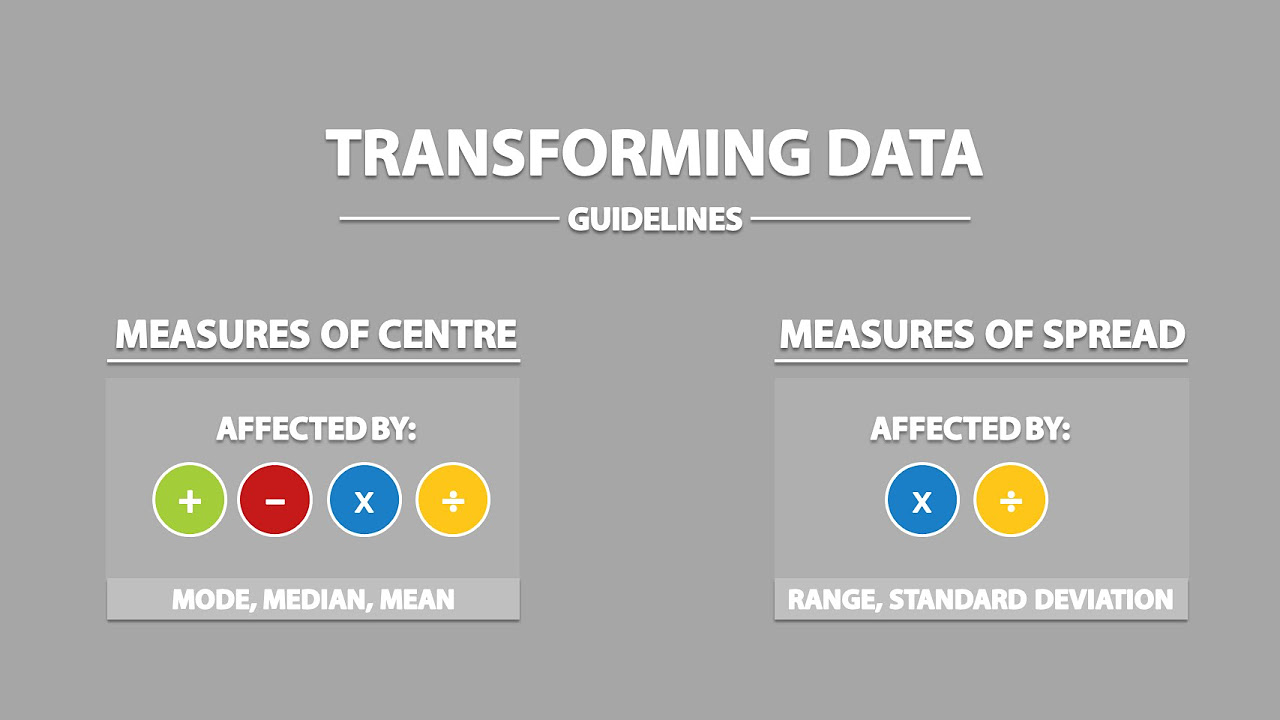

- 😀 The range is one simple measure of dispersion, calculated as the difference between the largest and smallest values in a data set.

- 😀 The range of the first data set is 40, while the range of the second data set is only 4.

- 😀 While the range provides some insight, it doesn't always tell the full story about how the data points are distributed.

- 😀 Variance is a more commonly used measure of dispersion, calculated by squaring the differences between each data point and the mean, then averaging them.

- 😀 The variance of the first data set is 200, and the variance of the second data set is 2, indicating that the first set is much more dispersed.

- 😀 Variance can result in awkward units, such as meters squared, so the standard deviation, the square root of the variance, is often used instead to give a more intuitive understanding of dispersion.

- 😀 The standard deviation of the first data set is 10√2, which is much larger than the standard deviation of the second data set, which is √2, reflecting the greater spread in the first data set.

Q & A

What is the main focus of this video?

-The video focuses on understanding how spread apart the data is, alongside representing the central tendency (average) of a data set.

How do the two data sets presented in the video compare in terms of their central tendency?

-Both data sets have the same arithmetic mean of 10, despite having very different distributions.

What is the arithmetic mean of the first data set, which consists of -10, 0, 10, 20, and 30?

-The arithmetic mean of this data set is 10, calculated as the sum of the data points divided by the number of data points (50/5).

What is the range of the first data set, and how is it calculated?

-The range of the first data set is 40, calculated by subtracting the smallest number (-10) from the largest number (30).

What does the range tell us about the data set?

-The range provides a simple measure of dispersion by showing the difference between the largest and smallest values in the data set. A larger range suggests more dispersion.

How does the variance measure dispersion?

-Variance measures dispersion by calculating the average of the squared differences between each data point and the mean, providing a more nuanced understanding of how spread out the data is.

What is the variance of the first data set and how is it calculated?

-The variance of the first data set is 200, calculated by squaring the differences between each data point and the mean (10), summing them, and dividing by the number of data points (1000/5).

What is the significance of the variance being larger in one data set compared to the other?

-A larger variance indicates that the data points are more spread out from the mean, suggesting higher dispersion. In this case, the first data set has a higher variance (200), indicating more dispersion than the second set (variance of 2).

Why is the standard deviation often preferred over variance?

-Standard deviation is preferred because it is the square root of the variance, which returns the measure of dispersion to the original units of the data, making it easier to interpret.

How do the standard deviations of the two data sets compare?

-The standard deviation of the first data set is 10 times larger than the second data set. This means the first set has more spread, on average, than the second set.

Outlines

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowMindmap

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowKeywords

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowHighlights

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowTranscripts

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowBrowse More Related Video

5.0 / 5 (0 votes)