WHAT IS KUBERNETES? - Introduction to the most widely used orchestrator

Summary

TLDR: This video offers an introductory explanation of Kubernetes, a container orchestration tool originally created by Google and now open source. It explains how Kubernetes manages applications across multiple servers, keeping them highly available and reducing downtime by running replicas of each application. It covers the basic architecture of Masters and Workers and introduces the key components that run on the Masters: the API server, the controller manager, and the scheduler. It also explains Pods, Services, and the use of declarative manifests to deploy applications, highlighting how Kubernetes simplifies managing containers at scale.

Takeaways

- 😀 Kubernetes is a tool for managing containerized applications across multiple servers, simplifying deployment and scaling.

- 🔧 Docker is used to create containerized applications, ensuring they run the same way on any machine, avoiding 'it works on my machine' problems.

- 👨‍💻 Developers can focus on creating and modifying applications without needing to coordinate with system administrators for configuration.

- 🚀 Kubernetes was created by Google and is now open-source, allowing anyone to download, modify, and improve it.

- 🌐 Kubernetes solves the problem of managing a large number of containers across many nodes, providing high availability and reducing downtime.

- 🔄 High availability in Kubernetes is achieved by creating replicas of applications, ensuring traffic is redirected to functioning copies if one fails.

- 📈 Kubernetes also handles scalability: it can create additional copies of an application to absorb increased traffic or capacity needs.

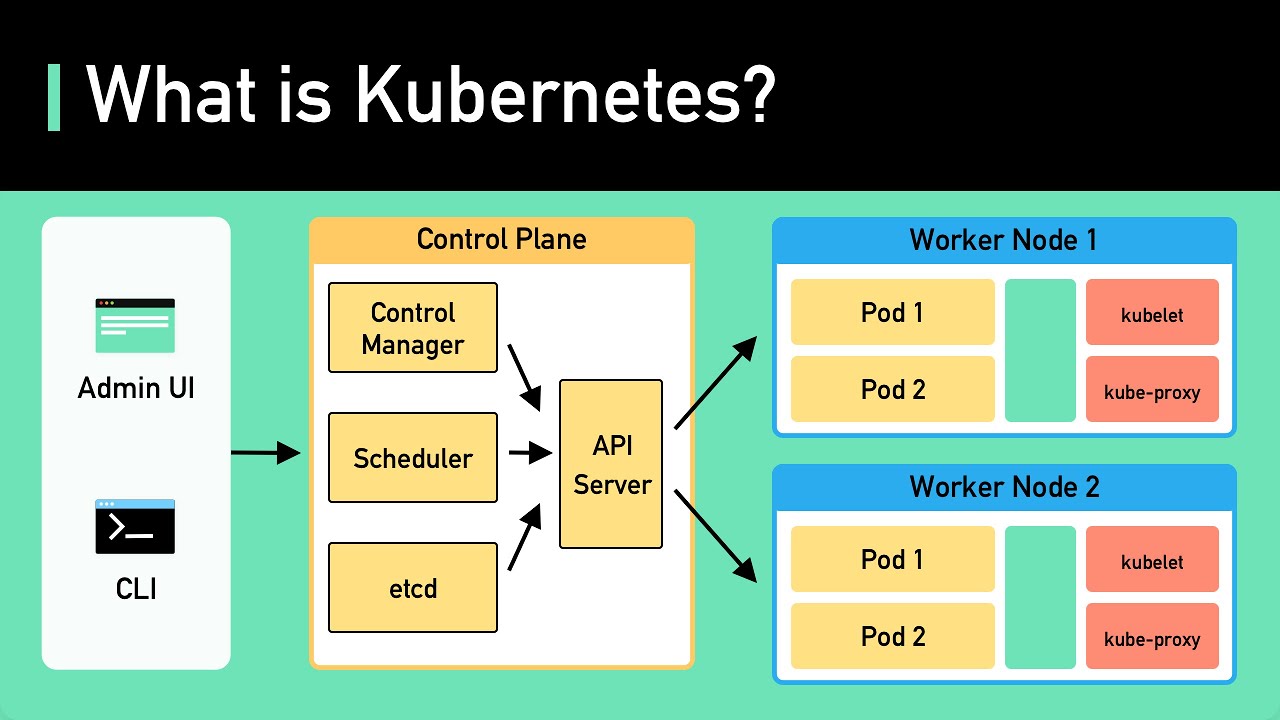

- 🛠 Kubernetes architecture consists of two main groups: Masters and Workers, with the Masters running several components like the API server, controller manager, and scheduler.

- 📦 A Pod in Kubernetes is a group of one or more containers that share a network namespace and a single IP address. Most Pods run a single container, but a Pod can run several when a task is divided among cooperating containers.

- 🔗 An overlay network lets Pods on different nodes communicate with each other, regardless of which node each Pod runs on.

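- 🌐 Services in Kubernetes provide a stable way to access applications. Service types such as ClusterIP, NodePort, and LoadBalancer control how traffic reaches them, and an Ingress (a separate resource, not a Service type) routes external HTTP(S) traffic to Services.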
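The high-availability idea from the takeaways (traffic is redirected to working copies when one fails) can be illustrated with a toy Python model. This is not how Kubernetes is implemented: `Replica` and `route` are hypothetical names, health is reduced to a boolean, and plain round-robin stands in for whatever load distribution the cluster actually uses.

```python
from dataclasses import dataclass
from itertools import cycle


@dataclass
class Replica:
    """One copy of an application (hypothetical model, not a Kubernetes object)."""
    name: str
    healthy: bool = True


def route(requests: int, replicas: list[Replica]) -> list[str]:
    """Send each request to the next healthy replica in round-robin order."""
    healthy = [r.name for r in replicas if r.healthy]
    if not healthy:
        raise RuntimeError("no healthy replicas: the application is down")
    targets = cycle(healthy)  # endless iterator over the healthy copies
    return [next(targets) for _ in range(requests)]


replicas = [Replica("web-1"), Replica("web-2"), Replica("web-3")]
print(route(4, replicas))  # ['web-1', 'web-2', 'web-3', 'web-1']

replicas[1].healthy = False  # simulate web-2 crashing
print(route(4, replicas))  # ['web-1', 'web-3', 'web-1', 'web-3']
```

As long as at least one replica is healthy, every request still gets an answer; downtime only occurs when all copies fail at once, which is why running several replicas matters.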

Q & A

What is the main topic of the video?

-The main topic of the video is Kubernetes, explaining what it is, its purpose, and how it works.

What is Docker and how does it relate to Kubernetes?

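-Docker is a tool for running applications in containers, which makes an application run the same way on any machine. Kubernetes relates to it as an orchestrator: it manages those containers across multiple servers. Images built with Docker run on Kubernetes, although current Kubernetes versions run them through a container runtime such as containerd rather than requiring Docker itself.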

What problem does Kubernetes solve that Docker does not?

-Kubernetes solves the problem of managing containers across many servers, providing high availability, scaling, and disaster recovery, which Docker does not handle on its own.

What is an orchestrator in the context of container management?

-An orchestrator is a tool that manages the deployment, scaling, and operations of application containers across a cluster of hosts.
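An orchestrator's core job is often described as a control loop: compare the declared (desired) state with what is actually running, then act to close the gap. A minimal Python sketch, assuming a single application whose copies are just name strings; `reconcile` and the `app-N` naming are hypothetical and stand in for the real controllers that run in Kubernetes' controller manager.

```python
from itertools import count


def reconcile(desired: int, running: list[str], ids: count) -> list[str]:
    """One pass of a toy control loop: make the running set match the desired count.

    `running` is the observed state and `desired` is the declared state; the
    function returns the new observed state after starting or stopping copies.
    """
    pods = list(running)  # copy so the caller's list is not mutated
    while len(pods) < desired:
        pods.append(f"app-{next(ids)}")  # too few copies: start one
    while len(pods) > desired:
        pods.pop()  # too many copies: stop the most recently added one
    return pods


ids = count(1)
state = reconcile(3, [], ids)      # ['app-1', 'app-2', 'app-3']
state.remove("app-2")              # simulate a crash
state = reconcile(3, state, ids)   # ['app-1', 'app-3', 'app-4']
state = reconcile(2, state, ids)   # ['app-1', 'app-3']
print(state)
```

Running the loop repeatedly is what turns a one-time instruction ("run three copies") into an ongoing guarantee: a crashed copy is simply another gap to close on the next pass.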

Who originally created Kubernetes and what is its current status?

-Kubernetes was originally created by Google and is now an open-source project that anyone can download, use, and modify.

What does Kubernetes provide in terms of application management?

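-Kubernetes provides high availability by running replicas of each application, scaling to handle varying traffic, and disaster recovery: because the desired state of each application is written down in declarative manifests, the cluster can recreate the applications from those manifests after a failure.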
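For the scaling part, Kubernetes' Horizontal Pod Autoscaler (not named in the video) documents a proportional rule: desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue). The Python sketch below covers only that arithmetic; the function name and the default bounds of 1 and 10 replicas are assumptions, and the real autoscaler applies further rules (such as a tolerance band) that are left out here.

```python
import math


def desired_replicas(current: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10) -> int:
    """Scale the replica count in proportion to how far the metric is from its target."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current * current_metric / target_metric)
    # Keep the result inside the configured bounds.
    return max(min_replicas, min(max_replicas, raw))


# 3 replicas averaging 90% CPU against a 60% target -> scale out.
print(desired_replicas(3, 90, 60))  # 5
# Traffic drops: 5 replicas at 20% CPU against a 60% target -> scale in.
print(desired_replicas(5, 20, 60))  # 2
```

Rounding up errs on the side of capacity: 4.5 replicas' worth of load becomes 5 replicas rather than 4.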

What are the two main components of a Kubernetes architecture?

-The two main components of a Kubernetes architecture are the Masters and the Workers. The Masters manage the cluster, while the Workers run the applications in containers.
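One of the Masters' components, the scheduler, decides which Worker runs each new Pod. The Kubernetes scheduler works in two broad phases, filtering out nodes that cannot fit a Pod and then scoring the remaining ones; the Python sketch below imitates that with a single resource (CPU). `Worker`, `schedule`, and the "most free CPU wins" scoring rule are simplifying assumptions, not the real scheduler's logic.

```python
from dataclasses import dataclass, field


@dataclass
class Worker:
    """A worker node with a CPU capacity in millicores (hypothetical model)."""
    name: str
    cpu_capacity: int
    pods: dict[str, int] = field(default_factory=dict)  # pod name -> CPU request

    @property
    def cpu_free(self) -> int:
        return self.cpu_capacity - sum(self.pods.values())


def schedule(pod: str, cpu_request: int, workers: list[Worker]) -> str:
    """Place a pod: keep workers with enough free CPU, then pick the least loaded."""
    fits = [w for w in workers if w.cpu_free >= cpu_request]  # filtering step
    if not fits:
        raise RuntimeError(f"pod {pod} is unschedulable: no worker has {cpu_request}m free")
    best = max(fits, key=lambda w: w.cpu_free)  # scoring step: most free CPU wins
    best.pods[pod] = cpu_request
    return best.name


workers = [Worker("worker-1", 2000), Worker("worker-2", 4000)]
print(schedule("api-1", 1500, workers))  # worker-2 (4000m free vs 2000m)
print(schedule("api-2", 1500, workers))  # worker-2 (2500m free vs 2000m)
print(schedule("api-3", 1500, workers))  # worker-1 (worker-2 has only 1000m left)
```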

What is a Pod in Kubernetes and why are they considered volatile?

-A Pod in Kubernetes is a set of containers that share a network space and have a single IP. Pods are considered volatile because they can be destroyed and recreated during deployments, such as version updates, and a recreated Pod typically gets a new IP address.

What is a Service in Kubernetes and why is it used?

-A Service in Kubernetes is an abstraction that defines a logical set of Pods and a policy by which to access them. It is used to provide a stable endpoint for accessing the Pods, which are otherwise volatile.
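Why a Service helps with volatile Pods: clients keep using the Service's fixed address while the set of Pods behind it is recomputed from labels. A toy Python model, assuming simple label-equality selection; `Pod`, `Service`, `endpoints`, and all IP addresses are made up for illustration, and in a real cluster this bookkeeping is done by Kubernetes itself.

```python
from dataclasses import dataclass


@dataclass
class Pod:
    name: str
    ip: str
    labels: dict[str, str]


@dataclass
class Service:
    """A stable name and IP in front of whichever Pods currently match the selector."""
    name: str
    cluster_ip: str
    selector: dict[str, str]

    def endpoints(self, pods: list[Pod]) -> list[str]:
        # A Pod matches when every selector key/value pair appears in its labels.
        return [p.ip for p in pods
                if all(p.labels.get(k) == v for k, v in self.selector.items())]


svc = Service("web", "10.96.0.20", {"app": "web"})
pods = [Pod("web-a", "10.244.1.5", {"app": "web"}),
        Pod("db-a", "10.244.2.7", {"app": "db"})]
print(svc.cluster_ip, svc.endpoints(pods))  # 10.96.0.20 ['10.244.1.5']

# A rollout replaces web-a; the new Pod gets a different IP.
pods[0] = Pod("web-b", "10.244.3.9", {"app": "web"})
print(svc.cluster_ip, svc.endpoints(pods))  # 10.96.0.20 ['10.244.3.9']
```

The client-facing address (`10.96.0.20` here) never changes; only the list of backing Pod IPs does.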

What are some types of Services in Kubernetes?

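-Service types in Kubernetes include ClusterIP, NodePort, LoadBalancer, and ExternalName, each exposing the Pods in a different way. Ingress is often discussed alongside them, but it is a separate resource that routes external HTTP(S) traffic to Services rather than a Service type.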
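Kubernetes accepts manifests in JSON as well as YAML, so a Service of type NodePort can be sketched by building the manifest as a Python dict and printing it; the output could be saved to a file and applied with `kubectl apply -f`. The `web` name, the `app: web` label, and the port numbers are hypothetical; 30080 was chosen to fall inside the default NodePort range of 30000-32767.

```python
import json


def node_port_service(name: str, app_label: str, port: int, target_port: int,
                      node_port: int) -> dict:
    """Build a Service manifest of type NodePort as a plain dict."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "type": "NodePort",  # other types include ClusterIP (default) and LoadBalancer
            "selector": {"app": app_label},  # Pods with this label receive the traffic
            "ports": [{
                "port": port,               # port on the Service's cluster IP
                "targetPort": target_port,  # port the container listens on
                "nodePort": node_port,      # port opened on every node
            }],
        },
    }


manifest = node_port_service("web", "web", 80, 8080, 30080)
print(json.dumps(manifest, indent=2))
```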

How does Kubernetes handle the creation and management of Pods?

-Kubernetes uses Deployments, which contain a template for creating Pods. When a Deployment is applied to a cluster, controllers in the controller manager create the Pods from that template (through an intermediate ReplicaSet), and the scheduler assigns each Pod to a worker node.
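A Deployment with its Pod template can be written as a JSON manifest built from Python. The `web` name, the `nginx:1.25` image, the three replicas, and port 80 are illustrative choices; applying the printed output (for example, saved to a file and passed to `kubectl apply -f`) would ask the cluster to keep three such Pods running.

```python
import json


def deployment(name: str, image: str, replicas: int, container_port: int) -> dict:
    """Build an apps/v1 Deployment manifest whose Pod template runs one container."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,                 # how many Pods to keep running
            "selector": {"matchLabels": labels},  # which Pods this Deployment owns
            "template": {                         # the Pod template
                "metadata": {"labels": labels},   # must match the selector above
                "spec": {
                    "containers": [{
                        "name": name,
                        "image": image,
                        "ports": [{"containerPort": container_port}],
                    }],
                },
            },
        },
    }


print(json.dumps(deployment("web", "nginx:1.25", 3, 80), indent=2))
```

The selector and the template labels must agree; otherwise the Deployment would not recognize the Pods it creates as its own, and the API server rejects such a manifest.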
