Plant Leaf Disease Detection Using CNN | Python

Summary

TLDR

In this YouTube tutorial, the host demonstrates how to build a deep learning model for plant leaf disease classification using Python and TensorFlow. They show a demo application that predicts leaf diseases from images, built on a dataset from Kaggle. The video walks viewers through creating a CNN model, training it with image data augmentation, and compiling it with accuracy as the evaluation metric. The host then explains how to deploy the model in a Flask web application where users can upload images for disease prediction. The tutorial is practical, providing step-by-step coding instructions and emphasizing the importance of data balance and model evaluation.

Takeaways

- 🌟 The video is a tutorial on building a deep learning application for plant disease classification using Python and the Flask web framework.

- 🔍 The demo application allows users to upload images of plant leaves and receive predictions on diseases like rust and powdery mildew.

- 📁 The tutorial uses a dataset from Kaggle, which includes training, testing, and validation sets with images categorized as healthy, rust, or powdery mildew.

- 💾 The data is already split into these sets, so there's no need for additional data partitioning by the user.

- 🔄 The video explains why data augmentation matters: it effectively enlarges the training set by generating varied copies of existing images through random shearing, zooming, and horizontal flipping, alongside rescaling of pixel values, which improves model training.

- 🤖 The model used is a Convolutional Neural Network (CNN) due to the image-based nature of the data, with layers including convolution, max pooling, and fully connected layers.

- 📈 The tutorial covers the process of compiling the model with categorical cross-entropy as the loss function, using the Adam optimizer, and training the model with a specified batch size and number of epochs.

- 📊 The video includes a demonstration of the accuracy and loss curves to evaluate the model's performance and ensure it is neither overfitting nor underfitting.

- 🖼️ The process of pre-processing new images for prediction involves resizing, normalization, and expanding dimensions to match the model's input requirements.

- 🛠️ The video concludes with instructions on building a web application using the trained model, including setting up routes and handling image uploads and predictions in a Flask application.

- 🔗 The source code and additional resources for the web application are available in the video description or on GitHub for further exploration and use.

Q & A

What is the main topic of the video?

-The main topic of the video is building a deep learning application for plant disease classification using Python and TensorFlow.

What is the purpose of the demo application shown in the video?

-The purpose of the demo application is to classify plant leaf diseases by predicting whether a leaf is healthy, affected by rust, or has powdery mildew based on images.

What dataset is used in the video for building the deep learning model?

-The dataset is 'Plant Disease Recognition' from Kaggle, which contains images divided into training, testing, and validation sets for three classes: healthy, powdery, and rust.
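
The TLDR stresses data balance. One quick check is to count the images in each class folder of a split. This is a minimal sketch assuming each split folder holds one subfolder per class (for example Healthy/, Powdery/, Rust/); the folder layout, the extension list, and the helper name `count_images_per_class` are illustrative assumptions, not taken from the video.

```python
from pathlib import Path

# Assumption: the dataset stores images with these extensions.
IMAGE_EXTS = {".jpg", ".jpeg", ".png"}


def count_images_per_class(split_dir):
    """Return {class_name: image_count} for a split laid out as split_dir/<class>/<image>."""
    counts = {}
    for class_dir in sorted(Path(split_dir).iterdir()):
        if class_dir.is_dir():
            # Count only regular files with an image extension (case-insensitive)
            counts[class_dir.name] = sum(
                1
                for f in class_dir.iterdir()
                if f.is_file() and f.suffix.lower() in IMAGE_EXTS
            )
    return counts
```

Calling it on each split (the paths depend on where the Kaggle archive was extracted) shows at a glance whether one class dominates the others.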

What are the three classes of plant diseases considered in the dataset?

-The three classes of plant diseases considered are healthy leaves, leaves with powdery mildew, and leaves with rust.

Why is data augmentation used in this context?

-Data augmentation is used to increase the amount of training data by creating new images from existing ones through techniques like rescaling, shearing, zooming in/out, and horizontal flipping, which helps in improving the model's generalization.
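
The augmentation techniques listed above map directly onto the parameters of Keras's `ImageDataGenerator`. The following is a minimal sketch assuming TensorFlow 2.x and that legacy API (newer Keras releases deprecate it in favour of `tf.keras.utils.image_dataset_from_directory` plus preprocessing layers). The numeric values, the 224x224 target size, and the helper name `make_iterators` are illustrative assumptions, not the video's settings.

```python
import tensorflow as tf

ImageDataGenerator = tf.keras.preprocessing.image.ImageDataGenerator

# Training data: rescale pixels to [0, 1] and apply random shear, zoom and
# horizontal flips, so each epoch sees slightly different versions of each leaf.
train_datagen = ImageDataGenerator(
    rescale=1.0 / 255,
    shear_range=0.2,
    zoom_range=0.2,
    horizontal_flip=True,
)

# Validation/test data: rescale only, so evaluation uses unmodified images.
eval_datagen = ImageDataGenerator(rescale=1.0 / 255)


def make_iterators(train_dir, val_dir, target_size=(224, 224), batch_size=32):
    """Stream one-hot labelled batches from folders laid out as <dir>/<class>/<image>."""
    train_gen = train_datagen.flow_from_directory(
        train_dir, target_size=target_size, batch_size=batch_size,
        class_mode="categorical",
    )
    val_gen = eval_datagen.flow_from_directory(
        val_dir, target_size=target_size, batch_size=batch_size,
        class_mode="categorical",
    )
    return train_gen, val_gen
```

Augmentation is applied only to the training generator; augmenting validation data would make the validation accuracy less representative of real images.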

What type of model is being built in the tutorial?

-A Convolutional Neural Network (CNN) model is being built in the tutorial, which is suitable for image data.
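
A small CNN of the kind described (convolution, max pooling, then fully connected layers ending in a softmax), compiled with the Adam optimizer and categorical cross-entropy, can be sketched in Keras as follows. The layer counts, filter sizes, and the 224x224x3 input shape are assumptions for illustration; the input shape must match the `target_size` used when loading images.

```python
import tensorflow as tf

NUM_CLASSES = 3              # healthy, powdery mildew, rust
INPUT_SHAPE = (224, 224, 3)  # assumption: must match the image loader's target size


def build_model(input_shape=INPUT_SHAPE, num_classes=NUM_CLASSES):
    model = tf.keras.Sequential([
        tf.keras.Input(shape=input_shape),
        # Convolution + max-pooling blocks learn local patterns such as spots or powdery patches
        tf.keras.layers.Conv2D(32, (3, 3), activation="relu"),
        tf.keras.layers.MaxPooling2D((2, 2)),
        tf.keras.layers.Conv2D(64, (3, 3), activation="relu"),
        tf.keras.layers.MaxPooling2D((2, 2)),
        # Flatten the feature maps and classify with fully connected layers
        tf.keras.layers.Flatten(),
        tf.keras.layers.Dense(64, activation="relu"),
        tf.keras.layers.Dense(num_classes, activation="softmax"),
    ])
    # Categorical cross-entropy expects one-hot labels (class_mode="categorical")
    model.compile(optimizer="adam", loss="categorical_crossentropy", metrics=["accuracy"])
    return model
```

Training would then be a call such as `model.fit(train_batches, validation_data=val_batches, epochs=...)`, where the batch iterators and epoch count are whatever the data pipeline provides.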

How does the video demonstrate the model's performance?

-The video demonstrates the model's performance by showing the accuracy on the training and validation sets, as well as by plotting accuracy and loss curves.
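
Accuracy and loss curves of this kind are usually drawn from the `history` dictionary returned by Keras `model.fit`. This is a minimal matplotlib sketch; it assumes the model was compiled with `metrics=["accuracy"]` and trained with validation data, so the dictionary contains `accuracy`, `val_accuracy`, `loss`, and `val_loss`. The function name `plot_history` is hypothetical.

```python
import matplotlib

matplotlib.use("Agg")  # off-screen backend so this also runs without a display
import matplotlib.pyplot as plt


def plot_history(history):
    """Plot training vs validation accuracy and loss from a Keras History.history dict."""
    epochs = range(1, len(history["accuracy"]) + 1)
    fig, (ax_acc, ax_loss) = plt.subplots(1, 2, figsize=(10, 4))
    for ax, metric in ((ax_acc, "accuracy"), (ax_loss, "loss")):
        ax.plot(epochs, history[metric], label="training")
        ax.plot(epochs, history["val_" + metric], label="validation")
        ax.set_title(metric.capitalize())
        ax.set_xlabel("epoch")
        ax.legend()
    fig.tight_layout()
    return fig
```

A widening gap, where training accuracy keeps rising while validation accuracy stalls or validation loss climbs, is the usual sign of overfitting; both curves staying low suggests underfitting.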

What activation function is used in the final layer of the CNN model?

-A softmax activation is used in the final layer because this is a multiclass classification problem: it converts the layer's three raw scores into probabilities, one per class, that sum to 1.
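
What softmax does to the final layer's scores can be shown with NumPy alone. The raw scores below are made-up numbers, and the class order is an assumption: Keras's `flow_from_directory` assigns class indices in sorted order of the class folder names, so folders named Healthy, Powdery, and Rust would map to indices 0, 1, and 2.

```python
import numpy as np

# Assumption: class folders named like this, indexed in sorted (alphabetical) order
CLASS_NAMES = ["Healthy", "Powdery", "Rust"]


def softmax(logits):
    z = np.asarray(logits, dtype=float)
    # Subtracting the max avoids overflow in exp() without changing the result
    exp = np.exp(z - z.max())
    return exp / exp.sum()


probs = softmax([2.0, 0.5, -1.0])  # made-up raw scores for one image
print(dict(zip(CLASS_NAMES, probs.round(3))))
print("Predicted:", CLASS_NAMES[int(np.argmax(probs))])
```

The predicted label is simply the class with the highest probability, which is how a web app would turn the model's output into "healthy", "powdery", or "rust".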

How can the trained model be utilized in a web application?

-The trained model can be saved as a file (e.g., model.h5) and then integrated into a web application using a framework like Flask, where users can upload images to get predictions.
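
The upload-and-predict flow can be sketched as a small Flask app. This is not the video's code: it returns JSON instead of rendering an HTML template, and the factory `create_app`, the `/predict` route, the form field name `file`, and the injected `predict_fn` are all hypothetical choices that keep the web layer testable without TensorFlow.

```python
import os
import tempfile

from flask import Flask, jsonify, request
from werkzeug.utils import secure_filename


def create_app(predict_fn, upload_dir=None):
    """Hypothetical app factory: predict_fn maps a saved image path to a class label."""
    app = Flask(__name__)
    app.config["UPLOAD_FOLDER"] = upload_dir or tempfile.mkdtemp()

    @app.route("/predict", methods=["POST"])
    def predict():
        file = request.files.get("file")
        if file is None or file.filename == "":
            return jsonify(error="no file uploaded"), 400
        # Sanitize the client-supplied name before using it as a filesystem path
        filename = secure_filename(file.filename)
        if not filename:
            return jsonify(error="invalid filename"), 400
        path = os.path.join(app.config["UPLOAD_FOLDER"], filename)
        file.save(path)
        return jsonify(prediction=predict_fn(path))

    return app
```

In a full app, `predict_fn` would load the saved `model.h5` once with `tf.keras.models.load_model`, preprocess the uploaded image the same way as the training data, and map the `argmax` of the softmax output to a class name.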

What is the significance of the 'pre-process_image' function in the context of the video?

-The 'pre-process_image' function prepares new images for the model: it resizes them to the CNN's required input size, converts them into a NumPy array, normalizes the pixel values, and expands the dimensions to add the batch axis the model expects.
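
Those preprocessing steps can be sketched with Pillow and NumPy. This assumes a 224x224 model input and the same 1/255 rescaling used during training; the name `preprocess_image` is a stand-in for the video's function, whose exact code is not shown here.

```python
import numpy as np
from PIL import Image


def preprocess_image(image_path, target_size=(224, 224)):
    """Load an image as a (1, H, W, 3) float32 batch with values in [0, 1]."""
    with Image.open(image_path) as img:
        rgb = img.convert("RGB")  # unify grayscale/RGBA inputs to 3 channels
    # Note: PIL's resize takes (width, height); Keras target_size is (height, width)
    rgb = rgb.resize(target_size)
    arr = np.asarray(rgb, dtype=np.float32) / 255.0  # same rescaling as training
    return np.expand_dims(arr, axis=0)  # add the batch dimension
```

The returned array can be passed straight to `model.predict`, which always expects a batch, even for a single image.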

How does the video ensure the model is not overfitting or underfitting?

-The video checks that the model is neither overfitting nor underfitting by comparing accuracy on the training set with accuracy on the validation set: there is no significant gap between the two, which indicates that the model generalizes well.

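What is the role of 'secure_file' in the web application code?

-This most likely refers to Werkzeug's `secure_filename` helper, which sanitizes the name of an uploaded file before it is saved, stripping path separators such as `../` and other unsafe characters so that a crafted filename cannot write outside the upload folder. It only cleans the name; it does not verify the file's type or contents.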
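
Flask upload handlers usually sanitize filenames with Werkzeug's `secure_filename`; the summary does not show the video's code, so treating 'secure_file' as this helper is an assumption. A short demonstration of what it does to client-supplied names:

```python
from werkzeug.utils import secure_filename

# Client-supplied names are reduced to flat, filesystem-safe names
for name in ["../../../etc/passwd", "My cool movie.mov", "leaf image (1).jpg"]:
    print(repr(name), "->", repr(secure_filename(name)))
```

Checking that the upload really is an image, for example against a list of allowed extensions, remains a separate step.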

Upgrade NowBrowse More Related Video

PNEUMONIA Detection Using Deep Learning in Tensorflow, Keras & Python | KNOWLEDGE DOCTOR |

Deep Learning Project Environment Setup | Installing Tensorflow Cudatoolkit Nvidia driver in Windows

Tutorial Klasifikasi Teks dengan Long Short-term Memory (LSTM): Studi Kasus Teks Review E-Commerce

Image Classification App | Teachable Machine + TensorFlow Lite

Plant Disease Detection System using Deep Learning Part-2 | Data Preprocessing using Keras API

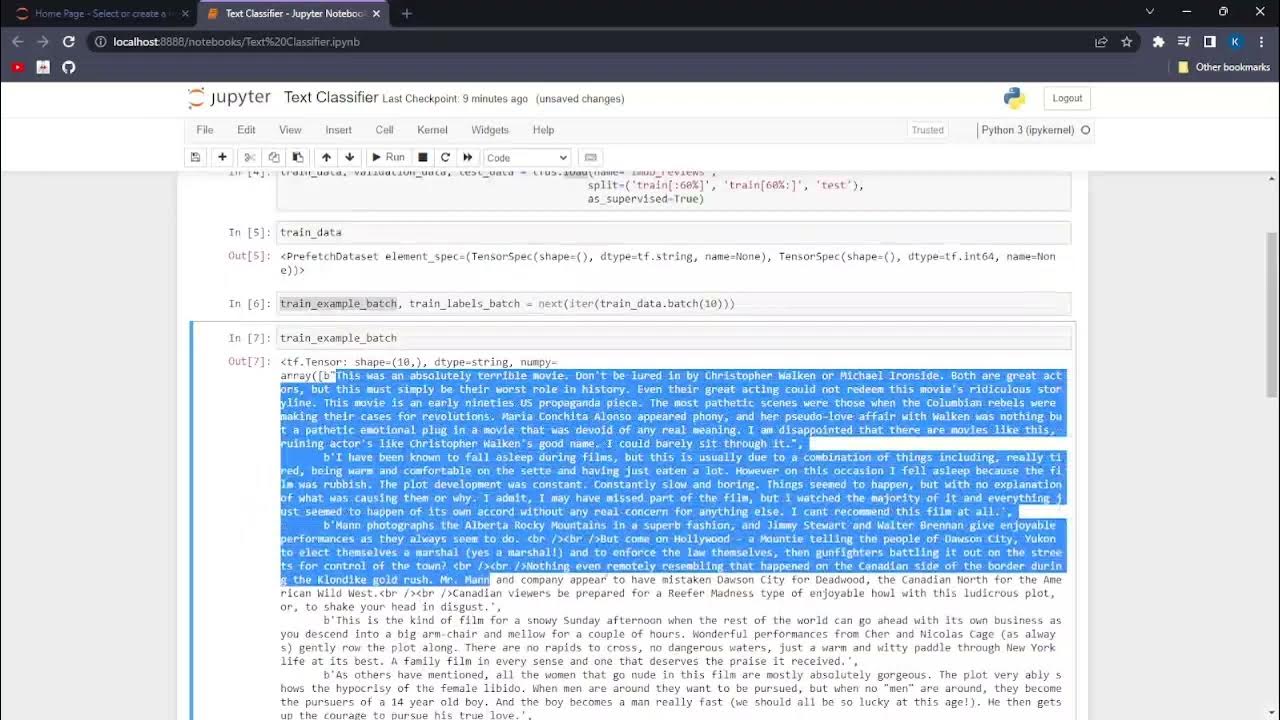

AIP.NP1.Text Classification with TensorFlow

5.0 / 5 (0 votes)