What is Apache Hive? : Understanding Hive

Summary

TLDR: Apache Hive is a core component of the Big Data landscape, originally developed by Facebook and now maintained by the Apache Software Foundation. It bridges the gap between traditional SQL and the Hadoop ecosystem by letting users run SQL-like queries (HiveQL, also called HQL) on large datasets stored in HDFS. Hive is well suited to OLAP and analytics but not to OLTP or real-time data processing, because of its inherent latency. It supports various file formats, offers compression techniques, and enables the use of UDFs. Unlike a traditional RDBMS, Hive operates on a 'schema on read' model, which suits massive data volumes kept in scalable storage on HDFS.

Takeaways

- 🐝 **What is Hive?** - Apache Hive is a data warehouse software that facilitates reading, writing, and managing large datasets in distributed storage using SQL-like queries.

- 🚀 **Development and Maintenance** - Originally developed by Facebook, Hive is now maintained by the Apache Software Foundation and widely used by companies like Netflix and Amazon.

- 🔍 **Purpose of Hive** - It was created to make Hadoop more accessible to SQL users by allowing them to run queries using a SQL-like language called HiveQL.

- 🧩 **Integration with Hadoop** - Hive interfaces with the Hadoop ecosystem and HDFS file system, converting HiveQL queries into MapReduce jobs for processing.

- 📊 **Use Cases for Hive** - It's ideal for OLAP (Online Analytical Processing), providing a scalable and flexible platform for querying large datasets stored in HDFS.

- ❌ **Limitations of Hive** - Not suitable for OLTP (Online Transaction Processing), real-time updates, or scenarios requiring low-latency data retrieval due to the overhead of converting Hive scripts to MapReduce jobs.

- 📚 **Features of Hive** - Supports various file formats, stores metadata in RDBMS, offers compression techniques, and allows for user-defined functions (UDFs) to extend functionality.

- 🔄 **Schema Enforcement** - Hive operates on a 'schema on read' model, which means it doesn't enforce schema during data ingestion but rather when data is queried.

- 📈 **Scalability** - Unlike a traditional RDBMS, which the source describes as typically limited to around 10 terabytes of storage, Hive can handle hundreds of petabytes because its data lives in HDFS.

- 📋 **Comparison with RDBMS** - Hive differs from traditional RDBMS in that it doesn't support OLTP and enforces schema on read rather than on write, making it more suitable for big data analytics than transactional databases.

Q & A

What is Apache Hive?

-Apache Hive is a data warehouse software project built on top of Apache Hadoop for providing data query and analysis. It was originally developed by Facebook and is now maintained by the Apache Software Foundation.

Why was Hive developed?

-Hive was developed to provide a SQL-like interface (Hive Query Language or HQL) for users to interact with the Hadoop ecosystem, making it easier for those familiar with SQL to work with large volumes of data stored in Hadoop.

How does Hive complement the Hadoop ecosystem?

-Hive complements the Hadoop ecosystem by allowing users to run SQL-like queries on large datasets in Hadoop, which are then converted into MapReduce jobs for processing.
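The query-to-MapReduce step described above can be sketched in plain Python. This is only a conceptual illustration, not Hive's actual planner: it shows how an aggregate query such as `SELECT country, COUNT(*) FROM page_views GROUP BY country` breaks down into map, shuffle, and reduce phases. The table name `page_views`, its columns, and the sample rows are assumptions made up for this example.

```python
from collections import defaultdict

# Hypothetical rows of a "page_views" table: (user_id, country).
ROWS = [
    ("u1", "US"),
    ("u2", "IN"),
    ("u3", "US"),
    ("u4", "DE"),
    ("u5", "IN"),
    ("u6", "US"),
]


def map_phase(rows):
    """Emit one (key, 1) pair per row, keyed by the GROUP BY column."""
    for _user_id, country in rows:
        yield country, 1


def shuffle_phase(pairs):
    """Collect all values that share a key, as happens between map and reduce."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(grouped):
    """Aggregate the values of each key; here the aggregate is COUNT(*)."""
    return {key: sum(values) for key, values in grouped.items()}


def count_by_country(rows):
    """Run the three phases in order and return {country: row count}."""
    return reduce_phase(shuffle_phase(map_phase(rows)))


if __name__ == "__main__":
    print(count_by_country(ROWS))
```

On a real cluster each phase runs in parallel across many machines and job startup adds fixed overhead, which is where the latency mentioned in this summary comes from.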

What are the main use cases for Hive?

-Hive is primarily used for OLAP (Online Analytical Processing), allowing for scalable, fast, and flexible data analysis on large datasets residing on the Hadoop Distributed File System (HDFS).

When is Hive not suitable for use?

-Hive is not suitable for OLTP (Online Transaction Processing), real-time updates or queries, or scenarios requiring low-latency data retrieval due to the inherent latency in converting Hive scripts into MapReduce jobs.

What file formats does Hive support?

-Hive supports various file formats including Sequence File, Text File, Avro, ORC, and RC file formats.

Where does Hive store its metadata?

-Hive stores its metadata in an RDBMS like Apache Derby, which allows for metadata management and query optimization.

What are some of the key features of Hive?

-Hive features include support for different file formats, compression techniques, user-defined functions (UDFs), and specialized joins to improve query performance.
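As a rough illustration of extending Hive with custom logic: native Hive UDFs are usually written in Java, but Hive can also stream rows through an external script using the `TRANSFORM ... USING` clause, which by default passes rows as tab-separated lines. The sketch below shows the row-level logic such a script might contain. It is not a native UDF, and the function name `transform` and the two-column layout (`id`, `name`) are assumptions for this example.

```python
def transform(lines):
    """Upper-case the second column of each tab-separated input row.

    Rows with fewer than two columns are passed through unchanged.
    """
    for line in lines:
        fields = line.rstrip("\n").split("\t")
        if len(fields) >= 2:
            fields[1] = fields[1].upper()
        yield "\t".join(fields)


if __name__ == "__main__":
    # Sample rows stand in for what Hive would send on standard input.
    sample = ["1\talice\n", "2\tbob\n"]
    for row in transform(sample):
        print(row)
```

In an actual streaming script the loop would iterate over `sys.stdin` instead of the sample list, and a query along the lines of `SELECT TRANSFORM(id, name) USING 'python upper_name.py' AS (id, name) FROM users;` would invoke it (the script and table names here are hypothetical).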

How does Hive's schema enforcement differ from traditional RDBMS?

-Hive enforces schema on read, meaning data can be inserted without checking the schema, which is verified only upon reading. Traditional RDBMS enforce schema on write, verifying data during insertion.
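The difference between the two models can be sketched in a few lines of Python. This is a simplified illustration, not how Hive or any RDBMS is implemented: the schema, the sample lines, and all function names are assumptions for the example. It mirrors the behavior commonly seen with Hive text tables, where a value that does not fit the declared column type is read back as NULL.

```python
# Hypothetical table schema: (column name, Python type used to parse it).
SCHEMA = [("id", int), ("name", str), ("age", int)]

# Raw comma-separated lines; the second and third do not fit the schema.
RAW_LINES = ["1,alice,34", "2,bob,not_a_number", "3,carol"]


def write_schema_on_write(table, line):
    """RDBMS style: validate while inserting and reject rows that do not fit."""
    fields = line.split(",")
    if len(fields) != len(SCHEMA):
        raise ValueError(f"expected {len(SCHEMA)} columns, got {len(fields)}")
    # int("not_a_number") raises ValueError, so bad rows never reach the table.
    row = tuple(cast(value) for (_name, cast), value in zip(SCHEMA, fields))
    table.append(row)


def write_schema_on_read(storage, line):
    """Hive style: store the raw line as-is, with no validation."""
    storage.append(line)


def read_with_schema(storage):
    """Apply the schema at query time; values that do not fit become None."""
    for line in storage:
        fields = line.split(",")
        row = []
        for index, (_name, cast) in enumerate(SCHEMA):
            try:
                row.append(cast(fields[index]))
            except (IndexError, ValueError):
                row.append(None)
        yield tuple(row)


if __name__ == "__main__":
    storage = []
    for line in RAW_LINES:
        write_schema_on_read(storage, line)
    print(list(read_with_schema(storage)))
```

Schema on read makes loading cheap, since files are simply placed in storage, at the cost of discovering malformed data only when a query touches it.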

What is the storage capacity difference between Hive and traditional RDBMS?

-Hive can store hundreds of petabytes of data in HDFS, whereas a traditional RDBMS, according to the source, typically tops out at around 10 terabytes.

Does Hive support OLTP operations?

-No, Hive does not support OLTP operations as it is designed for batch processing and analytics rather than transactional processing.

Outlines

このセクションは有料ユーザー限定です。 アクセスするには、アップグレードをお願いします。

今すぐアップグレードMindmap

このセクションは有料ユーザー限定です。 アクセスするには、アップグレードをお願いします。

今すぐアップグレードKeywords

このセクションは有料ユーザー限定です。 アクセスするには、アップグレードをお願いします。

今すぐアップグレードHighlights

このセクションは有料ユーザー限定です。 アクセスするには、アップグレードをお願いします。

今すぐアップグレードTranscripts

このセクションは有料ユーザー限定です。 アクセスするには、アップグレードをお願いします。

今すぐアップグレード関連動画をさらに表示

What is Apache Iceberg?

System Design: Apache Kafka In 3 Minutes

1. Intro to Big Data

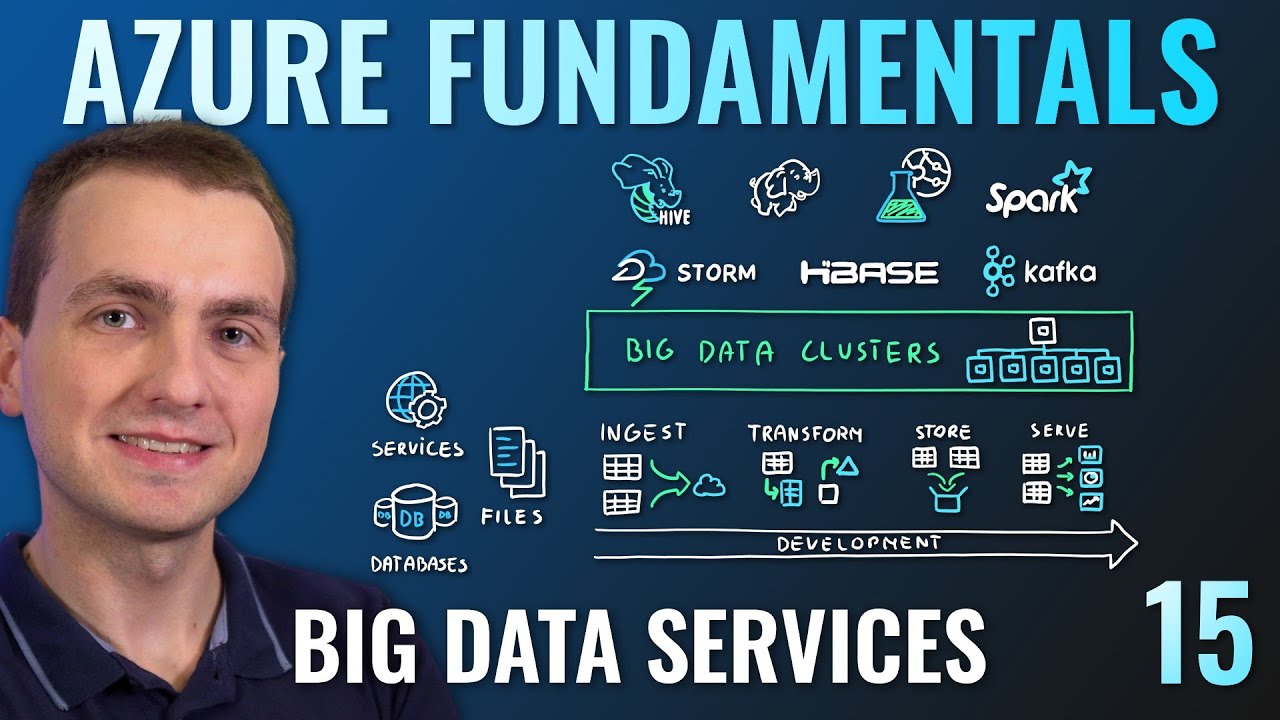

AZ-900 Episode 15 | Azure Big Data & Analytics Services | Synapse, HDInsight, Databricks

Introduction Hadoop: Big Data – Apache Hadoop & Hadoop Eco System (Part2 ) Big Data Analyticts

Hadoop Pig Tutorial | What is Pig In Hadoop? | Hadoop Tutorial For Beginners | Simplilearn

5.0 / 5 (0 votes)