Intro to Markov Chains & Transition Diagrams

Summary

TLDR

In this video, the concept of Markov chains is introduced through a superhero who travels at random between connected cities. Markov chains are statistical processes in which the next state depends only on the present state, not on past events. The video contrasts this with a non-Markovian process, in which past states are taken into account. It also explores a real-life application: modeling the stock market with transition probabilities between bull and bear markets. The video concludes by showing how to predict future states with transition and tree diagrams, and notes that long-term predictions call for more efficient tools such as matrices.

Takeaways

- 😀 Markov chains are statistical processes where future states only depend on the present, not the past.

- 😀 A Markov chain example involves a superhero traveling between cities, making random choices based only on their current location.

- 😀 The superhero's decision-making follows the Markov principle: given the current city, the next move is independent of all prior moves.

- 😀 A non-Markovian process, on the other hand, considers the history of past states in its decision-making.

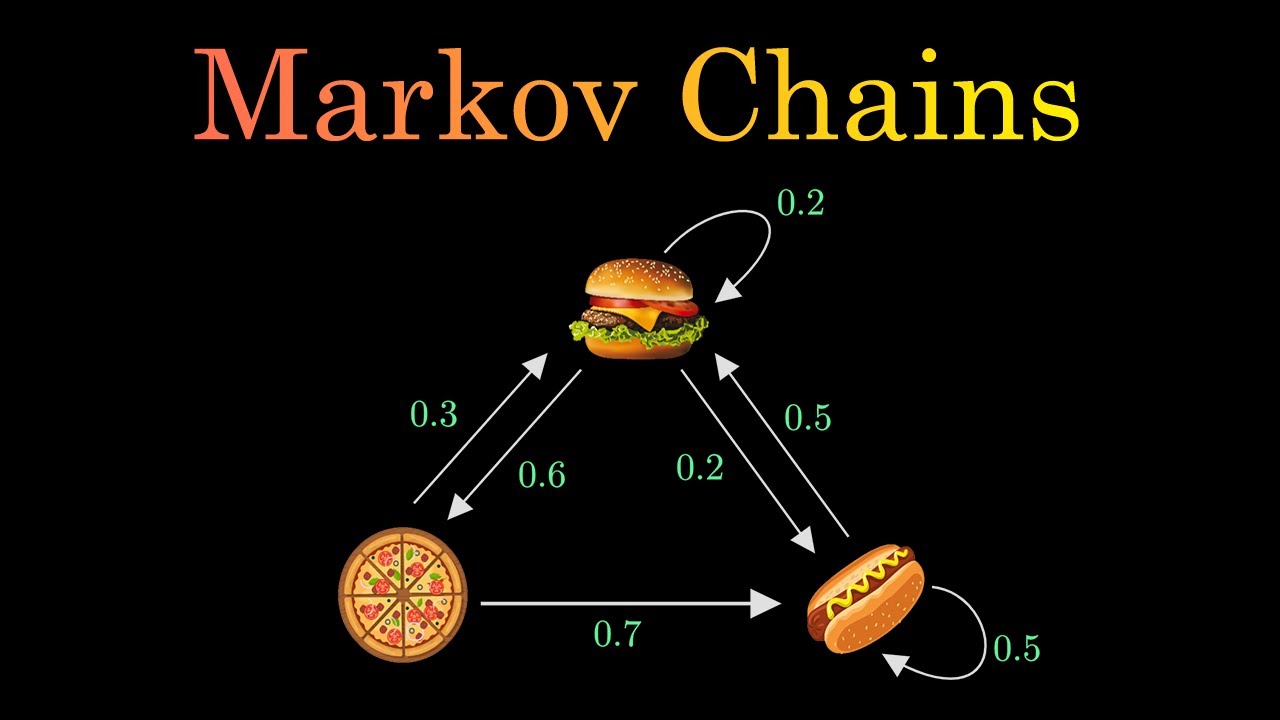

- 😀 Transition diagrams represent all possible states and the probabilities of transitioning between them in a Markov process.

- 😀 In the stock market example, a Markov chain models whether next week will be a bull or bear market based only on the current week's market state.

- 😀 Transition probabilities can be derived from historical data, like a 75% chance of a bull market following a bull market.

- 😀 A tree diagram is helpful for predicting multiple future steps in a Markov process, such as projecting two weeks ahead in the stock market example.

- 😀 To calculate the probability of a specific future outcome, multiply the probabilities along each path in the tree, then add up the results for all paths leading to that outcome.

- 😀 Long-term predictions in a Markov process can become complex, so matrices and linear algebra are introduced as a more efficient method for handling large-scale computations.

Q & A

What is a Markov chain?

-A Markov chain is a sequence of events in which the probability of the next event depends only on the current state, not on the previous events. In the video, this is illustrated by a superhero moving between cities, whose next move is chosen at random based only on the current location.

What makes the superhero in the example a Markovian superhero?

-The superhero is Markovian because they only consider their current city when deciding where to go next, without remembering previous cities they've visited. This random, present-state-dependent behavior exemplifies a Markov process.
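A minimal Python sketch of a Markovian superhero follows. The city map is an assumption: the A → B and B → {A, C, D} links follow the summary's example, but the links out of C and D are made up for illustration. `CONNECTIONS`, `next_city`, and `markov_walk` are hypothetical names; the point is that `next_city` receives only the current city, never the history.

```python
import random

# Hypothetical city map. A -> B and B -> {A, C, D} follow the summary's example;
# the links out of C and D are ASSUMED for illustration only.
CONNECTIONS = {
    "A": ["B"],
    "B": ["A", "C", "D"],
    "C": ["B", "D"],
    "D": ["B", "C"],
}


def next_city(current, rng):
    """Markovian step: the choice depends only on the current city."""
    return rng.choice(CONNECTIONS[current])


def markov_walk(start, steps, seed=0):
    """Return the list of cities visited, starting from `start`."""
    rng = random.Random(seed)
    path = [start]
    for _ in range(steps):
        # Only path[-1] (the present state) is passed in; earlier cities are ignored.
        path.append(next_city(path[-1], rng))
    return path


if __name__ == "__main__":
    print(markov_walk("A", 10))
```

Because each step picks uniformly among the current city's neighbours, this matches the equal 1/3 split out of B; non-uniform probabilities would need a weighted choice, for example `rng.choices(options, weights=...)`.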

How is a non-Markovian superhero different from a Markovian superhero?

-A non-Markovian superhero remembers where they've been and uses this memory to make decisions. Unlike a Markovian superhero, who chooses their next move solely based on their current location, the non-Markovian superhero avoids revisiting cities they've already been to.
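For contrast, a hedged sketch of the non-Markovian superhero, using a hypothetical map (only the A → B and B → {A, C, D} links come from the example; the links out of C and D are assumed). The step function needs the set of visited cities, which is exactly the history a Markov chain is not allowed to use. What the hero does when every neighbour has already been visited is not specified in the summary; here it simply stops, as an assumption.

```python
import random

# Hypothetical city map (links out of C and D are ASSUMED for illustration).
CONNECTIONS = {
    "A": ["B"],
    "B": ["A", "C", "D"],
    "C": ["B", "D"],
    "D": ["B", "C"],
}


def next_city_with_memory(current, visited, rng):
    """Non-Markovian step: the choice depends on the history (the visited set)."""
    options = [c for c in CONNECTIONS[current] if c not in visited]
    if not options:
        return None  # ASSUMPTION: the hero stops once every neighbour was visited
    return rng.choice(options)


def memory_walk(start, seed=0):
    """Walk without revisiting cities; return the path taken."""
    rng = random.Random(seed)
    path = [start]
    visited = {start}
    while True:
        nxt = next_city_with_memory(path[-1], visited, rng)
        if nxt is None:
            return path
        path.append(nxt)
        visited.add(nxt)


if __name__ == "__main__":
    print(memory_walk("A"))
```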

What is a transition diagram in the context of a Markov chain?

-A transition diagram represents all the possible states and the probabilities of moving from one state to another. In the superhero example, the transition diagram illustrates the different cities the superhero can visit and the probability of moving between them.

How are probabilities assigned in a Markov chain transition diagram?

-Each arrow in a transition diagram is labeled with the probability of moving from one state to another, and the probabilities on the arrows leaving any state add up to 1. For instance, a superhero in city A has a 100% chance of moving to city B. From city B, the superhero has a 1/3 chance of going to each of cities A, C, and D.
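One way to store a transition diagram in code, sketched under assumptions: a nested dict mapping each state to its outgoing arrows, with exact `Fraction` probabilities. Only the rows for A and B are given in the example, so rows for C and D are left out rather than invented. `TRANSITIONS`, `check_rows`, and `transition_prob` are hypothetical names.

```python
from fractions import Fraction

# Transition diagram as a nested dict: TRANSITIONS[src][dst] = probability.
# Only the rows for A and B are given in the example; C and D are omitted.
TRANSITIONS = {
    "A": {"B": Fraction(1)},
    "B": {"A": Fraction(1, 3), "C": Fraction(1, 3), "D": Fraction(1, 3)},
}


def check_rows(transitions):
    """Every state's outgoing probabilities must be non-negative and sum to 1."""
    for state, row in transitions.items():
        if any(p < 0 for p in row.values()):
            raise ValueError(f"negative probability out of {state}")
        total = sum(row.values())
        if total != 1:
            raise ValueError(f"row {state} sums to {total}, not 1")


def transition_prob(transitions, src, dst):
    """Probability of moving src -> dst in one step (0 if there is no arrow)."""
    return transitions[src].get(dst, Fraction(0))


check_rows(TRANSITIONS)
print(transition_prob(TRANSITIONS, "B", "C"))
```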

What does a tree diagram help with in a Markov chain?

-A tree diagram helps visualize the possible outcomes over multiple steps in a Markov chain. It is especially useful when predicting future states, as it shows how different pathways and probabilities unfold over time.

How is the stock market example related to Markov chains?

-In the stock market example, the Markov chain models transitions between two states: a bull market and a bear market. The transition probabilities (bull to bull, bull to bear, bear to bull, and bear to bear) are estimated from historical data.
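The summary says the stock-market probabilities come from historical data but does not show the data. Below is a minimal sketch of one common way to estimate them: count consecutive week-to-week transitions and divide by how often each starting state occurs. The toy history is made up for illustration and is not real market data, so its estimates will not match the example's 75% and 40% figures. `estimate_transitions` is a hypothetical helper.

```python
from collections import Counter, defaultdict
from fractions import Fraction


def estimate_transitions(history):
    """Estimate P(next | current) by counting consecutive pairs in `history`."""
    counts = defaultdict(Counter)
    for current, nxt in zip(history, history[1:]):
        counts[current][nxt] += 1
    # Normalise each row by the number of transitions leaving that state.
    return {
        state: {nxt: Fraction(n, sum(row.values())) for nxt, n in row.items()}
        for state, row in counts.items()
    }


# Made-up toy history of weekly labels (NOT real market data).
toy_history = ["bull", "bull", "bear", "bull", "bull", "bull", "bear", "bear", "bull"]
print(estimate_transitions(toy_history))
```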

What is the probability of a bull market two weeks from now if this week is a bull market?

-The probability is 66.25% (about 66%). Two pathways end in a bull market two weeks from now: bull → bull → bull (0.75 × 0.75 = 0.5625) and bull → bear → bull (0.25 × 0.40 = 0.10). Adding the two pathways gives 0.5625 + 0.10 = 0.6625.
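The tree-diagram calculation can be checked with a short Python sketch that enumerates every branch, multiplies the probabilities along it, and adds up the branches ending in a bull market. The bull → bull (0.75) and bear → bull (0.40) figures are from the example; bull → bear (0.25) and bear → bear (0.60) are their complements. `tree_paths` and `prob_in` are hypothetical helper names.

```python
from itertools import product

# Weekly transition probabilities from the stock-market example.
P = {
    "bull": {"bull": 0.75, "bear": 0.25},
    "bear": {"bull": 0.40, "bear": 0.60},
}


def tree_paths(start, steps):
    """Enumerate every branch of the tree diagram with its probability."""
    states = list(P)
    for path in product(states, repeat=steps):
        prob = 1.0
        current = start
        for nxt in path:
            prob *= P[current][nxt]  # multiply along the branch
            current = nxt
        yield (start, *path), prob


def prob_in(start, target, steps):
    """Add up the probabilities of all branches that end in `target`."""
    return sum(p for path, p in tree_paths(start, steps) if path[-1] == target)


print(prob_in("bull", "bull", 2))
```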

Why are transition and tree diagrams insufficient for predicting long-term outcomes in Markov chains?

-A transition diagram shows only one-step probabilities, so predicting several steps ahead requires a tree diagram, and the tree grows with every step: with two states, the number of branches doubles each week, so 100 steps would mean 2^100 branches. For long-term predictions, more efficient methods such as matrices are needed to manipulate and calculate the probabilities.
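To make the growth concrete, a hedged sketch: a full tree diagram over two states has 2^n branches after n steps, whereas pushing the whole probability distribution forward one week at a time costs the same small amount of work per step. This step-by-step update is, in effect, repeated multiplication by the transition matrix. The probabilities are the stock-market example's (bear → bear = 0.60 taken as the complement of 0.40); the helper names are hypothetical.

```python
# Weekly transition probabilities from the stock-market example.
P = {
    "bull": {"bull": 0.75, "bear": 0.25},
    "bear": {"bull": 0.40, "bear": 0.60},
}


def tree_branch_count(num_states, steps):
    """A full tree diagram has num_states ** steps branches after `steps` steps."""
    return num_states ** steps


def step(dist):
    """Push a probability distribution over states forward one week."""
    new = {s: 0.0 for s in P}
    for current, p_current in dist.items():
        for nxt, p in P[current].items():
            new[nxt] += p_current * p
    return new


def distribution_after(start, steps):
    """n-step distribution with work proportional to steps, not 2 ** steps."""
    dist = {s: 0.0 for s in P}
    dist[start] = 1.0
    for _ in range(steps):
        dist = step(dist)
    return dist


print(tree_branch_count(2, 100))
print(distribution_after("bull", 100))
```

`distribution_after("bull", 2)["bull"]` reproduces the two-step tree result of 0.6625, up to floating-point rounding.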

What is the next step in understanding Markov chains after learning about transition and tree diagrams?

-The next step is to use matrices and linear algebra to simplify the manipulation and calculation of probabilities in Markov chains, especially for long-term predictions. The video mentions that this will be covered in the next session.
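The video defers the matrix treatment to its next session. As a hedged preview, here is a plain-Python sketch assuming one common layout (row = current state, column = next state; the video's convention may differ). Squaring the matrix gives the two-step probabilities: its bull → bull entry works out to 0.75 × 0.75 + 0.25 × 0.40 = 0.6625, the same sum the tree diagram produces. `matmul` and `matrix_power` are hypothetical helpers; NumPy's `numpy.linalg.matrix_power` would do the same job.

```python
# Transition matrix for the stock-market example.
# Row = this week's state, column = next week's state; order: [bull, bear].
P = [
    [0.75, 0.25],
    [0.40, 0.60],
]


def matmul(a, b):
    """Plain-Python matrix product a @ b."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def matrix_power(m, n):
    """Compute m ** n by repeated squaring (about log2(n) multiplications)."""
    size = len(m)
    result = [[1.0 if i == j else 0.0 for j in range(size)] for i in range(size)]
    base = m
    while n > 0:
        if n & 1:
            result = matmul(result, base)
        base = matmul(base, base)
        n >>= 1
    return result


P2 = matrix_power(P, 2)
print(P2[0][0])  # probability of bull -> bull in two weeks
```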
